-

Notifications

You must be signed in to change notification settings - Fork 1.1k

Get test_aoi_and_aoi_projection passing on numpy 1.22.0 #1369

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

all pytests passed using that environment variable, so I'll switch to loosening the test tolerance. |

|

Wow, nice work. Do you see a change in https://pvlib-benchmarker.github.io/pvlib-benchmarks/#irradiance.Irradiance.time_aoi_projection ? That test might not use enough data points to get over the python overhead. Just noticed that 5bdad644 slowed it down by more than I would have expected. |

I concur. The case that is failing is zenith=tilt=30, and solar_azimuth=system_azimuth=180, which should return AOI=0 but returns AOI ~ 1.2e-6. Close enough to zero for any use case I can think of. |

|

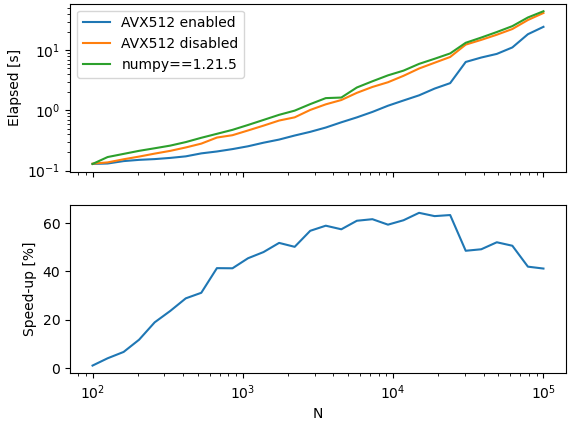

It can apparently be quite a significant speed improvement for numpy-heavy functions. Here is a small comparison for Click to expandimport numpy as np

import pvlib

import timeit

data = []

for N in np.logspace(2, 5, 30).astype(int):

surface_tilt = np.random.random(N)

surface_azimuth = np.random.random(N)

solar_zenith = np.random.random(N)

solar_azimuth = np.random.random(N)

dt = timeit.timeit(lambda: pvlib.irradiance.aoi(surface_tilt, surface_azimuth, solar_zenith, solar_azimuth), number=5000)

data.append({'N': N, 'elapsed': dt})

print(data)As for the ASV results -- I keep managing to fix a problem on the server without actually fixing it, so the current published timings are a bit out of date. I think none of them are new enough to have used numpy 1.22.0 so I wouldn't expect to see a speed-up in those plots yet. |

| @@ -878,7 +878,7 @@ def test_aoi_and_aoi_projection(surface_tilt, surface_azimuth, solar_zenith, | |||

| aoi_proj_expected): | |||

| aoi = irradiance.aoi(surface_tilt, surface_azimuth, solar_zenith, | |||

| solar_azimuth) | |||

| assert_allclose(aoi, aoi_expected, atol=1e-6) | |||

| assert_allclose(aoi, aoi_expected, atol=1e-5) | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@cwhanse any objection to just bumping this tolerance to 1e-5?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No objection

|

Ok, proposed fix seems uncontroversial so I'll go ahead and merge. It's nice that such a sweeping change (numpy returning slightly different answers) ended up breaking only one test. Also I had another thought: the ASV runs are done in conda envs, which means we might not see the new numpy there for some time. No clue what the update cadence is on conda. |

[ ] Closes #xxxx[ ] Tests added[ ] Updates entries todocs/sphinx/source/api.rstfor API changes.[ ] Adds description and name entries in the appropriate "what's new" file indocs/sphinx/source/whatsnewfor all changes. Includes link to the GitHub Issue with:issue:`num`or this Pull Request with:pull:`num`. Includes contributor name and/or GitHub username (link with:ghuser:`user`).[ ] New code is fully documented. Includes numpydoc compliant docstrings, examples, and comments where necessary.A test recently started failing because a returned value was very slightly different from the expected value (example):

In here #717 (comment) I speculated that this was because of a change in numpy 1.22.0 enabling a set of SIMD extensions (AVX512), which can improve calculation speed but can also result in very slightly different calculation results. Here are the reasons I think it's the source of this test failure:

NPY_DISABLE_CPU_FEATURES="AVX512F,AVX512CD,AVX512VL,AVX512BW,AVX512DQ,AVX512_SKX"So I'm trying that same environment variable in our CI configuration to see if that resolves the CI failure as well. If so I think we can be pretty confident that this numpy change is responsible.

As a permanent fix, I think we should just loosen the tolerance on the failing test a bit.