Stephanie Fu*, Mark Hamilton*, Laura Brandt, Axel Feldmann, Zhoutong Zhang, William T. Freeman *Equal Contribution.

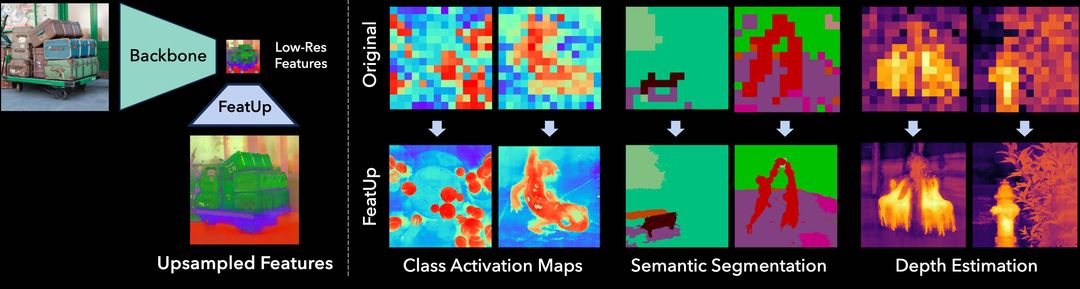

TL;DR: FeatUp improves the spatial resolution of any model's features by 16-32x without changing their semantics.


For those just looking to quickly use the FeatUp APIs, install via:

```shell
pip install git+https://github.com/mhamilton723/FeatUp
```

To install FeatUp for local development and to get access to the sample images, install using the following:

```shell
git clone https://github.com/mhamilton723/FeatUp.git
cd FeatUp
pip install -e .
```

To see examples of pretrained model usage, please see our Colab notebook. We currently supply the following pretrained versions of FeatUp's JBU upsampler:

| Model Name | Checkpoint | Checkpoint (No LayerNorm) | Torch Hub Repository | Torch Hub Name |
|---|---|---|---|---|
| DINO | Download | Download | mhamilton723/FeatUp | dino16 |
| DINO v2 | Download | Download | mhamilton723/FeatUp | dinov2 |
| CLIP | Download | Download | mhamilton723/FeatUp | clip |
| MaskCLIP | n/a | Download | mhamilton723/FeatUp | maskclip |
| ViT | Download | Download | mhamilton723/FeatUp | vit |
| ResNet50 | Download | Download | mhamilton723/FeatUp | resnet50 |
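FeatUp's JBU upsampler builds on joint bilateral upsampling: each high-resolution output is a weighted average of nearby low-resolution features, where the weights depend both on spatial distance and on how similar the guidance image looks at the two locations. To give a feel for that operation, here is a minimal pure-Python sketch of the classic, non-learned version. It is not FeatUp's learned implementation; the function name `joint_bilateral_upsample`, the grayscale guidance image in [0, 1], and the fixed Gaussian kernels controlled by `sigma_spatial` and `sigma_range` are all illustrative assumptions.

```python
import math
from typing import List

Grid = List[List[float]]            # H x W grayscale guidance image, values assumed in [0, 1]
FeatGrid = List[List[List[float]]]  # h x w x C low-resolution feature map


def joint_bilateral_upsample(
    feats: FeatGrid,
    guide: Grid,
    scale: int,
    radius: int = 1,
    sigma_spatial: float = 1.0,
    sigma_range: float = 0.1,
) -> FeatGrid:
    """Classic (hypothetical, non-learned) joint bilateral upsampling by `scale`.

    Each high-res pixel is a normalized weighted average of low-res feature
    vectors within `radius` low-res pixels; the weight multiplies a spatial
    Gaussian with a Gaussian on guidance-intensity differences.
    """
    h, w = len(feats), len(feats[0])
    channels = len(feats[0][0])
    big_h, big_w = h * scale, w * scale
    assert len(guide) == big_h and len(guide[0]) == big_w, "guide must be `scale` times larger"

    out: FeatGrid = []
    for y in range(big_h):
        row = []
        for x in range(big_w):
            # Centre of this high-res pixel, expressed in low-res pixel coordinates.
            ly = (y + 0.5) / scale - 0.5
            lx = (x + 0.5) / scale - 0.5
            cy, cx = int(round(ly)), int(round(lx))
            acc = [0.0] * channels
            total = 0.0
            for i in range(max(0, cy - radius), min(h, cy + radius + 1)):
                for j in range(max(0, cx - radius), min(w, cx + radius + 1)):
                    spatial = math.exp(-((ly - i) ** 2 + (lx - j) ** 2) / (2 * sigma_spatial ** 2))
                    # Guidance intensity at the centre of low-res pixel (i, j).
                    g_ref = guide[i * scale + scale // 2][j * scale + scale // 2]
                    diff = guide[y][x] - g_ref
                    weight = spatial * math.exp(-(diff ** 2) / (2 * sigma_range ** 2))
                    total += weight
                    for c in range(channels):
                        acc[c] += weight * feats[i][j][c]
            if total == 0.0:
                # Every range weight underflowed: fall back to the nearest low-res feature.
                row.append(list(feats[cy][cx]))
            else:
                row.append([a / total for a in acc])
        out.append(row)
    return out


low = [[[1.0], [0.0]], [[1.0], [0.0]]]            # 2x2 map, 1 channel: left = 1, right = 0
guide = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]  # 4x4 guide with a sharp vertical edge
up = joint_bilateral_upsample(low, guide, scale=2)
print([round(v[0], 3) for v in up[0]])  # → [1.0, 1.0, 0.0, 0.0]
```

The guidance image keeps the feature edge aligned with the image edge; plain bilinear interpolation of the same 2x2 map would instead produce intermediate values such as 0.75 and 0.25 next to the edge.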

For example, to load the FeatUp JBU upsampler for the DINO backbone without an additional LayerNorm on the spatial features:

```python
import torch
upsampler = torch.hub.load("mhamilton723/FeatUp", "dino16", use_norm=False)
```

To load upsamplers trained on backbones with additional LayerNorm operations, which make training and transfer learning a bit more stable:

```python
import torch
upsampler = torch.hub.load("mhamilton723/FeatUp", "dino16")
```

To train an implicit upsampler for a given image and backbone, first clone the repository and install it for local development. Then run:

```shell
cd featup
python train_implicit_upsampler.py
```

Parameters for this training operation can be found in the `implicit_upsampler` config file.
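As a rough illustration of the idea behind this training, as described in the FeatUp paper (high-resolution features, once downsampled from several slightly shifted views, should reproduce the backbone's low-resolution features), here is a toy 1-D analogue in pure Python. It is not `train_implicit_upsampler.py`: the circular shifts, the fixed average-pool downsampler, and plain gradient descent over a free signal (instead of an implicit network fit to an image) are simplifying assumptions, and `downsample` and `fit_high_res` are hypothetical names.

```python
from typing import Dict, List, Tuple


def downsample(signal: List[float], shift: int) -> List[float]:
    """Hypothetical downsampler: circularly shift, then average-pool pairs (factor 2)."""
    n = len(signal)
    shifted = [signal[(i + shift) % n] for i in range(n)]
    return [(shifted[2 * k] + shifted[2 * k + 1]) / 2 for k in range(n // 2)]


def fit_high_res(targets: Dict[int, List[float]], n: int,
                 lr: float = 1.0, steps: int = 500) -> Tuple[List[float], List[float]]:
    """Fit a length-n signal whose shifted, downsampled views match `targets`
    (a map from shift to observed low-res values) by plain gradient descent.

    Returns the fitted signal and the per-step mean-squared reconstruction loss.
    """
    high = [0.0] * n
    count = sum(len(t) for t in targets.values())
    history = []
    for _ in range(steps):
        grad = [0.0] * n
        loss = 0.0
        for shift, target in targets.items():
            pred = downsample(high, shift)
            for k, (p, t) in enumerate(zip(pred, target)):
                err = p - t
                loss += err * err / count
                # Each low-res value averages two high-res entries, so each of
                # them receives d(err^2 / count)/d(entry) = err / count.
                for idx in ((2 * k + shift) % n, (2 * k + 1 + shift) % n):
                    grad[idx] += err / count
        history.append(loss)
        high = [h - lr * g for h, g in zip(high, grad)]
    return high, history


truth = [0.0, 1.0, 3.0, 2.0, 5.0, 4.0, 1.0, 0.0]
one_view, _ = fit_high_res({0: downsample(truth, 0)}, n=len(truth))
two_views, history = fit_high_res({s: downsample(truth, s) for s in (0, 1)}, n=len(truth))


def sq_err(fit: List[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(fit, truth))


print(round(sq_err(one_view), 4), round(sq_err(two_views), 4))  # → 2.0 0.5
```

With a single view, the best the fit can do is repeat each pair's mean; adding a shifted view recovers much more of the detail, although in this toy setup one alternating pattern remains unobservable.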

To run our FeatUp demo (hosted on Hugging Face Spaces) locally, first install FeatUp for local development. Then run:

```shell
python gradio_app.py
```

Wait a few seconds for the demo to spin up, then navigate to http://localhost:7860/ to view the demo.
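If you script around the local demo, a small helper that polls the URL until it responds can replace waiting a fixed few seconds. This is a standard-library-only sketch; `wait_for_server` and its `timeout` and `interval` parameters are hypothetical and not part of FeatUp or Gradio, and the default URL assumes the demo serves on http://localhost:7860/ as stated above.

```python
import time
import urllib.request


def wait_for_server(url: str = "http://localhost:7860/", timeout: float = 60.0,
                    interval: float = 1.0) -> bool:
    """Poll `url` until it returns HTTP 200, giving up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, connection refused, and socket timeouts are all OSError
            # subclasses: the server is not ready yet, so keep polling.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, start `python gradio_app.py` in one terminal and call `wait_for_server()` from another script before opening the page or sending requests to it.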

Coming soon:

- Training your own FeatUp joint bilateral upsampler
- Simple API for Implicit FeatUp training

```bibtex
@inproceedings{
    fu2024featup,
    title={FeatUp: A Model-Agnostic Framework for Features at Any Resolution},
    author={Stephanie Fu and Mark Hamilton and Laura E. Brandt and Axel Feldmann and Zhoutong Zhang and William T. Freeman},
    booktitle={The Twelfth International Conference on Learning Representations},
    year={2024},
    url={https://openreview.net/forum?id=GkJiNn2QDF}
}
```

For feedback, questions, or press inquiries, please contact Stephanie Fu and Mark Hamilton.