
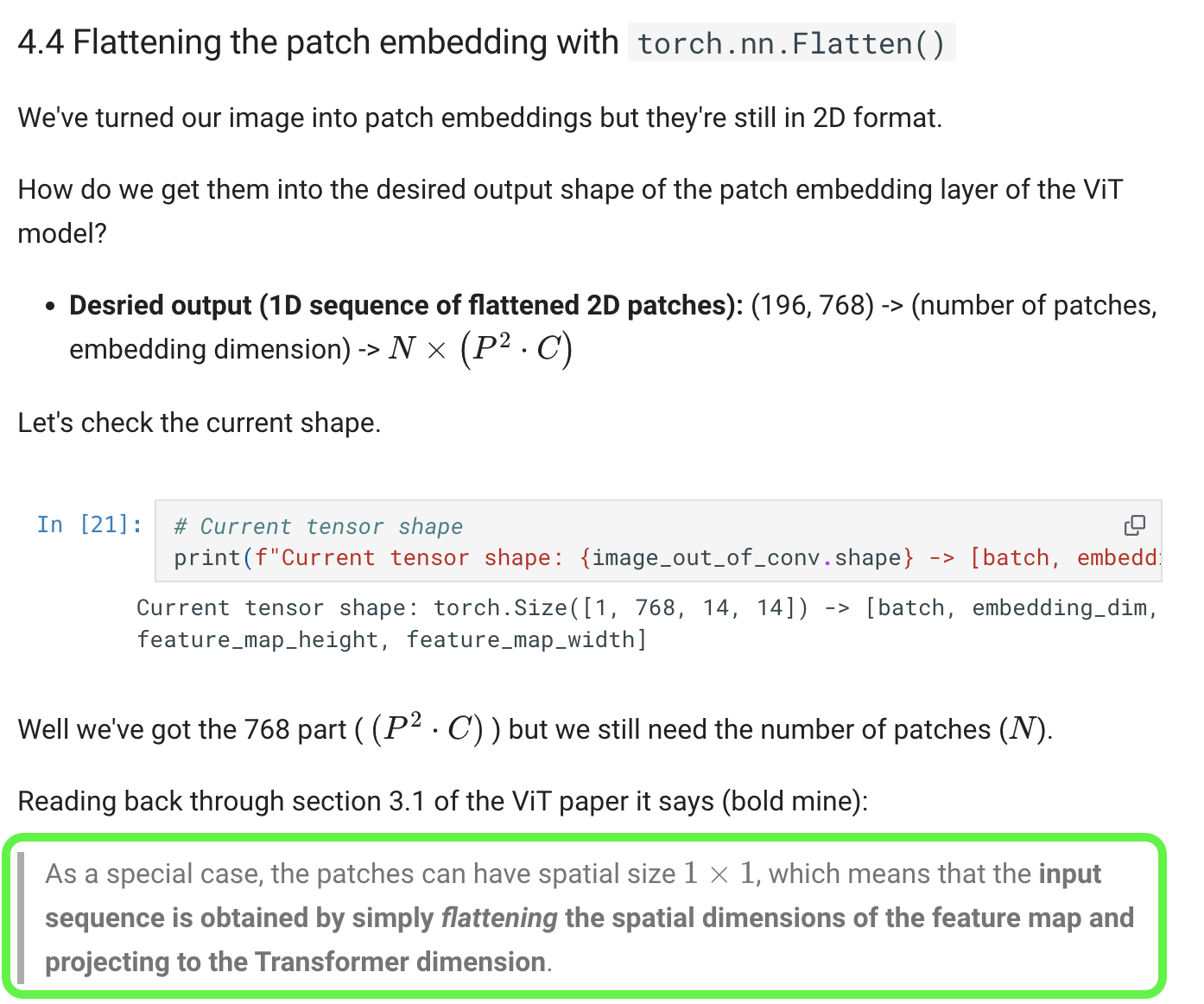

Hi @dzy212, Each patch is embedded into an embedding size of 768. The actual patches of the image start as 14x14 but are then flattened to 196 -> embedded to 768. My interpretation is the feature map they talk about is the features from the image patches (rather than the embedding itself). I'm getting this from green box below, which is taken from the paper: |
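As a rough illustration of those shapes, here is a minimal NumPy sketch, with NumPy standing in for PyTorch so it runs on its own. It assumes the ViT-Base/16 settings from the notebook (224x224 RGB image, patch size 16, embedding size 768). It also assumes that a `Conv2d` with `kernel_size` and `stride` both equal to 16 is equivalent to flattening each patch and applying a linear projection. The names `image`, `patches`, `weights` and `embedded` are illustrative, the random weights are placeholders for learned ones, and the bias term is omitted.

```python
import numpy as np

# Assumed ViT-Base/16 settings, as in the notebook
img_size, patch_size, channels, embedding_dim = 224, 16, 3, 768
grid = img_size // patch_size  # 14 patches per side -> a 14x14 grid of patches

rng = np.random.default_rng(0)
image = rng.standard_normal((channels, img_size, img_size))  # (C, H, W) layout, like a PyTorch image tensor

# Split H and W into (grid, patch_size): shape (C, 14, 16, 14, 16)
patches = image.reshape(channels, grid, patch_size, grid, patch_size)
# Move the grid axes first -> (14, 14, C, 16, 16), then flatten:
# the 14x14 grid becomes 196 patches, each patch becomes P*P*C = 768 values
patches = patches.transpose(1, 3, 0, 2, 4).reshape(grid * grid, channels * patch_size * patch_size)
print(patches.shape)  # (196, 768)

# Placeholder for learned weights: one 768-value filter per embedding dimension.
# Reshaped to (768, 3, 16, 16) this matches the layout of a Conv2d weight tensor.
weights = rng.standard_normal((embedding_dim, channels * patch_size * patch_size))

# Linear projection of every patch, plus a leading batch dimension -> (1, 196, 768)
embedded = (patches @ weights.T)[np.newaxis]
print(embedded.shape)  # (1, 196, 768)

# Cross-check one value against a "convolution" window with stride == kernel size
row, col, filt = 3, 5, 0
window = image[:, row * patch_size:(row + 1) * patch_size, col * patch_size:(col + 1) * patch_size]
kernel = weights[filt].reshape(channels, patch_size, patch_size)
assert np.isclose((window * kernel).sum(), embedded[0, row * grid + col, filt])
```

The final assertion checks that one embedding value equals a whole 16x16x3 patch multiplied element-wise by one filter and summed. That is what a convolution whose stride equals its kernel size computes at that position.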


Hi,

In section 4.4, "Flattening the patch embedding with torch.nn.Flatten()", the flattened feature map that was visualized was created by indexing on the embedding dimension:

`single_flattened_feature_map = image_out_of_conv_flattened_reshaped[:, :, 0]`

This results in the shape `(1, 196)`.

I thought that a single feature map should have had a shape of `(1, 768)`, as each patch is flattened to P x P x C, and we have 196 of them. Is this correct? Is what is being visualized a single pixel across all the patches?
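To see the two indexing options side by side, here is a minimal NumPy sketch, with NumPy standing in for PyTorch. The random array `x` is a hypothetical stand-in for `image_out_of_conv_flattened_reshaped`, assumed to have shape `(1, 196, 768)` (batch x patches x embedding dimension) as in the notebook.

```python
import numpy as np

# Hypothetical stand-in for image_out_of_conv_flattened_reshaped: (batch, num_patches, embedding_dim).
# Random values here; in the notebook they come from the Conv2d + Flatten + permute steps.
rng = np.random.default_rng(0)
x = rng.standard_normal((1, 196, 768))

# Index the embedding dimension: embedding dim 0 for every one of the 196 patches
single_flattened_feature_map = x[:, :, 0]
print(single_flattened_feature_map.shape)  # (1, 196)

# Put those 196 values back on the 14x14 patch grid
feature_map_2d = single_flattened_feature_map.reshape(1, 14, 14)

# Index the patch dimension instead: the full 768-value embedding of patch 0 (top-left)
single_patch_embedding = x[:, 0, :]
print(single_patch_embedding.shape)  # (1, 768)
```

Assuming the flatten and permute steps from the notebook, indexing the last axis keeps one embedding dimension for all 196 patches. Reshaped to 14x14, it lines up with the patch grid, i.e. one output channel of the patch-embedding convolution. Each of those values comes from a whole 16x16x3 patch, not a single pixel. Indexing the middle axis instead gives the full 768-value embedding of a single patch.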

Many thanks
