diff --git a/README.md b/README.md

index 93ed684a1..e7a4b97e7 100644

--- a/README.md

+++ b/README.md

@@ -4,15 +4,44 @@ CloudMapper

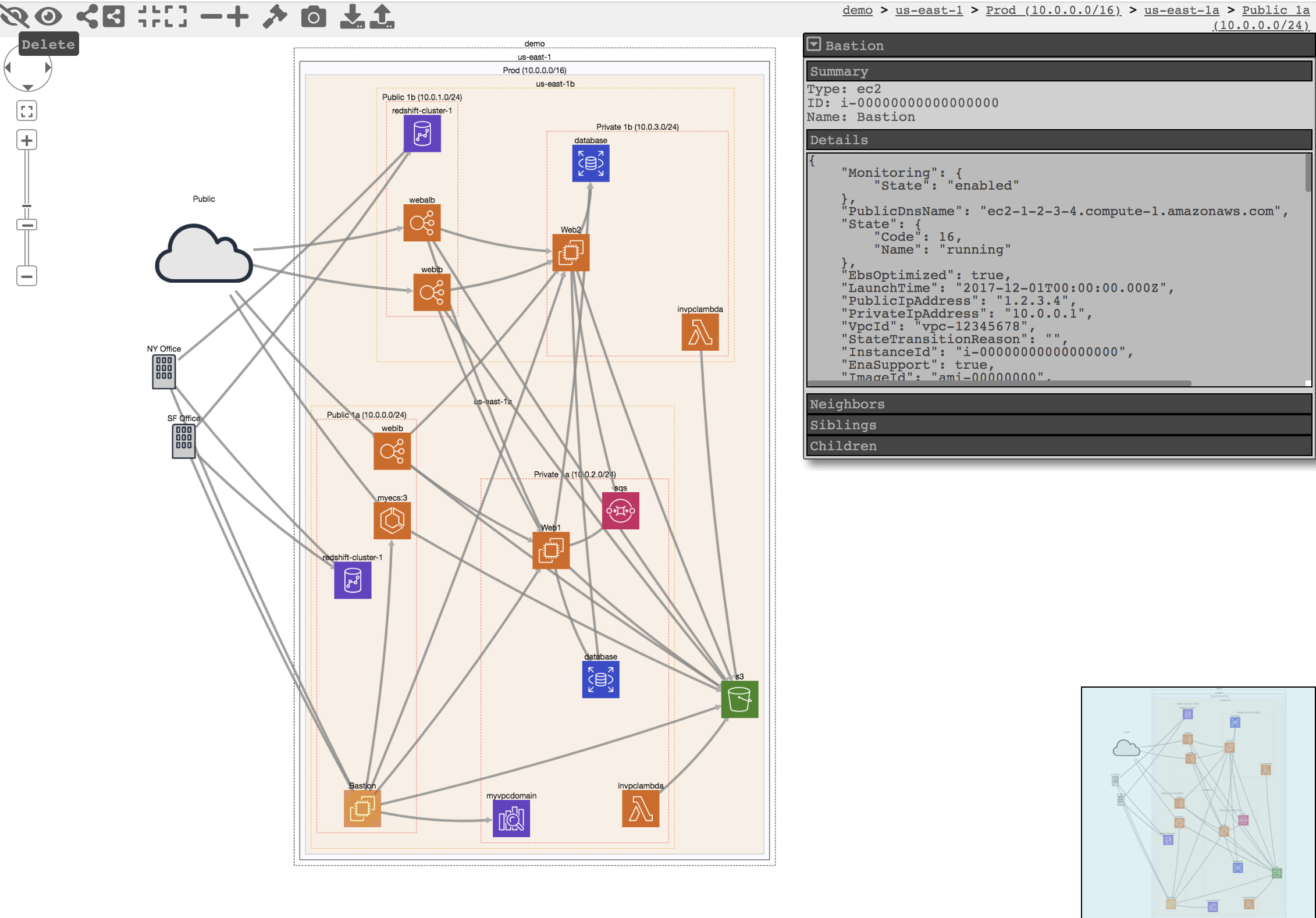

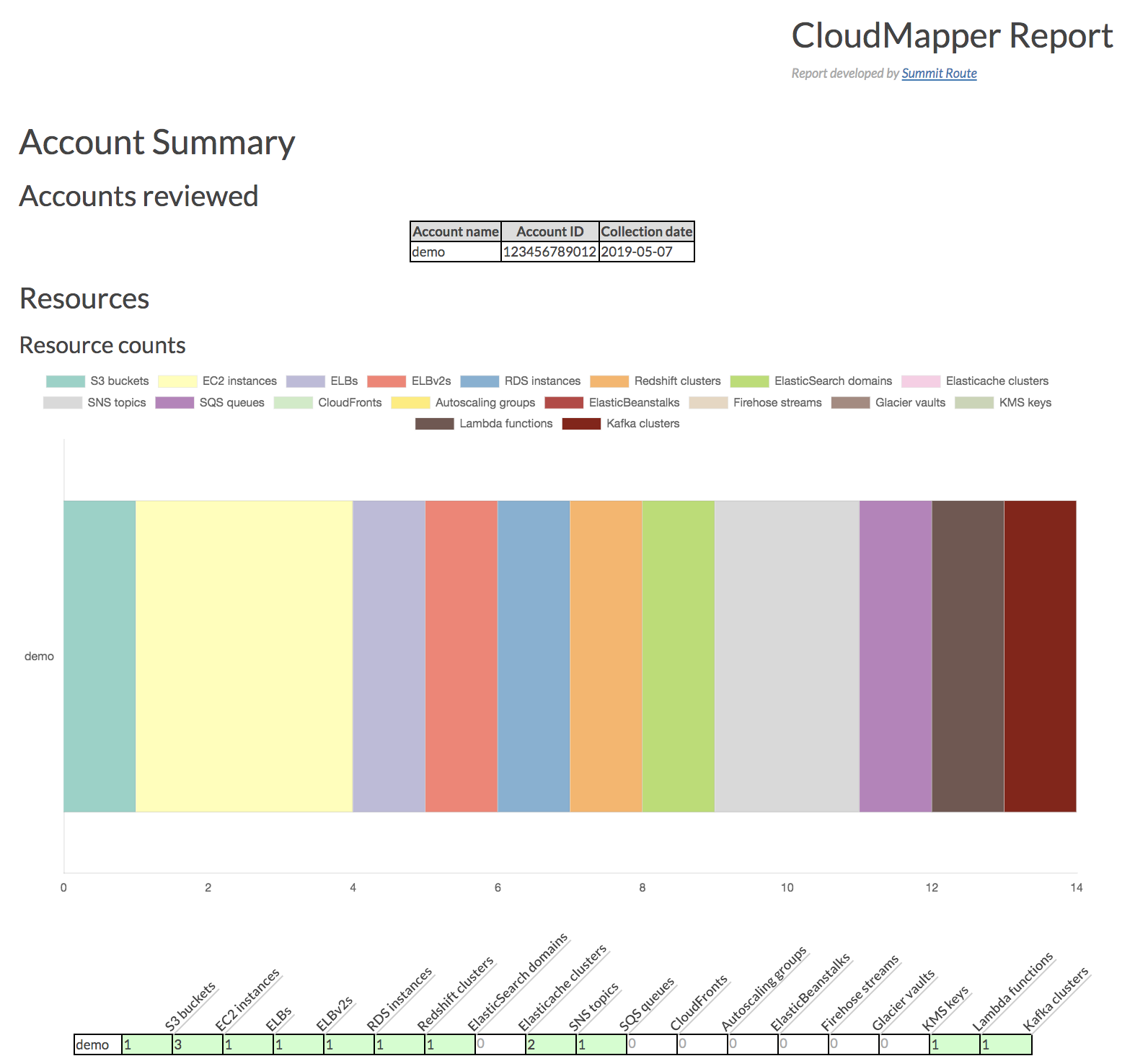

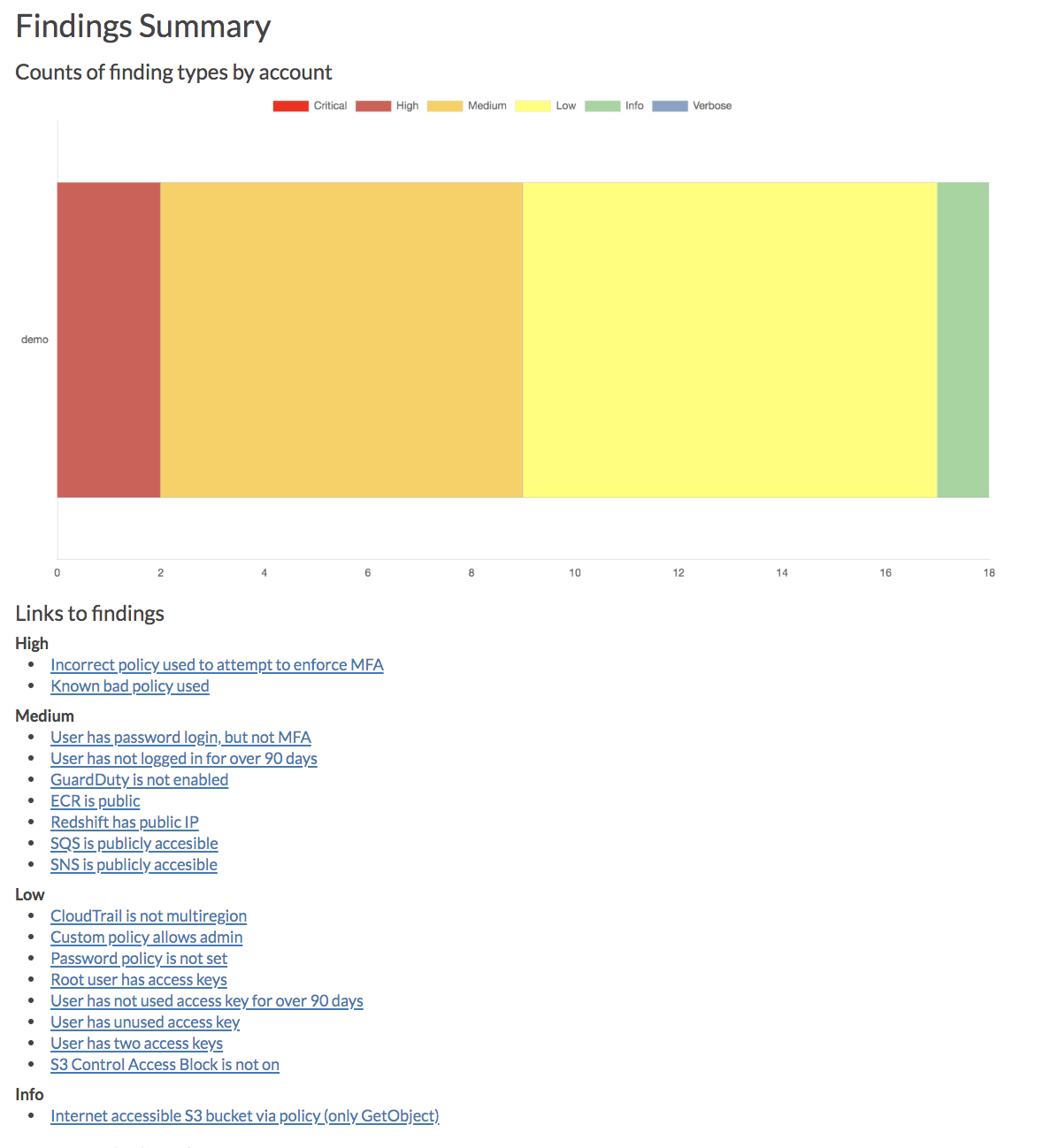

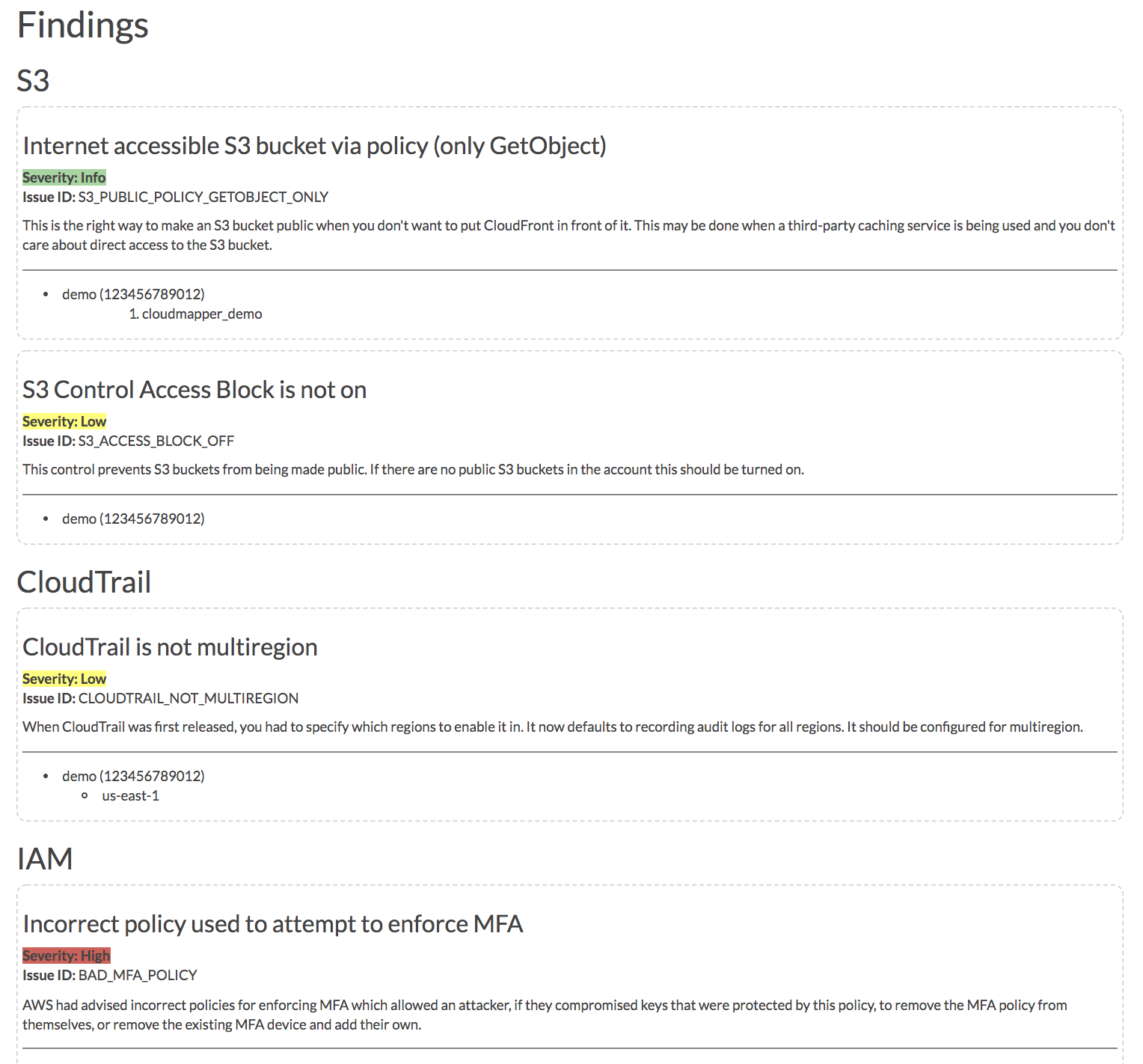

CloudMapper helps you analyze your Amazon Web Services (AWS) environments. The original purpose was to generate network diagrams and display them in your browser. It now contains much more functionality, including auditing for security issues.

-*Network mapping demo: https://duo-labs.github.io/cloudmapper/*

+- [Network mapping demo](https://duo-labs.github.io/cloudmapper/)

+- [Report demo](https://duo-labs.github.io/cloudmapper/account-data/report.html)

+- [Intro post](https://duo.com/blog/introducing-cloudmapper-an-aws-visualization-tool)

+- [Post to show usage in spotting misconfigurations](https://duo.com/blog/spotting-misconfigurations-with-cloudmapper)

-*Report demo: https://duo-labs.github.io/cloudmapper/account-data/report.html*

+# Commands

+

+- `api_endpoints`: List the URLs that can be called via API Gateway.

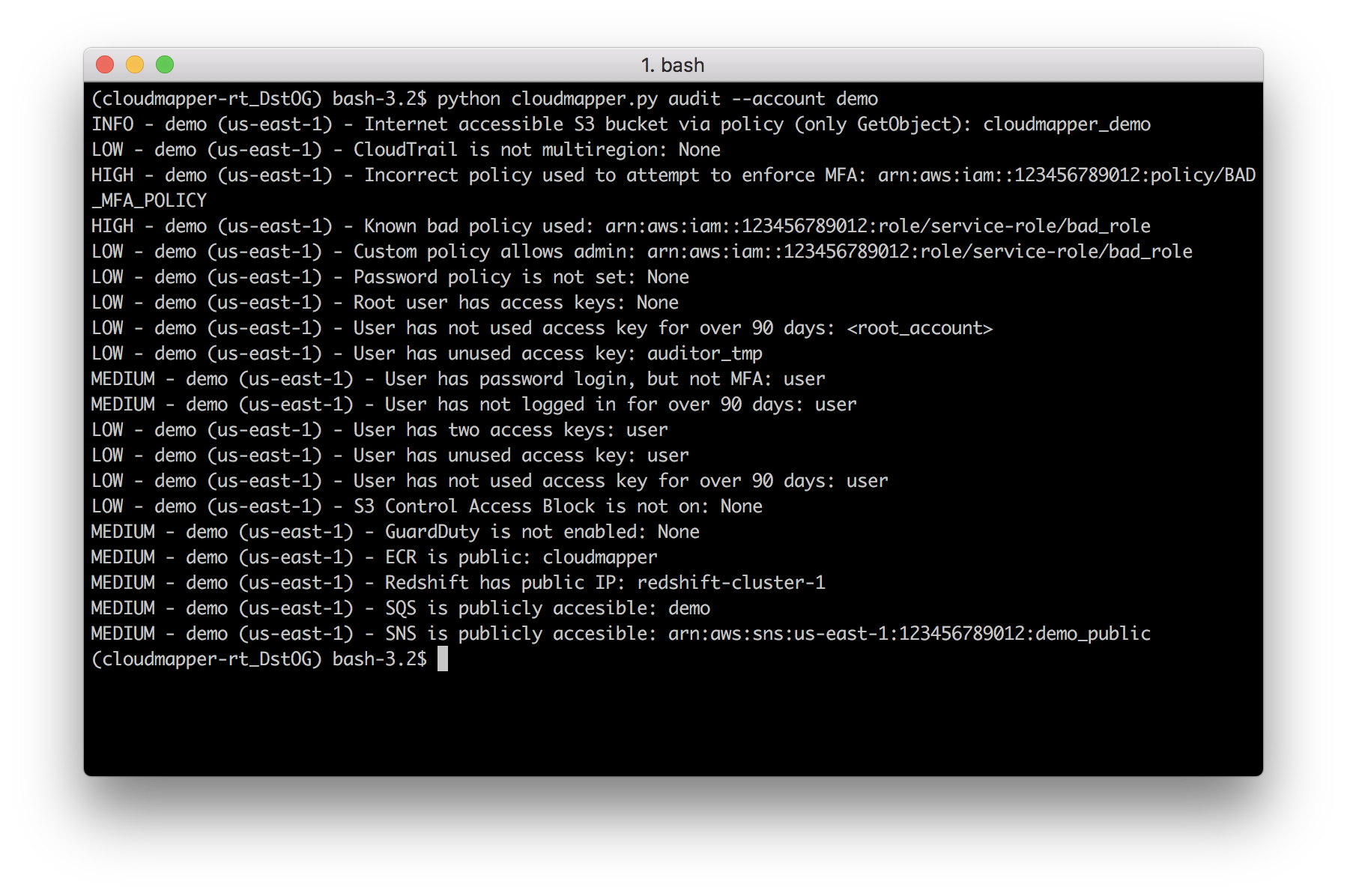

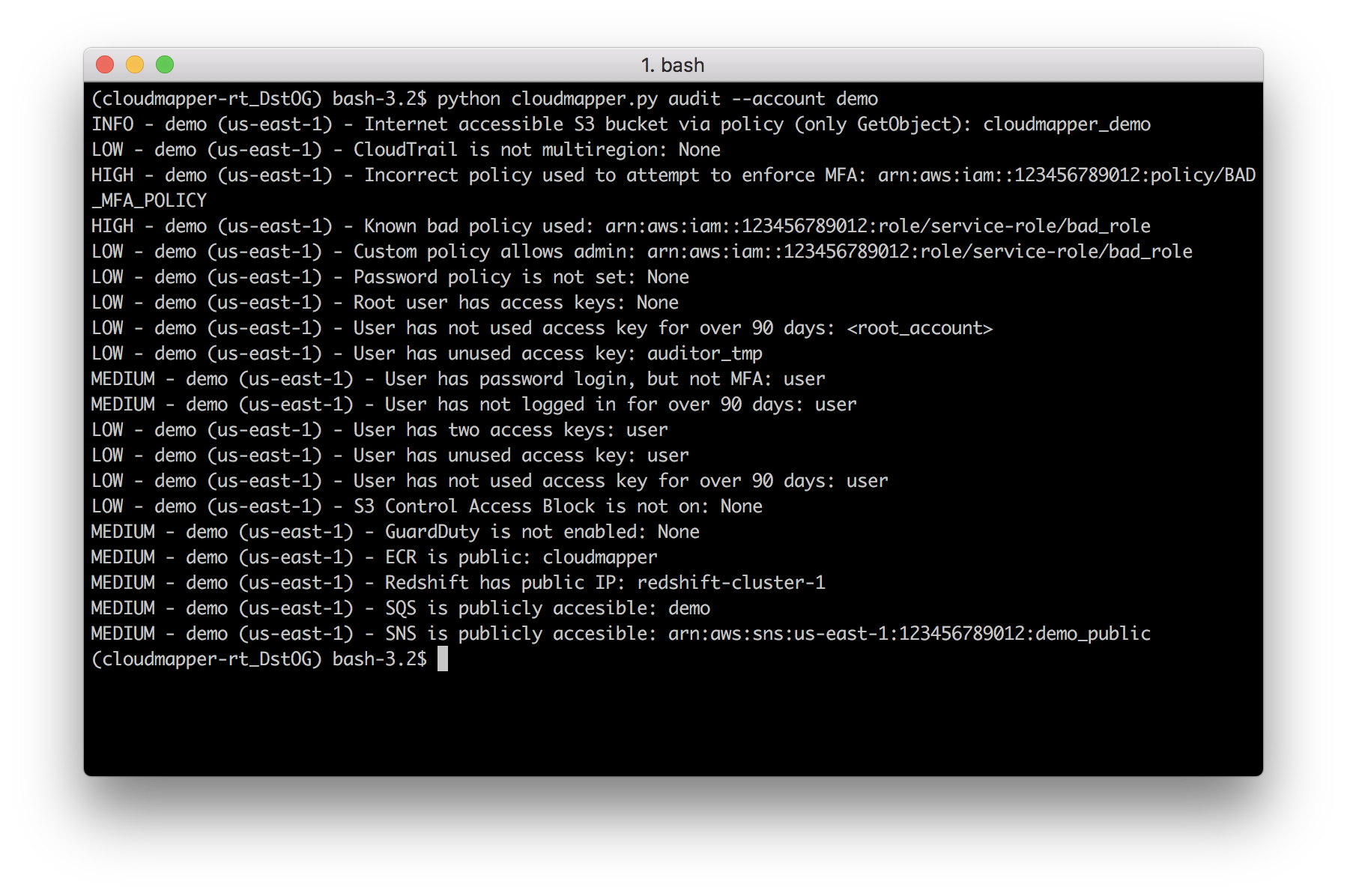

+- `audit`: Check for potential misconfigurations.

+- `collect`: Collect metadata about an account. More details [here](https://summitroute.com/blog/2018/06/05/cloudmapper_collect/).

+- `find_admins`: Look at IAM policies to identify admin users and roles and spot potential IAM issues. More details [here](https://summitroute.com/blog/2018/06/12/cloudmapper_find_admins/).

+- `find_unused`: Look for unused resources in the account. Finds unused Security Groups, Elastic IPs, network interfaces, and volumes.

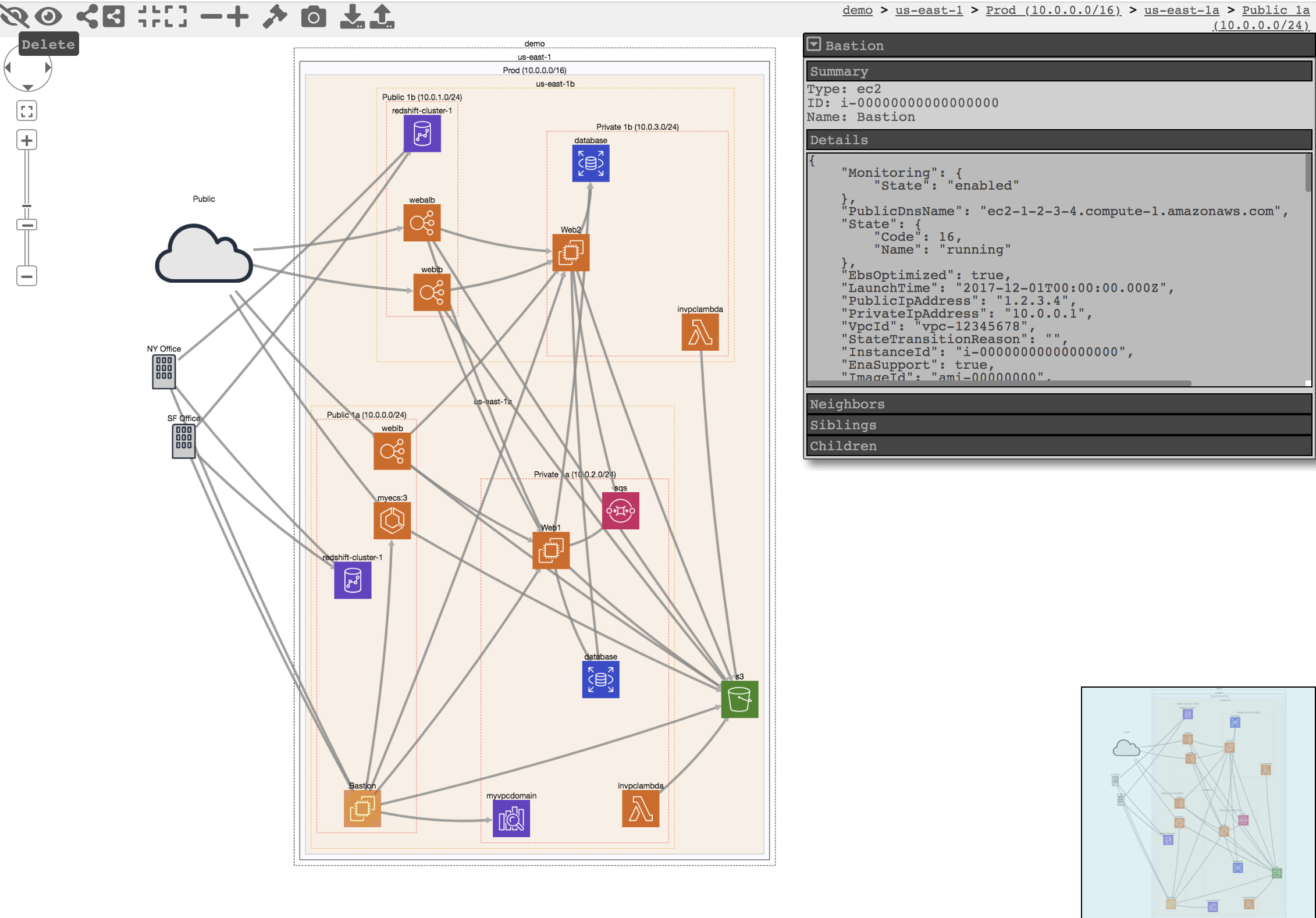

+- `prepare`/`webserver`: See [Network Visualizations](docs/network_visualizations.md)

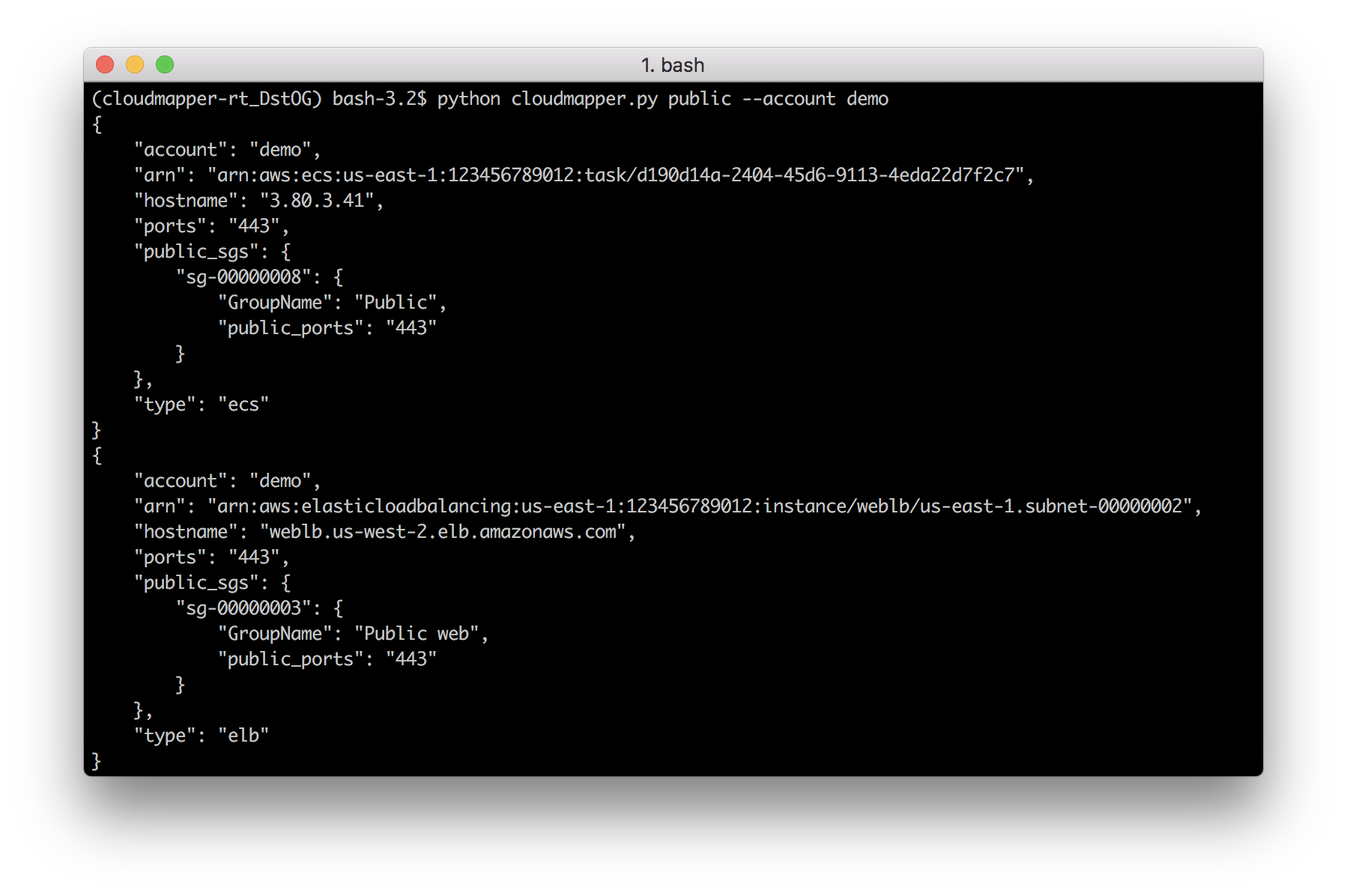

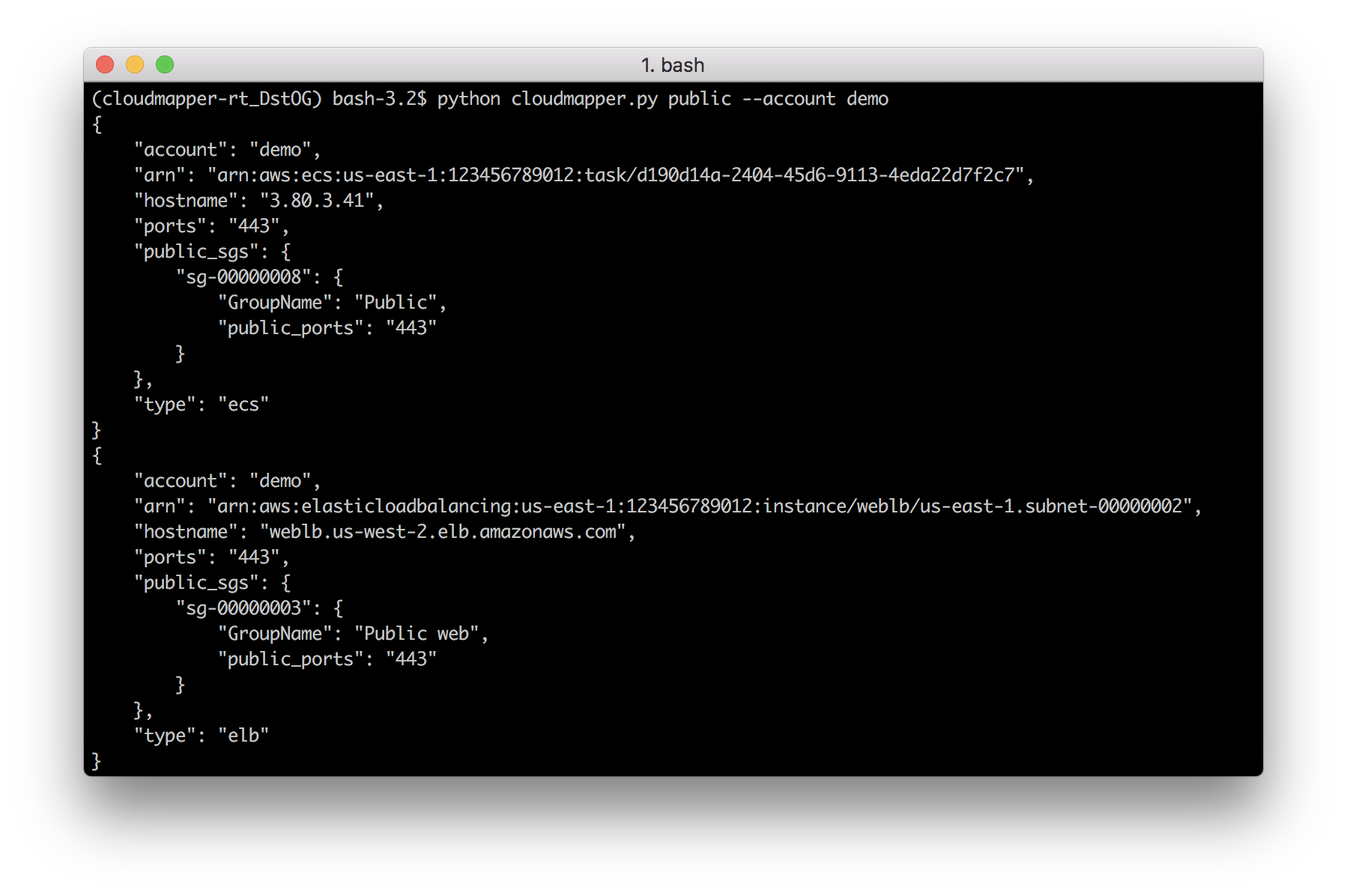

+- `public`: Find public hosts and port ranges. More details [here](https://summitroute.com/blog/2018/06/13/cloudmapper_public/).

+- `sg_ips`: Get geoip info on CIDRs trusted in Security Groups. More details [here](https://summitroute.com/blog/2018/06/12/cloudmapper_sg_ips/).

+- `stats`: Show counts of resources for accounts. More details [here](https://summitroute.com/blog/2018/06/06/cloudmapper_stats/).

+- `weboftrust`: Show Web Of Trust. More details [here](https://summitroute.com/blog/2018/06/13/cloudmapper_wot/).

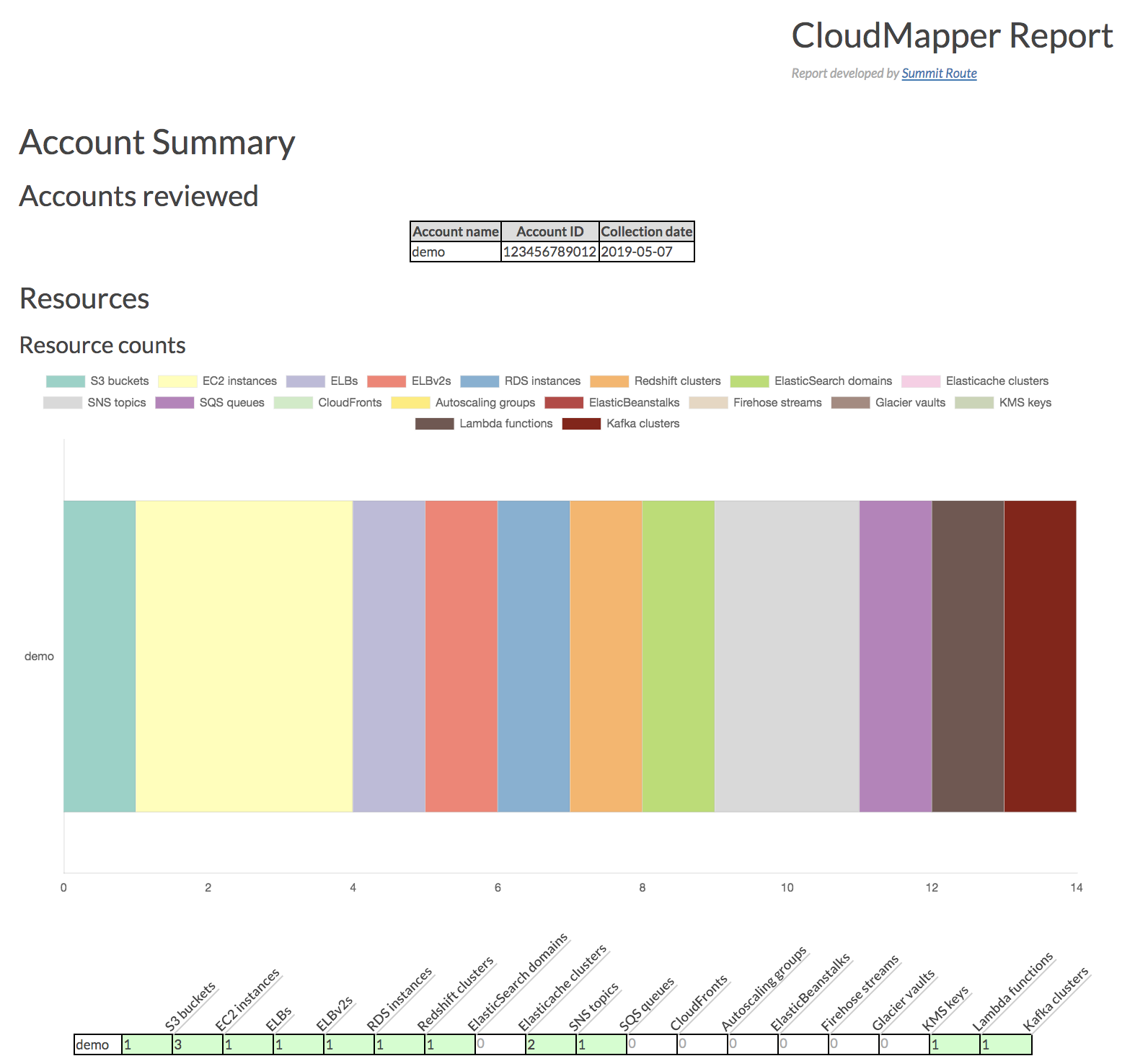

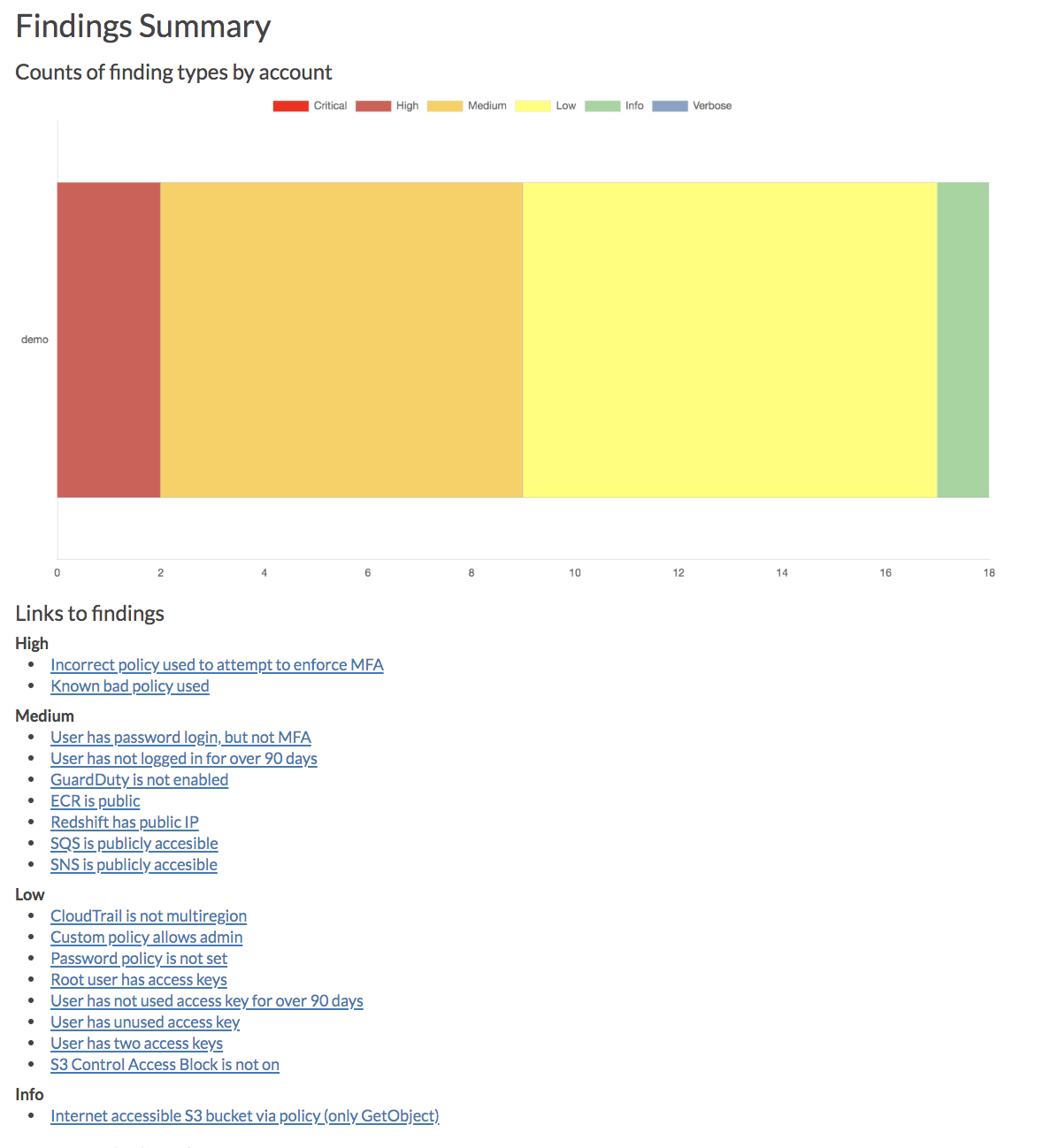

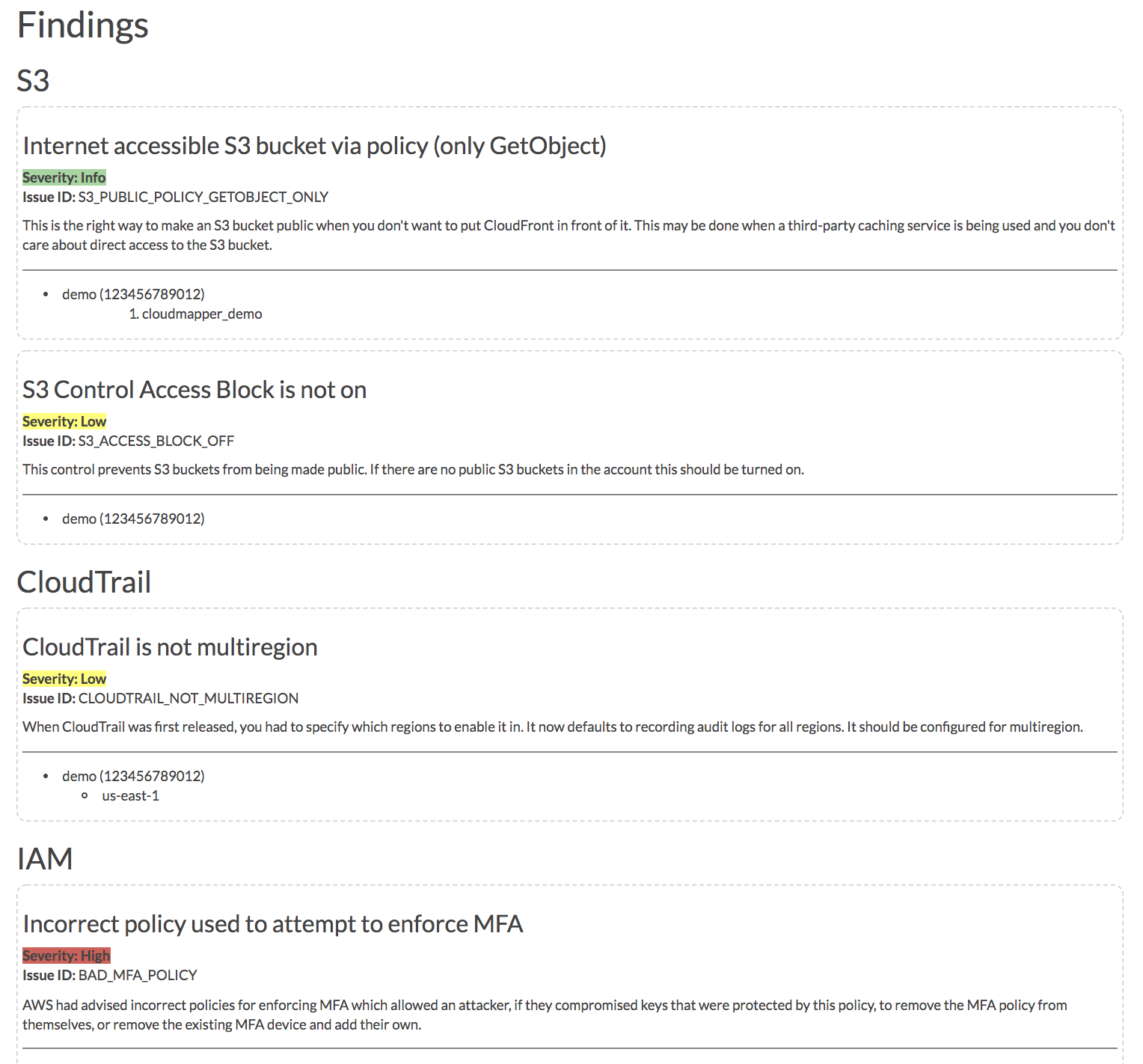

+- `report`: Generate HTML report. Includes summary of the accounts and audit findings. More details [here](https://summitroute.com/blog/2019/03/04/cloudmapper_report_generation/).

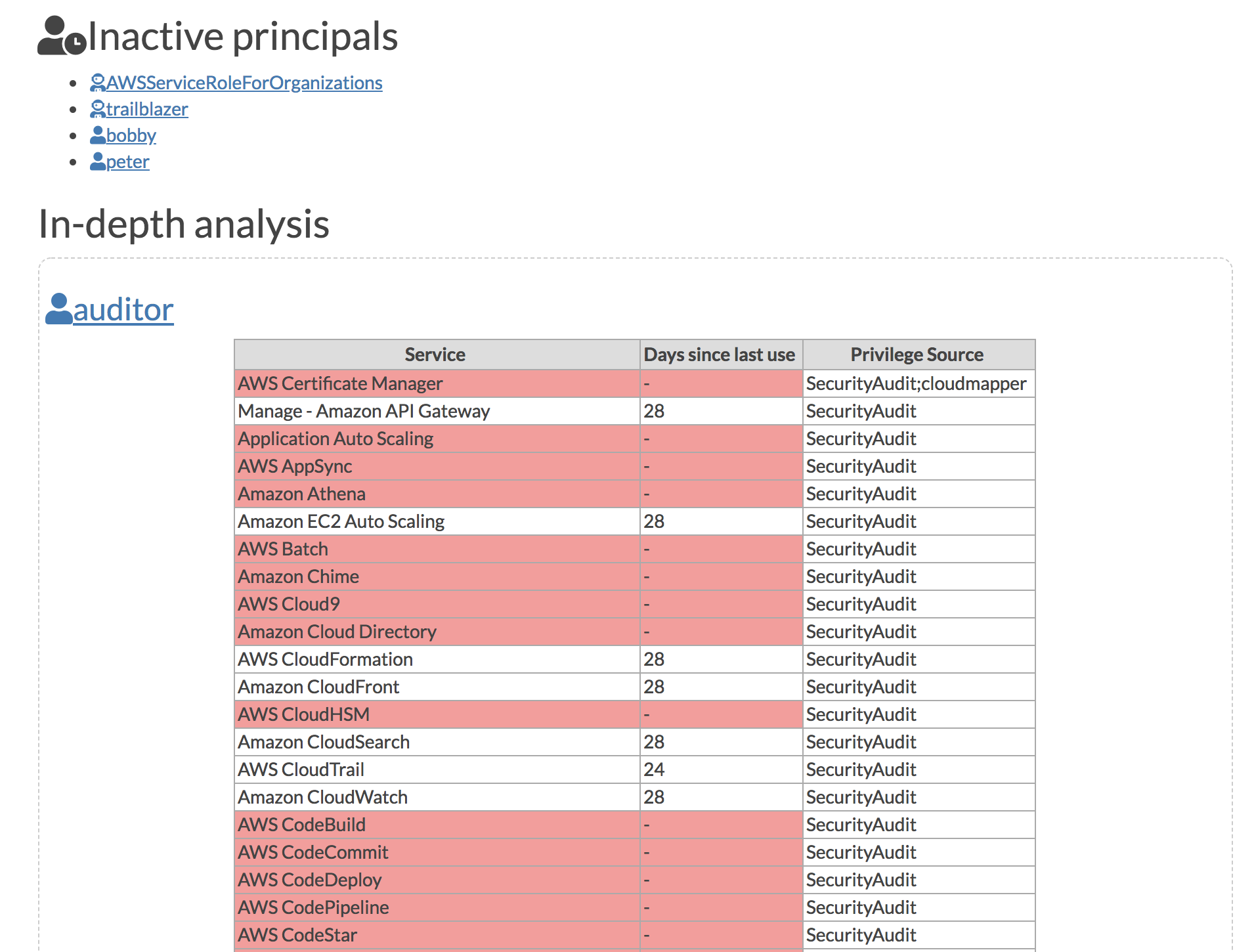

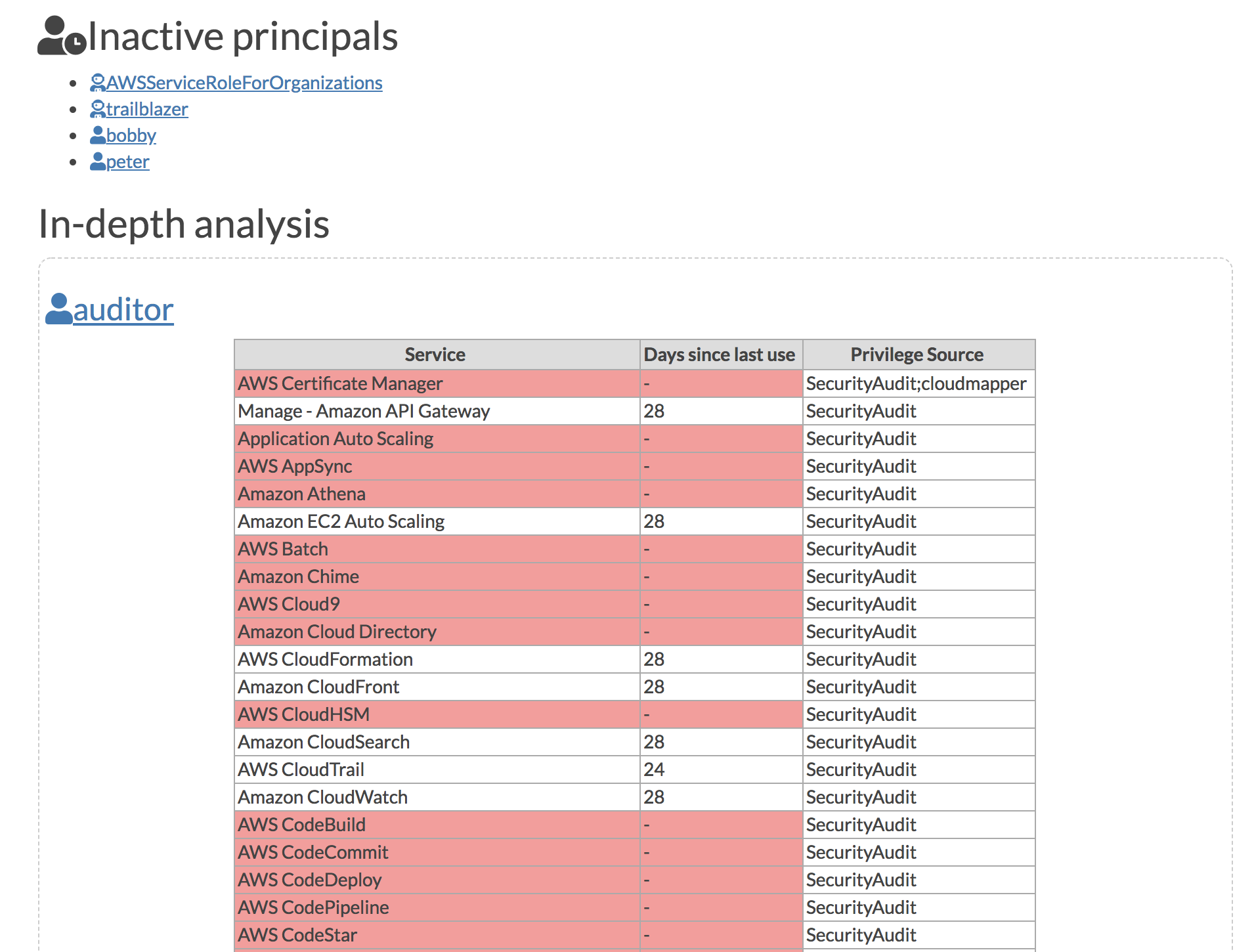

+- `iam_report`: Generate HTML report for the IAM information of an account. More details [here](https://summitroute.com/blog/2019/03/11/cloudmapper_iam_report_command/).

+

+

+If you want to add your own private commands, you can create a `private_commands` directory and add them there.

-*Intro post: https://duo.com/blog/introducing-cloudmapper-an-aws-visualization-tool*

+# Screenshots

-*Post to show usage in spotting misconfigurations: https://duo.com/blog/spotting-misconfigurations-with-cloudmapper*

+ +

-

## Installation

@@ -101,22 +130,10 @@ Collecting the data is done as follows:

python cloudmapper.py collect --account my_account

```

-# Commands

+### Alternatives

+For network diagrams, you may want to try https://github.com/lyft/cartography or https://github.com/anaynayak/aws-security-viz

-- `api_endpoints`: List the URLs that can be called via API Gateway.

-- `audit`: Check for potential misconfigurations.

-- `collect`: Collect metadata about an account. More details [here](https://summitroute.com/blog/2018/06/05/cloudmapper_collect/).

-- `find_admins`: Look at IAM policies to identify admin users and roles and spot potential IAM issues. More details [here](https://summitroute.com/blog/2018/06/12/cloudmapper_find_admins/).

-- `prepare`/`webserver`: See [Network Visualizations](docs/network_visualizations.md)

-- `public`: Find public hosts and port ranges. More details [here](https://summitroute.com/blog/2018/06/13/cloudmapper_public/).

-- `sg_ips`: Get geoip info on CIDRs trusted in Security Groups. More details [here](https://summitroute.com/blog/2018/06/12/cloudmapper_sg_ips/).

-- `stats`: Show counts of resources for accounts. More details [here](https://summitroute.com/blog/2018/06/06/cloudmapper_stats/).

-- `weboftrust`: Show Web Of Trust. More details [here](https://summitroute.com/blog/2018/06/13/cloudmapper_wot/).

-- `report`: Generate HTML report. Includes summary of the accounts and audit findings. More details [here](https://summitroute.com/blog/2019/03/04/cloudmapper_report_generation/).

-- `iam_report`: Generate HTML report for the IAM information of an account. More details [here](https://summitroute.com/blog/2019/03/11/cloudmapper_iam_report_command/).

-

-

-If you want to add your own private commands, you can create a `private_commands` directory and add them there.

+For auditing and other AWS security tools, see https://github.com/toniblyx/my-arsenal-of-aws-security-tools

Licenses

--------

diff --git a/account-data/demo/us-east-1/iam-get-account-authorization-details.json b/account-data/demo/us-east-1/iam-get-account-authorization-details.json

index 352a0b41b..6d56bce8f 100644

--- a/account-data/demo/us-east-1/iam-get-account-authorization-details.json

+++ b/account-data/demo/us-east-1/iam-get-account-authorization-details.json

@@ -117,7 +117,12 @@

],

"Version": "2012-10-17"

},

- "AttachedManagedPolicies": [],

+ "AttachedManagedPolicies": [

+ {

+ "PolicyName": "AmazonEC2RoleforSSM",

+ "PolicyArn": "arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM"

+ }

+ ],

"CreateDate": "2018-11-20T17:14:45+00:00",

"InstanceProfileList": [],

"Path": "/service-role/",

diff --git a/audit_config.yaml b/audit_config.yaml

index a5925d6e6..a7fa22c26 100644

--- a/audit_config.yaml

+++ b/audit_config.yaml

@@ -141,13 +141,20 @@ USER_HAS_NOT_USED_ACCESS_KEY_FOR_MAX_DAYS:

is_global: True

group: IAM

-BAD_MFA_POLICY:

+IAM_BAD_MFA_POLICY:

title: Incorrect policy used to attempt to enforce MFA

description: AWS had advised incorrect policies for enforcing MFA which allowed an attacker, if they compromised keys that were protected by this policy, to remove the MFA policy from themselves, or remove the existing MFA device and add their own.

severity: High

is_global: True

group: IAM

+IAM_KNOWN_BAD_POLICY:

+ title: Known bad policy used

+ description: AWS has provided flawed policies to customers. These are either deprecated or no longer advised.

+ severity: High

+ is_global: True

+ group: IAM

+

IAM_NOTACTION_ALLOW:

title: Use of NotAction in an Allow statement

description: Using NotAction in an Allow policy almost always results in unwanted actions being allowed and should be avoided.

@@ -155,6 +162,13 @@ IAM_NOTACTION_ALLOW:

is_global: True

group: IAM

+IAM_ROLE_ALLOWS_ASSUMPTION_FROM_ANYWHERE:

+ title: IAM role allows assumption from anywhere

+ description: The IAM role's trust policy allows any other account to assume it.

+ severity: High

+ is_global: True

+ group: IAM

+

IAM_MANAGED_POLICY_UNINTENTIONALLY_ALLOWING_ADMIN:

title: Managed policy is allowing admin

description: This finding is primarily for the deprecated AmazonElasticTranscoderFullAccess policy that was found to grant admin privileges.

@@ -265,6 +279,12 @@ EC2_CLASSIC:

severity: Info

group: EC2

+EC2_OLD:

+ title: Old EC2

+ description: EC2 instance is running and was launched more than 365 days ago.

+ severity: Info

+ group: EC2

+

LAMBDA_PUBLIC:

title: Lambda is internet accessible

description: Lambdas should not be publicly callable. Other resources, such as an API Gateway should be used to call the Lambda.
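Because `BAD_MFA_POLICY` is renamed to `IAM_BAD_MFA_POLICY` and three new finding IDs are introduced, the IDs emitted by the audit code, the entries in `audit_config.yaml`, and the expected list in `tests/unit/test_audit.py` should stay in step. Below is a minimal sketch of a consistency check. It assumes the YAML has already been loaded into a dict, for example with PyYAML's `yaml.safe_load`. `check_audit_config` and `REQUIRED_KEYS` are hypothetical names, and `is_global` is treated as optional because `EC2_OLD` omits it.

```python
# Keys every finding entry in audit_config.yaml carries in this diff; is_global is
# treated as optional because EC2_OLD omits it.
REQUIRED_KEYS = {"title", "description", "severity", "group"}


def check_audit_config(config, emitted_ids):
    """Return a list of problems for finding IDs that the audit code emits.

    config: dict as parsed from audit_config.yaml (assumed input shape).
    emitted_ids: IDs passed to Finding(...) in shared/audit.py and shared/iam_audit.py.
    """
    problems = []
    for issue_id in sorted(emitted_ids):
        entry = config.get(issue_id)
        if entry is None:
            problems.append("{}: missing from audit_config.yaml".format(issue_id))
            continue
        missing = REQUIRED_KEYS - set(entry)
        if missing:
            problems.append("{}: missing keys {}".format(issue_id, sorted(missing)))
    return problems


# The entries added in this change, written as the dict yaml.safe_load would return.
config = {
    "IAM_KNOWN_BAD_POLICY": {
        "title": "Known bad policy used",
        "description": "AWS has provided flawed policies to customers. "
        "These are either deprecated or no longer advised.",
        "severity": "High",
        "is_global": True,
        "group": "IAM",
    },
    "IAM_ROLE_ALLOWS_ASSUMPTION_FROM_ANYWHERE": {
        "title": "IAM role allows assumption from anywhere",
        "description": "The IAM role's trust policy allows any other account to assume it.",
        "severity": "High",
        "is_global": True,
        "group": "IAM",
    },
    "EC2_OLD": {
        "title": "Old EC2",
        "description": "EC2 instance is running and was launched more than 365 days ago.",
        "severity": "Info",
        "group": "EC2",
    },
}

# A stale ID such as the pre-rename BAD_MFA_POLICY is reported as missing.
print(check_audit_config(config, {"IAM_KNOWN_BAD_POLICY", "EC2_OLD", "BAD_MFA_POLICY"}))
```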

diff --git a/cloudmapper.py b/cloudmapper.py

index 09302f78c..810dd56b5 100755

--- a/cloudmapper.py

+++ b/cloudmapper.py

@@ -30,7 +30,7 @@

import pkgutil

import importlib

-__version__ = "2.5.7"

+__version__ = "2.6.0"

def show_help(commands):

diff --git a/commands/find_unused.py b/commands/find_unused.py

new file mode 100644

index 000000000..986907381

--- /dev/null

+++ b/commands/find_unused.py

@@ -0,0 +1,14 @@

+from __future__ import print_function

+import json

+

+from shared.common import parse_arguments

+from shared.find_unused import find_unused_resources

+

+

+__description__ = "Find unused resources in accounts"

+

+def run(arguments):

+ _, accounts, config = parse_arguments(arguments)

+ unused_resources = find_unused_resources(accounts)

+

+ print(json.dumps(unused_resources, indent=2, sort_keys=True))
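`run` prints the list returned by `find_unused_resources` in `shared/find_unused.py`. That list holds one entry per account. Each entry has a `regions` list, and each region has an `unused_resources` dict with keys drawn from `security_groups`, `volumes`, `elastic_ips`, and `network_interfaces`. Because of `add_if_exists`, a key appears only when that category had findings. Below is a sketch that tallies such output per account and category. `summarize_unused` and the sample IDs are hypothetical.

```python
import json
from collections import Counter


def summarize_unused(report):
    """Count unused resources per (account name, category) across all regions."""
    counts = Counter()
    for account in report:
        name = account["account"]["name"]
        for region in account["regions"]:
            # Categories with no findings are absent (add_if_exists skips empty lists).
            for category, items in region["unused_resources"].items():
                counts[(name, category)] += len(items)
    return counts


# Hypothetical output in the shape printed by the find_unused command.
sample_output = json.dumps([
    {
        "account": {"id": "123456789012", "name": "demo"},
        "regions": [
            {"region": "us-east-1", "unused_resources": {
                "elastic_ips": [{"id": "eipalloc-2", "ip": "2.3.4.5"}],
                "volumes": [{"id": "vol-2222"}],
            }},
            {"region": "us-west-2", "unused_resources": {
                "volumes": [{"id": "vol-3333"}],
            }},
        ],
    }
])

summary = summarize_unused(json.loads(sample_output))
for (account_name, category), count in sorted(summary.items()):
    print(account_name, category, count)
```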

diff --git a/commands/report.py b/commands/report.py

index 3307e6bd0..ae4732753 100644

--- a/commands/report.py

+++ b/commands/report.py

@@ -84,7 +84,7 @@ def report(accounts, config, args):

{

"name": account["name"],

"id": account["id"],

- "collection_date": get_collection_date(account),

+ "collection_date": get_collection_date(account)[:10],

}

)

diff --git a/docs/images/command_line_audit.png b/docs/images/command_line_audit.png

new file mode 100644

index 000000000..f9f970fde

Binary files /dev/null and b/docs/images/command_line_audit.png differ

diff --git a/docs/images/command_line_public.png b/docs/images/command_line_public.png

new file mode 100644

index 000000000..7b74b5f25

Binary files /dev/null and b/docs/images/command_line_public.png differ

diff --git a/docs/images/iam_report-inactive_and_detail.png b/docs/images/iam_report-inactive_and_detail.png

new file mode 100644

index 000000000..a5e838abb

Binary files /dev/null and b/docs/images/iam_report-inactive_and_detail.png differ

diff --git a/docs/images/report_findings.png b/docs/images/report_findings.png

new file mode 100644

index 000000000..e36a9cc15

Binary files /dev/null and b/docs/images/report_findings.png differ

diff --git a/docs/images/report_findings_summary.png b/docs/images/report_findings_summary.png

new file mode 100644

index 000000000..e5c2a0634

Binary files /dev/null and b/docs/images/report_findings_summary.png differ

diff --git a/docs/images/report_resources.png b/docs/images/report_resources.png

new file mode 100644

index 000000000..7af7d639c

Binary files /dev/null and b/docs/images/report_resources.png differ

diff --git a/shared/audit.py b/shared/audit.py

index ad1a17a81..3edd5bfc6 100644

--- a/shared/audit.py

+++ b/shared/audit.py

@@ -1,5 +1,4 @@

import json

-from datetime import datetime

import pyjq

import traceback

@@ -12,6 +11,8 @@

is_unblockable_cidr,

is_external_cidr,

Finding,

+ get_collection_date,

+ days_between

)

from shared.query import query_aws, get_parameter_file

from shared.nodes import Account, Region

@@ -236,14 +237,6 @@ def audit_root_user(findings, region):

def audit_users(findings, region):

MAX_DAYS_SINCE_LAST_USAGE = 90

- def days_between(s1, s2):

- """s1 and s2 are date strings, such as 2018-04-08T23:33:20+00:00 """

- time_format = "%Y-%m-%dT%H:%M:%S"

-

- d1 = datetime.strptime(s1.split("+")[0], time_format)

- d2 = datetime.strptime(s2.split("+")[0], time_format)

- return abs((d1 - d2).days)

-

# TODO: Convert all of this into a table

json_blob = query_aws(region.account, "iam-get-credential-report", region)

@@ -635,6 +628,23 @@ def audit_ec2(findings, region):

# Ignore EC2's that are off

continue

+ # Check for old instances

+ if instance.get("LaunchTime", "") != "":

+ MAX_RESOURCE_AGE_DAYS = 365

+ collection_date = get_collection_date(region.account)

+ launch_time = instance["LaunchTime"].split(".")[0]

+ age_in_days = days_between(launch_time, collection_date)

+ if age_in_days > MAX_RESOURCE_AGE_DAYS:

+ findings.add(Finding(

+ region,

+ "EC2_OLD",

+ instance["InstanceId"],

+ resource_details={

+ "Age in days": age_in_days

+ },

+ ))

+

+ # Check for EC2 Classic

if "vpc" not in instance.get("VpcId", ""):

findings.add(Finding(region, "EC2_CLASSIC", instance["InstanceId"]))

@@ -729,7 +739,7 @@ def audit_sg(findings, region):

region,

"SG_LARGE_CIDR",

cidr,

- resource_details={"size": ip.size, "security_groups": cidrs[cidr]},

+ resource_details={"size": ip.size, "security_groups": list(cidrs[cidr])},

)

)

@@ -932,7 +942,7 @@ def audit(accounts):

if region.name == "us-east-1":

audit_s3_buckets(findings, region)

audit_cloudtrail(findings, region)

- audit_iam(findings, region.account)

+ audit_iam(findings, region)

audit_password_policy(findings, region)

audit_root_user(findings, region)

audit_users(findings, region)
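The `SG_LARGE_CIDR` change wraps `cidrs[cidr]` in `list(...)`. The likely reason is that this per-CIDR collection of security group IDs is a set, and `json.dumps` raises `TypeError` on sets whenever findings are serialized to JSON. Below is a minimal sketch of both the failure and the fix. The `cidrs` contents and the `large_cidr_details` helper are hypothetical. The sketch uses `sorted` rather than `list` so that the output order is deterministic.

```python
import json

# Hypothetical stand-in for the dict built in audit_sg: CIDR -> set of group IDs.
cidrs = {"0.0.0.0/0": {"sg-00000008", "sg-00000002"}}


def large_cidr_details(cidr, size):
    """Build resource_details that json.dumps can serialize."""
    # sorted() turns the set into a list with a stable order.
    return {"size": size, "security_groups": sorted(cidrs[cidr])}


try:
    json.dumps({"security_groups": cidrs["0.0.0.0/0"]})
except TypeError as err:
    print("raw set fails:", err)

print(json.dumps(large_cidr_details("0.0.0.0/0", 2 ** 32)))
```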

diff --git a/shared/common.py b/shared/common.py

index 9b576b18f..07519fb70 100644

--- a/shared/common.py

+++ b/shared/common.py

@@ -309,9 +309,21 @@ def get_us_east_1(account):

raise Exception("us-east-1 not found")

+def iso_date(d):

+ """Convert an ISO 8601 date string such as 2018-04-08T23:33:20+00:00 into a datetime, dropping the UTC offset."""

+ time_format = "%Y-%m-%dT%H:%M:%S"

+ return datetime.datetime.strptime(d.split("+")[0], time_format)

+

+def days_between(s1, s2):

+ """Return the number of days between s1 and s2, two date strings in the format accepted by iso_date."""

+ d1 = iso_date(s1)

+ d2 = iso_date(s2)

+ return abs((d1 - d2).days)

def get_collection_date(account):

- account_struct = Account(None, account)

+ if type(account) is not Account:

+ account = Account(None, account)

+ account_struct = account

json_blob = query_aws(

account_struct, "iam-get-credential-report", get_us_east_1(account_struct)

)

@@ -319,8 +331,7 @@ def get_collection_date(account):

raise Exception("File iam-get-credential-report.json does not exist or is not well-formed. Likely cause is you did not run the collect command for this account.")

# GeneratedTime looks like "2019-01-30T15:43:24+00:00"

- # so extract the data part "2019-01-30"

- return json_blob["GeneratedTime"][:10]

+ return json_blob["GeneratedTime"]

def get_access_advisor_active_counts(account, max_age=90):
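`get_collection_date` now returns the full `GeneratedTime` timestamp, and `report.py` slices it to `[:10]` for display. The new `EC2_OLD` check compares an instance's `LaunchTime` with that timestamp using `days_between`. `iso_date` drops everything after `+` and parses the remainder with `%Y-%m-%dT%H:%M:%S`, so it does not accept fractional seconds. This is why the EC2 check first cuts `LaunchTime` at the `.`. The sketch below copies both helpers so that the arithmetic can be checked in isolation.

One subtlety: `timedelta.days` rounds toward negative infinity. As a result, `abs((d1 - d2).days)` can differ by one depending on argument order when the two times of day differ. `days_between_symmetric` is a hypothetical variant based on `total_seconds()` that gives the same answer in either order.

```python
from datetime import datetime

TIME_FORMAT = "%Y-%m-%dT%H:%M:%S"


def iso_date(d):
    """Parse '2018-04-08T23:33:20+00:00' into a naive datetime (offset dropped)."""
    return datetime.strptime(d.split("+")[0], TIME_FORMAT)


def days_between(s1, s2):
    """Same arithmetic as shared/common.py: timedelta.days of the difference."""
    return abs((iso_date(s1) - iso_date(s2)).days)


def days_between_symmetric(s1, s2):
    """Hypothetical variant: whole days of the absolute elapsed time."""
    elapsed = abs((iso_date(s1) - iso_date(s2)).total_seconds())
    return int(elapsed // 86400)


launched = "2018-04-08T23:33:20+00:00"   # example date from the iso_date docstring
collected = "2019-05-07T15:40:22+00:00"  # demo collection date from test_common.py

print(days_between(launched, collected))            # 394
print(days_between(collected, launched))            # 393
print(days_between_symmetric(launched, collected))  # 393
print(collected[:10])                               # 2019-05-07, as report.py slices it
```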

diff --git a/shared/find_unused.py b/shared/find_unused.py

new file mode 100644

index 000000000..5e208b389

--- /dev/null

+++ b/shared/find_unused.py

@@ -0,0 +1,119 @@

+import pyjq

+

+from shared.common import query_aws, get_regions

+from shared.nodes import Account, Region

+

+

+def find_unused_security_groups(region):

+ # Get the defined security groups, then find all the Security Groups associated with the

+ # ENIs. Then diff these to find the unused Security Groups.

+ used_sgs = set()

+

+ defined_sgs = query_aws(region.account, "ec2-describe-security-groups", region)

+

+ network_interfaces = query_aws(

+ region.account, "ec2-describe-network-interfaces", region

+ )

+

+ defined_sg_set = {}

+

+ for sg in pyjq.all(".SecurityGroups[]", defined_sgs):

+ defined_sg_set[sg["GroupId"]] = sg

+

+ for used_sg in pyjq.all(

+ ".NetworkInterfaces[].Groups[].GroupId", network_interfaces

+ ):

+ used_sgs.add(used_sg)

+

+ unused_sg_ids = set(defined_sg_set) - used_sgs

+ unused_sgs = []

+ for sg_id in unused_sg_ids:

+ unused_sgs.append(

+ {

+ "id": sg_id,

+ "name": defined_sg_set[sg_id]["GroupName"],

+ "description": defined_sg_set[sg_id].get("Description", ""),

+ }

+ )

+ return unused_sgs

+

+

+def find_unused_volumes(region):

+ unused_volumes = []

+ volumes = query_aws(region.account, "ec2-describe-volumes", region)

+ for volume in pyjq.all('.Volumes[]|select(.State=="available")', volumes):

+ unused_volumes.append({"id": volume["VolumeId"]})

+

+ return unused_volumes

+

+

+def find_unused_elastic_ips(region):

+ unused_ips = []

+ ips = query_aws(region.account, "ec2-describe-addresses", region)

+ for ip in pyjq.all(".Addresses[] | select(.AssociationId == null)", ips):

+ unused_ips.append({"id": ip["AllocationId"], "ip": ip["PublicIp"]})

+

+ return unused_ips

+

+

+def find_unused_network_interfaces(region):

+ unused_network_interfaces = []

+ network_interfaces = query_aws(

+ region.account, "ec2-describe-network-interfaces", region

+ )

+ for network_interface in pyjq.all(

+ '.NetworkInterfaces[]|select(.Status=="available")', network_interfaces

+ ):

+ unused_network_interfaces.append(

+ {"id": network_interface["NetworkInterfaceId"]}

+ )

+

+ return unused_network_interfaces

+

+

+def add_if_exists(dictionary, key, value):

+ if value:

+ dictionary[key] = value

+

+

+def find_unused_resources(accounts):

+ unused_resources = []

+ for account in accounts:

+ unused_resources_for_account = []

+ for region_json in get_regions(Account(None, account)):

+ region = Region(Account(None, account), region_json)

+

+ unused_resources_for_region = {}

+

+ add_if_exists(

+ unused_resources_for_region,

+ "security_groups",

+ find_unused_security_groups(region),

+ )

+ add_if_exists(

+ unused_resources_for_region, "volumes", find_unused_volumes(region)

+ )

+ add_if_exists(

+ unused_resources_for_region,

+ "elastic_ips",

+ find_unused_elastic_ips(region),

+ )

+ add_if_exists(

+ unused_resources_for_region,

+ "network_interfaces",

+ find_unused_network_interfaces(region),

+ )

+

+ unused_resources_for_account.append(

+ {

+ "region": region_json["RegionName"],

+ "unused_resources": unused_resources_for_region,

+ }

+ )

+ unused_resources.append(

+ {

+ "account": {"id": account["id"], "name": account["name"]},

+ "regions": unused_resources_for_account,

+ }

+ )

+ return unused_resources
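`find_unused_security_groups` treats a group as used only if some network interface lists it under `Groups`, and it reports every other defined group. Below is the same set difference in plain Python, without `pyjq` or `query_aws`, fed with data modeled on `tests/unit/test_find_unused.py`. `unused_security_group_ids` and the second group are hypothetical.

Only ENIs are consulted. A group referenced solely by another group's rules, or by a launch template with no running instances, is therefore also reported. The output is best read as a list of candidates to review.

```python
def unused_security_group_ids(describe_security_groups, describe_network_interfaces):
    """Return IDs of defined security groups not attached to any network interface."""
    defined = {sg["GroupId"] for sg in describe_security_groups.get("SecurityGroups", [])}
    used = {
        group["GroupId"]
        for eni in describe_network_interfaces.get("NetworkInterfaces", [])
        for group in eni.get("Groups", [])
    }
    # Sorted for stable output; the original iterates a set, so its order is arbitrary.
    return sorted(defined - used)


security_groups = {"SecurityGroups": [
    {"GroupId": "sg-00000008", "GroupName": "Public"},
    {"GroupId": "sg-00000002", "GroupName": "Internal"},  # hypothetical second group
]}
network_interfaces = {"NetworkInterfaces": [
    {"NetworkInterfaceId": "eni-00000001",
     "Groups": [{"GroupId": "sg-00000002", "GroupName": "Internal"}]},
]}

print(unused_security_group_ids(security_groups, network_interfaces))  # ['sg-00000008']
print(unused_security_group_ids(security_groups, {"NetworkInterfaces": []}))
```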

diff --git a/shared/iam_audit.py b/shared/iam_audit.py

index 99f2cbe84..583365ba4 100644

--- a/shared/iam_audit.py

+++ b/shared/iam_audit.py

@@ -12,6 +12,11 @@

from shared.query import query_aws, get_parameter_file

from shared.nodes import Account, Region

+KNOWN_BAD_POLICIES = {

+ "arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM": "Use AmazonSSMManagedInstanceCore instead and add privs as needed",

+ "arn:aws:iam::aws:policy/service-role/AmazonMachineLearningRoleforRedshiftDataSource": "Use AmazonMachineLearningRoleforRedshiftDataSourceV2 instead"

+

+}

def get_current_policy_doc(policy):

for doc in policy["PolicyVersionList"]:

@@ -44,7 +49,7 @@ def policy_action_count(policy_doc, location):

return actions_count

-def is_admin_policy(policy_doc, location):

+def is_admin_policy(policy_doc, location, findings, region):

# This attempts to identify policies that directly allow admin privs, or indirectly through possible

# privilege escalation (ex. iam:PutRolePolicy to add an admin policy to itself).

# It is a best effort. It will have false negatives, meaning it may not identify an admin policy

@@ -135,14 +140,14 @@ def check_for_bad_policy(findings, region, arn, policy_text):

== "AllowIndividualUserToViewAndManageTheirOwnMFA"

):

if "iam:DeactivateMFADevice" in make_list(statement.get("Action", [])):

- findings.add(Finding(region, "BAD_MFA_POLICY", arn, policy_text))

+ findings.add(Finding(region, "IAM_BAD_MFA_POLICY", arn, policy_text))

return

elif (

statement.get("Sid", "")

== "BlockAnyAccessOtherThanAboveUnlessSignedInWithMFA"

):

if "iam:*" in make_list(statement.get("NotAction", [])):

- findings.add(Finding(region, "BAD_MFA_POLICY", arn, policy_text))

+ findings.add(Finding(region, "IAM_BAD_MFA_POLICY", arn, policy_text))

return

@@ -180,7 +185,7 @@ def find_admins_in_account(region, findings):

policy_action_counts[policy["Arn"]] = policy_action_count(policy_doc, location)

- if is_admin_policy(policy_doc, location):

+ if is_admin_policy(policy_doc, location, findings, region):

admin_policies.append(policy["Arn"])

if (

"arn:aws:iam::aws:policy/AdministratorAccess" in policy["Arn"]

@@ -220,9 +225,22 @@ def find_admins_in_account(region, findings):

reasons.append(

"Attached managed policy: {}".format(policy["PolicyArn"])

)

+ if policy["PolicyArn"] in KNOWN_BAD_POLICIES:

+ findings.add(

+ Finding(

+ region,

+ "IAM_KNOWN_BAD_POLICY",

+ role["Arn"],

+ resource_details={

+ "comment": KNOWN_BAD_POLICIES[policy["PolicyArn"]],

+ "policy": policy["PolicyArn"],

+ },

+ )

+ )

+

for policy in role["RolePolicyList"]:

policy_doc = policy["PolicyDocument"]

- if is_admin_policy(policy_doc, location):

+ if is_admin_policy(policy_doc, location, findings, region):

reasons.append("Custom policy: {}".format(policy["PolicyName"]))

findings.add(

Finding(

@@ -236,23 +254,39 @@ def find_admins_in_account(region, findings):

)

)

- if len(reasons) != 0:

- for stmt in role["AssumeRolePolicyDocument"]["Statement"]:

- if stmt["Effect"] != "Allow":

- findings.add(

- Finding(

- region,

- "IAM_UNEXPECTED_FORMAT",

- role["Arn"],

- resource_details={

- "comment": "Unexpected Effect in AssumeRolePolicyDocument",

- "statement": stmt,

- },

- )

+ # Check if role is accessible from anywhere

+ policy = Policy(role["AssumeRolePolicyDocument"])

+ if policy.is_internet_accessible():

+ findings.add(

+ Finding(

+ region,

+ "IAM_ROLE_ALLOWS_ASSUMPTION_FROM_ANYWHERE",

+ role["Arn"],

+ resource_details={

+ "statement": role["AssumeRolePolicyDocument"],

+ },

+ )

+ )

+

+ # Check if anything looks malformed

+ for stmt in role["AssumeRolePolicyDocument"]["Statement"]:

+ if stmt["Effect"] != "Allow":

+ findings.add(

+ Finding(

+ region,

+ "IAM_UNEXPECTED_FORMAT",

+ role["Arn"],

+ resource_details={

+ "comment": "Unexpected Effect in AssumeRolePolicyDocument",

+ "statement": stmt,

+ },

)

- continue

+ )

+ continue

- if stmt["Action"] == "sts:AssumeRole":

+ if stmt["Action"] == "sts:AssumeRole":

+ if len(reasons) != 0:

+ # Admin assumption should be done by users or roles, not by AWS services

if "AWS" not in stmt["Principal"] or len(stmt["Principal"]) != 1:

findings.add(

Finding(

@@ -260,27 +294,28 @@ def find_admins_in_account(region, findings):

"IAM_UNEXPECTED_FORMAT",

role["Arn"],

resource_details={

- "comment": "Unexpected Principal in AssumeRolePolicyDocument",

+ "comment": "Unexpected Principal in AssumeRolePolicyDocument for an admin",

"Principal": stmt["Principal"],

},

)

)

- elif stmt["Action"] == "sts:AssumeRoleWithSAML":

- continue

- else:

- findings.add(

- Finding(

- region,

- "IAM_UNEXPECTED_FORMAT",

- role["Arn"],

- resource_details={

- "comment": "Unexpected Action in AssumeRolePolicyDocument",

- "statement": [stmt],

- },

- )

+ elif stmt["Action"] == "sts:AssumeRoleWithSAML":

+ continue

+ else:

+ findings.add(

+ Finding(

+ region,

+ "IAM_UNEXPECTED_FORMAT",

+ role["Arn"],

+ resource_details={

+ "comment": "Unexpected Action in AssumeRolePolicyDocument",

+ "statement": [stmt],

+ },

)

+ )

- record_admin(admins, account.name, "role", role["RoleName"])

+ if len(reasons) != 0:

+ record_admin(admins, account.name, "role", role["RoleName"])

# TODO Should check users or other roles allowed to assume this role to show they are admins

location.pop("role", None)

@@ -301,9 +336,21 @@ def find_admins_in_account(region, findings):

None,

)

)

+ if policy["PolicyArn"] in KNOWN_BAD_POLICIES:

+ findings.add(

+ Finding(

+ region,

+ "IAM_KNOWN_BAD_POLICY",

+ group["Arn"],

+ resource_details={

+ "comment": KNOWN_BAD_POLICIES[policy["PolicyArn"]],

+ "policy": policy["PolicyArn"],

+ },

+ )

+ )

for policy in group["GroupPolicyList"]:

policy_doc = policy["PolicyDocument"]

- if is_admin_policy(policy_doc, location):

+ if is_admin_policy(policy_doc, location, findings, region):

is_admin = True

findings.add(

Finding(

@@ -331,9 +378,21 @@ def find_admins_in_account(region, findings):

reasons.append(

"Attached managed policy: {}".format(policy["PolicyArn"])

)

+ if policy["PolicyArn"] in KNOWN_BAD_POLICIES:

+ findings.add(

+ Finding(

+ region,

+ "IAM_KNOWN_BAD_POLICY",

+ user["Arn"],

+ resource_details={

+ "comment": KNOWN_BAD_POLICIES[policy["PolicyArn"]],

+ "policy": policy["PolicyArn"],

+ },

+ )

+ )

for policy in user.get("UserPolicyList", []):

policy_doc = policy["PolicyDocument"]

- if is_admin_policy(policy_doc, location):

+ if is_admin_policy(policy_doc, location, findings, region):

reasons.append("Custom user policy: {}".format(policy["PolicyName"]))

findings.add(

Finding(
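`KNOWN_BAD_POLICIES` maps a deprecated AWS managed policy ARN to a hint about its replacement. Each role, group, and user has its `AttachedManagedPolicies` checked against this map. The demo role now attaches `AmazonEC2RoleforSSM`, so `IAM_KNOWN_BAD_POLICY` is expected in `test_audit.py`.

Below is a standalone sketch of that lookup, plus a deliberately simplified stand-in for the trust-policy check. The diff relies on `Policy(...).is_internet_accessible()`, whose exact rules are not shown here. `trust_policy_allows_anyone` therefore only flags an unconditioned `Allow` whose principal is `"*"` or `{"AWS": "*"}`. Both function names and the role ARN are hypothetical.

```python
# Copied from KNOWN_BAD_POLICIES in shared/iam_audit.py.
KNOWN_BAD_POLICIES = {
    "arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM":
        "Use AmazonSSMManagedInstanceCore instead and add privs as needed",
    "arn:aws:iam::aws:policy/service-role/AmazonMachineLearningRoleforRedshiftDataSource":
        "Use AmazonMachineLearningRoleforRedshiftDataSourceV2 instead",
}


def known_bad_policy_findings(principal):
    """Return (principal ARN, policy ARN, comment) for each known-bad attached policy.

    principal: a role, group, or user entry from iam-get-account-authorization-details.
    """
    findings = []
    for policy in principal.get("AttachedManagedPolicies", []):
        arn = policy["PolicyArn"]
        if arn in KNOWN_BAD_POLICIES:
            findings.append((principal["Arn"], arn, KNOWN_BAD_POLICIES[arn]))
    return findings


def trust_policy_allows_anyone(trust_policy):
    """Simplified check: an unconditioned Allow whose principal is "*" or {"AWS": "*"}.

    Hypothetical approximation only; not the logic of Policy.is_internet_accessible().
    """
    statements = trust_policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    for stmt in statements:
        if stmt.get("Effect") != "Allow" or "Condition" in stmt:
            continue
        principal = stmt.get("Principal")
        if principal == "*":
            return True
        if isinstance(principal, dict):
            aws = principal.get("AWS", [])
            if "*" in (aws if isinstance(aws, list) else [aws]):
                return True
    return False


# Hypothetical role shaped like the demo account's entry after this change.
role = {
    "Arn": "arn:aws:iam::123456789012:role/service-role/example-role",
    "AttachedManagedPolicies": [
        {
            "PolicyName": "AmazonEC2RoleforSSM",
            "PolicyArn": "arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM",
        }
    ],
    "AssumeRolePolicyDocument": {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Principal": {"Service": "ec2.amazonaws.com"},
             "Action": "sts:AssumeRole"}
        ],
    },
}

print(known_bad_policy_findings(role))
print(trust_policy_allows_anyone(role["AssumeRolePolicyDocument"]))  # False
```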

diff --git a/tests/unit/test_audit.py b/tests/unit/test_audit.py

index cecba9e17..6390ee114 100644

--- a/tests/unit/test_audit.py

+++ b/tests/unit/test_audit.py

@@ -39,8 +39,11 @@ def test_audit(self):

"ECR_PUBLIC",

"SQS_PUBLIC",

"SNS_PUBLIC",

- "BAD_MFA_POLICY",

+ "IAM_BAD_MFA_POLICY",

"IAM_CUSTOM_POLICY_ALLOWS_ADMIN",

+ "IAM_KNOWN_BAD_POLICY",

+ "IAM_ROLE_ALLOWS_ASSUMPTION_FROM_ANYWHERE",

+ "EC2_OLD"

]

),

)

diff --git a/tests/unit/test_common.py b/tests/unit/test_common.py

index f4e37cd46..bf44ec733 100644

--- a/tests/unit/test_common.py

+++ b/tests/unit/test_common.py

@@ -39,7 +39,7 @@ def test_get_account_stats(self):

def test_get_collection_date(self):

account = get_account("demo")

- assert_equal("2019-05-07", get_collection_date(account))

+ assert_equal("2019-05-07T15:40:22+00:00", get_collection_date(account))

# def test_get_access_advisor_active_counts(self):

# account = get_account("demo")

diff --git a/tests/unit/test_find_unused.py b/tests/unit/test_find_unused.py

new file mode 100644

index 000000000..77d6e724f

--- /dev/null

+++ b/tests/unit/test_find_unused.py

@@ -0,0 +1,273 @@

+import sys

+import json

+from importlib import reload

+

+from unittest import TestCase, mock

+from unittest.mock import MagicMock

+from nose.tools import assert_equal, assert_true, assert_false

+

+

+class TestFindUnused(TestCase):

+ mock_account = type("account", (object,), {"name": "a"})

+ mock_region = type("region", (object,), {"account": mock_account, "name": "a"})

+

+ def test_find_unused_elastic_ips_empty(self):

+ def mocked_query_side_effect(account, query, region):

+ if query == "ec2-describe-addresses":

+ return {

+ "Addresses": [

+ {

+ "AllocationId": "eipalloc-1",

+ "AssociationId": "eipassoc-1",

+ "Domain": "vpc",

+ "NetworkInterfaceId": "eni-1",

+ "NetworkInterfaceOwnerId": "123456789012",

+ "PrivateIpAddress": "10.0.0.1",

+ "PublicIp": "1.2.3.4",

+ "PublicIpv4Pool": "amazon",

+ }

+ ]

+ }

+

+ # Clear cached module so we can mock stuff

+ if "shared.find_unused" in sys.modules:

+ del sys.modules["shared.find_unused"]

+

+ with mock.patch("shared.common.query_aws") as mock_query:

+ mock_query.side_effect = mocked_query_side_effect

+ from shared.find_unused import find_unused_elastic_ips

+

+ assert_equal(find_unused_elastic_ips(self.mock_region), [])

+

+ def test_find_unused_elastic_ips(self):

+ def mocked_query_side_effect(account, query, region):

+ if query == "ec2-describe-addresses":

+ return {

+ "Addresses": [

+ {

+ "AllocationId": "eipalloc-1",

+ "AssociationId": "eipassoc-1",

+ "Domain": "vpc",

+ "NetworkInterfaceId": "eni-1",

+ "NetworkInterfaceOwnerId": "123456789012",

+ "PrivateIpAddress": "10.0.0.1",

+ "PublicIp": "1.2.3.4",

+ "PublicIpv4Pool": "amazon",

+ },

+ {

+ "PublicIp": "2.3.4.5",

+ "Domain": "vpc",

+ "AllocationId": "eipalloc-2",

+ },

+ ]

+ }

+

+ # Clear cached module so we can mock stuff

+ if "shared.find_unused" in sys.modules:

+ del sys.modules["shared.find_unused"]

+

+ with mock.patch("shared.common.query_aws") as mock_query:

+ mock_query.side_effect = mocked_query_side_effect

+ from shared.find_unused import find_unused_elastic_ips

+

+ assert_equal(

+ find_unused_elastic_ips(self.mock_region),

+ [{"id": "eipalloc-2", "ip": "2.3.4.5"}],

+ )

+

+ def test_find_unused_volumes(self):

+ def mocked_query_side_effect(account, query, region):

+ if query == "ec2-describe-volumes":

+ return {

+ "Volumes": [

+ {

+ "Attachments": [

+ {

+ "AttachTime": "2019-03-21T21:03:04+00:00",

+ "DeleteOnTermination": True,

+ "Device": "/dev/xvda",

+ "InstanceId": "i-1234",

+ "State": "attached",

+ "VolumeId": "vol-1234",

+ }

+ ],

+ "AvailabilityZone": "us-east-1b",

+ "CreateTime": "2019-03-21T21:03:04.345000+00:00",

+ "Encrypted": False,

+ "Iops": 300,

+ "Size": 100,

+ "SnapshotId": "snap-1234",

+ "State": "in-use",

+ "VolumeId": "vol-1234",

+ "VolumeType": "gp2",

+ },

+ {

+ "Attachments": [

+ {

+ "AttachTime": "2019-03-21T21:03:04+00:00",

+ "DeleteOnTermination": True,

+ "Device": "/dev/xvda",

+ "InstanceId": "i-2222",

+ "State": "attached",

+ "VolumeId": "vol-2222",

+ }

+ ],

+ "AvailabilityZone": "us-east-1b",

+ "CreateTime": "2019-03-21T21:03:04.345000+00:00",

+ "Encrypted": False,

+ "Iops": 300,

+ "Size": 100,

+ "SnapshotId": "snap-2222",

+ "State": "available",

+ "VolumeId": "vol-2222",

+ "VolumeType": "gp2",

+ },

+ ]

+ }

+

+ # Clear cached module so we can mock stuff

+ if "shared.find_unused" in sys.modules:

+ del sys.modules["shared.find_unused"]

+

+ with mock.patch("shared.common.query_aws") as mock_query:

+ mock_query.side_effect = mocked_query_side_effect

+ from shared.find_unused import find_unused_volumes

+

+ assert_equal(find_unused_volumes(self.mock_region), [{"id": "vol-2222"}])

+

+ def test_find_unused_security_groups(self):

+ def mocked_query_side_effect(account, query, region):

+ if query == "ec2-describe-security-groups":

+ return {

+ "SecurityGroups": [

+ {

+ "IpPermissionsEgress": [

+ {

+ "IpProtocol": "-1",

+ "PrefixListIds": [],

+ "IpRanges": [{"CidrIp": "0.0.0.0/0"}],

+ "UserIdGroupPairs": [],

+ "Ipv6Ranges": [],

+ }

+ ],

+ "Description": "Public access",

+ "IpPermissions": [

+ {

+ "PrefixListIds": [],

+ "FromPort": 22,

+ "IpRanges": [],

+ "ToPort": 22,

+ "IpProtocol": "tcp",

+ "UserIdGroupPairs": [

+ {

+ "UserId": "123456789012",

+ "GroupId": "sg-00000002",

+ }

+ ],

+ "Ipv6Ranges": [],

+ },

+ {

+ "PrefixListIds": [],

+ "FromPort": 443,

+ "IpRanges": [{"CidrIp": "0.0.0.0/0"}],

+ "ToPort": 443,

+ "IpProtocol": "tcp",

+ "UserIdGroupPairs": [],

+ "Ipv6Ranges": [],

+ },

+ ],

+ "GroupName": "Public",

+ "VpcId": "vpc-12345678",

+ "OwnerId": "123456789012",

+ "GroupId": "sg-00000008",

+ }

+ ]

+ }

+ elif query == "ec2-describe-network-interfaces":

+ return {"NetworkInterfaces": []}

+

+ # Clear cached module so we can mock stuff

+ if "shared.find_unused" in sys.modules:

+ del sys.modules["shared.find_unused"]

+

+ with mock.patch("shared.common.query_aws") as mock_query:

+ mock_query.side_effect = mocked_query_side_effect

+ from shared.find_unused import find_unused_security_groups

+

+ assert_equal(

+ find_unused_security_groups(self.mock_region),

+ [

+ {

+ "description": "Public access",

+ "id": "sg-00000008",

+ "name": "Public",

+ }

+ ],

+ )

+

+ def test_find_unused_network_interfaces(self):

+ def mocked_query_side_effect(account, query, region):

+ if query == "ec2-describe-network-interfaces":

+ return {

+ "NetworkInterfaces": [

+ {

+ "Association": {

+ "IpOwnerId": "amazon",

+ "PublicDnsName": "ec2-3-80-3-41.compute-1.amazonaws.com",

+ "PublicIp": "3.80.3.41",

+ },

+ "Attachment": {

+ "AttachTime": "2018-11-27T03:36:34+00:00",

+ "AttachmentId": "eni-attach-08ac3da5d33fc7a02",

+ "DeleteOnTermination": False,

+ "DeviceIndex": 1,

+ "InstanceOwnerId": "501673713797",

+ "Status": "attached",

+ },

+ "AvailabilityZone": "us-east-1f",

+ "Description": "arn:aws:ecs:us-east-1:653711331788:attachment/ed8fed01-82d0-4bf6-86cf-fe3115c23ab8",

+ "Groups": [

+ {"GroupId": "sg-00000008", "GroupName": "Public"}

+ ],

+ "InterfaceType": "interface",

+ "Ipv6Addresses": [],

+ "MacAddress": "16:2f:d0:d6:ed:28",

+ "NetworkInterfaceId": "eni-00000001",

+ "OwnerId": "653711331788",

+ "PrivateDnsName": "ip-172-31-48-168.ec2.internal",

+ "PrivateIpAddress": "172.31.48.168",

+ "PrivateIpAddresses": [

+ {

+ "Association": {

+ "IpOwnerId": "amazon",

+ "PublicDnsName": "ec2-3-80-3-41.compute-1.amazonaws.com",

+ "PublicIp": "3.80.3.41",

+ },

+ "Primary": True,

+ "PrivateDnsName": "ip-172-31-48-168.ec2.internal",

+ "PrivateIpAddress": "172.31.48.168",

+ }

+ ],

+ "RequesterId": "578734482556",

+ "RequesterManaged": True,

+ "SourceDestCheck": True,

+ "Status": "available",

+ "SubnetId": "subnet-00000001",

+ "TagSet": [],

+ "VpcId": "vpc-12345678",

+ }

+ ]

+ }

+

+ # Clear cached module so we can mock stuff

+ if "shared.find_unused" in sys.modules:

+ del sys.modules["shared.find_unused"]

+

+ with mock.patch("shared.common.query_aws") as mock_query:

+ mock_query.side_effect = mocked_query_side_effect

+ from shared.find_unused import find_unused_network_interfaces

+

+ assert_equal(

+ find_unused_network_interfaces(self.mock_region),

+ [{"id": "eni-00000001"}],

+ )

diff --git a/vendor_accounts.yaml b/vendor_accounts.yaml

index 7e25882e6..b311e6f3f 100644

--- a/vendor_accounts.yaml

+++ b/vendor_accounts.yaml

@@ -11,6 +11,8 @@

# Note the `name` is referenced in ./web/style.json which then references an

# image file in ./web/icons/logos/ for these vendors to display in the

# web-of-trust view.

+#

+# Some of these were found originally from https://github.com/dagrz/aws_pwn/blob/master/miscellanea/integrations.txt

- name: Cloudhealth

source: 'https://github.com/mozilla/security/blob/master/operations/cloudformation-templates/cloudhealth_iam_role.json'

@@ -67,8 +69,6 @@

type: 'aws'

source: 'https://docs.aws.amazon.com/awsaccountbilling/latest/aboutv2/billing-getting-started.html#step-2'

accounts: ['386209384616']

-

- # The following are from https://github.com/dagrz/aws_pwn/blob/master/miscellanea/integrations.txt and still need logos

- name: 'dome9'

source: 'http://support.dome9.com/knowledgebase/articles/796419-convert-dome9-aws-account-connection-from-iam-user'

accounts: ['634729597623']

@@ -148,4 +148,10 @@

Source: 'https://onelogin.service-now.com/kb_view_customer.do?sysparm_article=KB0010344'

- name: 'nOps'

accounts: ['202279780353']

- source: 'https://help.nops.io/manual_setup'

\ No newline at end of file

+ source: 'https://help.nops.io/manual_setup'

+- name: 'Fivetran'

+ source: 'https://fivetran.com/docs/logs/cloudwatch/setup-guide'

+ accounts: ['834469178297']

+- name: 'Databricks'

+ source: 'https://docs.databricks.com/administration-guide/account-settings/aws-accounts.html'

+ accounts: ['414351767826']

\ No newline at end of file
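The comment at the top of this file says each vendor `name` is referenced from `./web/style.json` to choose a logo in the web-of-trust view. That implies a lookup from an AWS account ID to a vendor name somewhere. The minimal sketch below shows such a lookup, assuming the YAML has already been loaded into a list of dicts (for example with PyYAML). `build_vendor_index` and `vendor_name_for` are hypothetical helpers, not CloudMapper functions. The three entries are copied from this diff.

```python
# Sketch: resolve AWS account IDs to vendor names from entries shaped like
# vendor_accounts.yaml. Helper names are hypothetical; data is from the diff.
VENDOR_ACCOUNTS = [
    {"name": "nOps", "accounts": ["202279780353"]},
    {"name": "Fivetran", "accounts": ["834469178297"]},
    {"name": "Databricks", "accounts": ["414351767826"]},
]


def build_vendor_index(vendors):
    """Map every listed account ID to the name of the vendor that owns it."""
    index = {}
    for vendor in vendors:
        for account_id in vendor.get("accounts", []):
            index[account_id] = vendor["name"]
    return index


def vendor_name_for(account_id, index):
    """Return the vendor name for a known account ID, or None if unknown."""
    return index.get(account_id)


index = build_vendor_index(VENDOR_ACCOUNTS)
print(vendor_name_for("834469178297", index))  # Fivetran
print(vendor_name_for("111111111111", index))  # None
```

This lookup reads only `name` and `accounts`. As a result, the capitalized `Source:` key on the OneLogin entry above does not affect it. Any code that reads `source` would silently miss that entry, though.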

diff --git a/web/icons/logos/databricks.png b/web/icons/logos/databricks.png

new file mode 100644

index 000000000..5c0c6bc41

Binary files /dev/null and b/web/icons/logos/databricks.png differ

diff --git a/web/icons/logos/fivetran.png b/web/icons/logos/fivetran.png

new file mode 100644

index 000000000..05498df97

Binary files /dev/null and b/web/icons/logos/fivetran.png differ

diff --git a/web/js/cloudmap.js b/web/js/cloudmap.js

index 86dab1a96..a586bc88b 100644

--- a/web/js/cloudmap.js

+++ b/web/js/cloudmap.js

@@ -51,6 +51,11 @@ $(window).on('load', function(){

},

style: stylefile[0]

});

+ })

+ .fail(function(e) {

+ if (e.status == 404) {

+ alert("Failed to fetch data!\nPlease run cloudmapper.py prepare before using the webserver");

+ }

});

}); // Page loaded

diff --git a/web/style.json b/web/style.json

index 10c25fc4f..afed45eae 100644

--- a/web/style.json

+++ b/web/style.json

@@ -745,6 +745,32 @@

"height": 100

}

},

+ {

+ "selector": "[type = \"Fivetran\"]",

+

+ "css": {

+ "label": "",

+ "background-opacity": 0,

+ "background-image": "./icons/logos/fivetran.png",

+ "background-fit": "contain",

+ "background-clip": "none",

+ "width": 150,

+ "height": 100

+ }

+ },

+ {

+ "selector": "[type = \"Databricks\"]",

+

+ "css": {

+ "label": "",

+ "background-opacity": 0,

+ "background-image": "./icons/logos/databricks.png",

+ "background-fit": "contain",

+ "background-clip": "none",

+ "width": 150,

+ "height": 100

+ }

+ },

{

"selector": "[type = \"Lacework\"]",

+

-

## Installation

@@ -101,22 +130,10 @@ Collecting the data is done as follows:

python cloudmapper.py collect --account my_account

```

-# Commands

+### Alternatives

+For network diagrams, you may want to try https://github.com/lyft/cartography or https://github.com/anaynayak/aws-security-viz

-- `api_endpoints`: List the URLs that can be called via API Gateway.

-- `audit`: Check for potential misconfigurations.

-- `collect`: Collect metadata about an account. More details [here](https://summitroute.com/blog/2018/06/05/cloudmapper_collect/).

-- `find_admins`: Look at IAM policies to identify admin users and roles and spot potential IAM issues. More details [here](https://summitroute.com/blog/2018/06/12/cloudmapper_find_admins/).

-- `prepare`/`webserver`: See [Network Visualizations](docs/network_visualizations.md)

-- `public`: Find public hosts and port ranges. More details [here](https://summitroute.com/blog/2018/06/13/cloudmapper_public/).

-- `sg_ips`: Get geoip info on CIDRs trusted in Security Groups. More details [here](https://summitroute.com/blog/2018/06/12/cloudmapper_sg_ips/).

-- `stats`: Show counts of resources for accounts. More details [here](https://summitroute.com/blog/2018/06/06/cloudmapper_stats/).

-- `weboftrust`: Show Web Of Trust. More details [here](https://summitroute.com/blog/2018/06/13/cloudmapper_wot/).

-- `report`: Generate HTML report. Includes summary of the accounts and audit findings. More details [here](https://summitroute.com/blog/2019/03/04/cloudmapper_report_generation/).

-- `iam_report`: Generate HTML report for the IAM information of an account. More details [here](https://summitroute.com/blog/2019/03/11/cloudmapper_iam_report_command/).

-

-

-If you want to add your own private commands, you can create a `private_commands` directory and add them there.

+For auditing and other AWS security tools, see https://github.com/toniblyx/my-arsenal-of-aws-security-tools

Licenses

--------

diff --git a/account-data/demo/us-east-1/iam-get-account-authorization-details.json b/account-data/demo/us-east-1/iam-get-account-authorization-details.json

index 352a0b41b..6d56bce8f 100644

--- a/account-data/demo/us-east-1/iam-get-account-authorization-details.json

+++ b/account-data/demo/us-east-1/iam-get-account-authorization-details.json

@@ -117,7 +117,12 @@

],

"Version": "2012-10-17"

},

- "AttachedManagedPolicies": [],

+ "AttachedManagedPolicies": [

+ {

+ "PolicyName": "AmazonEC2RoleforSSM",

+ "PolicyArn": "arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM"

+ }

+ ],

"CreateDate": "2018-11-20T17:14:45+00:00",

"InstanceProfileList": [],

"Path": "/service-role/",

diff --git a/audit_config.yaml b/audit_config.yaml

index a5925d6e6..a7fa22c26 100644

--- a/audit_config.yaml

+++ b/audit_config.yaml

@@ -141,13 +141,20 @@ USER_HAS_NOT_USED_ACCESS_KEY_FOR_MAX_DAYS:

is_global: True

group: IAM

-BAD_MFA_POLICY:

+IAM_BAD_MFA_POLICY:

title: Incorrect policy used to attempt to enforce MFA

description: AWS had advised incorrect policies for enforcing MFA which allowed an attacker, if they compromised keys that were protected by this policy, to remove the MFA policy from themselves, or remove the existing MFA device and add their own.

severity: High

is_global: True

group: IAM

+IAM_KNOWN_BAD_POLICY:

+ title: Known bad policy used

+ description: AWS has provided flawed policies to customers. These are either deprecated or no longer advised.

+ severity: High

+ is_global: True

+ group: IAM

+

IAM_NOTACTION_ALLOW:

title: Use of NotAction in an Allow statement

description: Using NotAction in an Allow policy almost always results in unwanted actions being allowed and should be avoided.

@@ -155,6 +162,13 @@ IAM_NOTACTION_ALLOW:

is_global: True

group: IAM

+IAM_ROLE_ALLOWS_ASSUMPTION_FROM_ANYWHERE:

+ title: IAM role allows assumption from anywhere

+ description: The IAM role's trust policy allows any other account to assume it.

+ severity: High

+ is_global: True

+ group: IAM

+

IAM_MANAGED_POLICY_UNINTENTIONALLY_ALLOWING_ADMIN:

title: Managed policy is allowing admin

description: This finding is primarily for the deprecated AmazonElasticTranscoderFullAccess policy that was found to grant admin privileges.

@@ -265,6 +279,12 @@ EC2_CLASSIC:

severity: Info

group: EC2

+EC2_OLD:

+ title: Old EC2

+ description: EC2 instance that is running and was launched more than 365 days ago.

+ severity: Info

+ group: EC2

+

LAMBDA_PUBLIC:

title: Lambda is internet accessible

description: Lambdas should not be publicly callable. Other resources, such as an API Gateway should be used to call the Lambda.
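This diff renames `BAD_MFA_POLICY` to `IAM_BAD_MFA_POLICY` and adds three new IDs: `IAM_KNOWN_BAD_POLICY`, `IAM_ROLE_ALLOWS_ASSUMPTION_FROM_ANYWHERE`, and `EC2_OLD`. The code and `tests/unit/test_audit.py` refer to these IDs by name, so a stale reference is easy to miss.

The sketch below is a minimal consistency check, assuming the YAML has been parsed into a dict. `check_audit_config` is hypothetical. The required-key list is also an assumption: it is taken from the fields the `EC2_OLD` entry uses. That entry omits `is_global`, so `is_global` is treated as optional.

```python
# Hypothetical consistency check for audit_config.yaml-style data.
# Assumption: title, description, severity and group are required;
# is_global is optional (the EC2_OLD entry omits it).
REQUIRED_KEYS = ("title", "description", "severity", "group")


def check_audit_config(config, emitted_ids):
    """Return human-readable problems found in the config.

    config      -- dict of finding ID -> entry dict, as parsed from YAML
    emitted_ids -- finding IDs that the code passes to Finding(...)
    """
    problems = []
    for finding_id in sorted(config):
        missing = [key for key in REQUIRED_KEYS if key not in config[finding_id]]
        if missing:
            problems.append("{}: missing {}".format(finding_id, ", ".join(missing)))
    # IDs used in code but absent from the config (e.g. a pre-rename name).
    for finding_id in sorted(set(emitted_ids) - set(config)):
        problems.append("{}: not defined in audit_config.yaml".format(finding_id))
    return problems


config = {
    "IAM_BAD_MFA_POLICY": {"title": "Incorrect policy used to attempt to enforce MFA",
                           "description": "(see audit_config.yaml)",
                           "severity": "High", "is_global": True, "group": "IAM"},
    "EC2_OLD": {"title": "Old EC2", "description": "(see audit_config.yaml)",
                "severity": "Info", "group": "EC2"},
}
print(check_audit_config(config, ["IAM_BAD_MFA_POLICY", "EC2_OLD", "BAD_MFA_POLICY"]))
# ['BAD_MFA_POLICY: not defined in audit_config.yaml']
```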

diff --git a/cloudmapper.py b/cloudmapper.py

index 09302f78c..810dd56b5 100755

--- a/cloudmapper.py

+++ b/cloudmapper.py

@@ -30,7 +30,7 @@

import pkgutil

import importlib

-__version__ = "2.5.7"

+__version__ = "2.6.0"

def show_help(commands):

diff --git a/commands/find_unused.py b/commands/find_unused.py

new file mode 100644

index 000000000..986907381

--- /dev/null

+++ b/commands/find_unused.py

@@ -0,0 +1,14 @@

+from __future__ import print_function

+import json

+

+from shared.common import parse_arguments

+from shared.find_unused import find_unused_resources

+

+

+__description__ = "Find unused resources in accounts"

+

+def run(arguments):

+ _, accounts, config = parse_arguments(arguments)

+ unused_resources = find_unused_resources(accounts)

+

+ print(json.dumps(unused_resources, indent=2, sort_keys=True))
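The `find_unused` command prints a JSON list with one entry per account. Each entry holds a `regions` list, and each region's `unused_resources` dict contains only the resource types that had hits, because `add_if_exists` skips empty lists.

The sketch below is a hypothetical post-processing helper that turns that output into per-account counts. It assumes the printed JSON has been saved and parsed back with `json.load`. The sample data is illustrative only. Keying the summary by account `name` is also an assumption; key by `id` instead if names can repeat.

```python
import json
from collections import Counter


def summarize_unused(unused_resources):
    """Count unused resources by type for each account name.

    Expects the structure printed by the find_unused command:
    [{"account": {"id", "name"}, "regions": [{"region", "unused_resources"}]}]
    """
    summary = {}
    for entry in unused_resources:
        counts = Counter()
        for region in entry["regions"]:
            # Regions with nothing unused carry an empty dict here.
            for resource_type, items in region["unused_resources"].items():
                counts[resource_type] += len(items)
        summary[entry["account"]["name"]] = dict(counts)
    return summary


sample = [
    {
        "account": {"id": "123456789012", "name": "demo"},
        "regions": [
            {"region": "us-east-1",
             "unused_resources": {"volumes": [{"id": "vol-2222"}],
                                  "elastic_ips": [{"id": "eipalloc-2", "ip": "2.3.4.5"}]}},
            {"region": "us-west-2", "unused_resources": {}},
        ],
    }
]
print(json.dumps(summarize_unused(sample), sort_keys=True))
# {"demo": {"elastic_ips": 1, "volumes": 1}}
```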

diff --git a/commands/report.py b/commands/report.py

index 3307e6bd0..ae4732753 100644

--- a/commands/report.py

+++ b/commands/report.py

@@ -84,7 +84,7 @@ def report(accounts, config, args):

{

"name": account["name"],

"id": account["id"],

- "collection_date": get_collection_date(account),

+ "collection_date": get_collection_date(account)[:10],

}

)

diff --git a/docs/images/command_line_audit.png b/docs/images/command_line_audit.png

new file mode 100644

index 000000000..f9f970fde

Binary files /dev/null and b/docs/images/command_line_audit.png differ

diff --git a/docs/images/command_line_public.png b/docs/images/command_line_public.png

new file mode 100644

index 000000000..7b74b5f25

Binary files /dev/null and b/docs/images/command_line_public.png differ

diff --git a/docs/images/iam_report-inactive_and_detail.png b/docs/images/iam_report-inactive_and_detail.png

new file mode 100644

index 000000000..a5e838abb

Binary files /dev/null and b/docs/images/iam_report-inactive_and_detail.png differ

diff --git a/docs/images/report_findings.png b/docs/images/report_findings.png

new file mode 100644

index 000000000..e36a9cc15

Binary files /dev/null and b/docs/images/report_findings.png differ

diff --git a/docs/images/report_findings_summary.png b/docs/images/report_findings_summary.png

new file mode 100644

index 000000000..e5c2a0634

Binary files /dev/null and b/docs/images/report_findings_summary.png differ

diff --git a/docs/images/report_resources.png b/docs/images/report_resources.png

new file mode 100644

index 000000000..7af7d639c

Binary files /dev/null and b/docs/images/report_resources.png differ

diff --git a/shared/audit.py b/shared/audit.py

index ad1a17a81..3edd5bfc6 100644

--- a/shared/audit.py

+++ b/shared/audit.py

@@ -1,5 +1,4 @@

import json

-from datetime import datetime

import pyjq

import traceback

@@ -12,6 +11,8 @@

is_unblockable_cidr,

is_external_cidr,

Finding,

+ get_collection_date,

+ days_between

)

from shared.query import query_aws, get_parameter_file

from shared.nodes import Account, Region

@@ -236,14 +237,6 @@ def audit_root_user(findings, region):

def audit_users(findings, region):

MAX_DAYS_SINCE_LAST_USAGE = 90

- def days_between(s1, s2):

- """s1 and s2 are date strings, such as 2018-04-08T23:33:20+00:00 """

- time_format = "%Y-%m-%dT%H:%M:%S"

-

- d1 = datetime.strptime(s1.split("+")[0], time_format)

- d2 = datetime.strptime(s2.split("+")[0], time_format)

- return abs((d1 - d2).days)

-

# TODO: Convert all of this into a table

json_blob = query_aws(region.account, "iam-get-credential-report", region)

@@ -635,6 +628,23 @@ def audit_ec2(findings, region):

# Ignore EC2's that are off

continue

+ # Check for old instances

+ if instance.get("LaunchTime", "") != "":

+ MAX_RESOURCE_AGE_DAYS = 365

+ collection_date = get_collection_date(region.account)

+ launch_time = instance["LaunchTime"].split(".")[0]

+ age_in_days = days_between(launch_time, collection_date)

+ if age_in_days > MAX_RESOURCE_AGE_DAYS:

+ findings.add(Finding(

+ region,

+ "EC2_OLD",

+ instance["InstanceId"],

+ resource_details={

+ "Age in days": age_in_days

+ },

+ ))

+

+ # Check for EC2 Classic

if "vpc" not in instance.get("VpcId", ""):

findings.add(Finding(region, "EC2_CLASSIC", instance["InstanceId"]))

@@ -729,7 +739,7 @@ def audit_sg(findings, region):

region,

"SG_LARGE_CIDR",

cidr,

- resource_details={"size": ip.size, "security_groups": cidrs[cidr]},

+ resource_details={"size": ip.size, "security_groups": list(cidrs[cidr])},

)

)

@@ -932,7 +942,7 @@ def audit(accounts):

if region.name == "us-east-1":

audit_s3_buckets(findings, region)

audit_cloudtrail(findings, region)

- audit_iam(findings, region.account)

+ audit_iam(findings, region)

audit_password_policy(findings, region)

audit_root_user(findings, region)

audit_users(findings, region)
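The `EC2_OLD` check above compares each instance's `LaunchTime` with the collection date returned by `get_collection_date`. That date is the credential report's `GeneratedTime`. The check reports instances launched more than 365 days earlier.

The sketch below restates the check as a standalone function over `ec2-describe-instances`-style dicts. The diff shows only the `continue` for instances that are off, so the sketch assumes an instance counts as on when `State.Name` is `running`. It also swaps in `datetime.fromisoformat` for the diff's `split(".")` / `split("+")` parsing, so that offsets other than `+00:00` are honored. `parse_aws_time` and `find_old_instances` are hypothetical names.

```python
import datetime

MAX_RESOURCE_AGE_DAYS = 365  # same threshold as the EC2_OLD check


def parse_aws_time(value):
    """Parse an AWS timestamp into a timezone-aware datetime.

    A trailing 'Z' is rewritten to '+00:00' because datetime.fromisoformat
    only accepts 'Z' from Python 3.11 onward.
    """
    if value.endswith("Z"):
        value = value[:-1] + "+00:00"
    return datetime.datetime.fromisoformat(value)


def find_old_instances(instances, collection_date, max_age=MAX_RESOURCE_AGE_DAYS):
    """Return [{"id", "age_days"}] for running instances older than max_age days."""
    collected = parse_aws_time(collection_date)
    old = []
    for instance in instances:
        # Assumption: an instance is "on" when State.Name is "running".
        if instance.get("State", {}).get("Name") != "running":
            continue
        launch_time = instance.get("LaunchTime", "")
        if not launch_time:
            continue
        age_days = (collected - parse_aws_time(launch_time)).days
        if age_days > max_age:
            old.append({"id": instance["InstanceId"], "age_days": age_days})
    return old


instances = [
    {"InstanceId": "i-old", "State": {"Name": "running"},
     "LaunchTime": "2017-01-01T00:00:00.000Z"},
    {"InstanceId": "i-new", "State": {"Name": "running"},
     "LaunchTime": "2019-03-21T21:03:04+00:00"},
    {"InstanceId": "i-off", "State": {"Name": "stopped"},
     "LaunchTime": "2010-01-01T00:00:00+00:00"},
]
print(find_old_instances(instances, "2019-05-07T15:40:22+00:00"))
# [{'id': 'i-old', 'age_days': 856}]
```

The example subtracts the launch time from the collection date, so the delta is positive. That means `.days` simply drops the partial day.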

diff --git a/shared/common.py b/shared/common.py

index 9b576b18f..07519fb70 100644

--- a/shared/common.py

+++ b/shared/common.py

@@ -309,9 +309,21 @@ def get_us_east_1(account):

raise Exception("us-east-1 not found")

+def iso_date(d):

+ """Convert an ISO 8601 date string such as 2018-04-08T23:33:20+00:00 into a naive datetime, discarding the +HH:MM offset"""

+ time_format = "%Y-%m-%dT%H:%M:%S"

+ return datetime.datetime.strptime(d.split("+")[0], time_format)

+

+def days_between(s1, s2):

+ """Return the absolute number of whole days between two ISO 8601 date strings"""

+ d1 = iso_date(s1)

+ d2 = iso_date(s2)

+ return abs(d1 - d2).days

def get_collection_date(account):

- account_struct = Account(None, account)

+ if not isinstance(account, Account):

+ account = Account(None, account)

+ account_struct = account

json_blob = query_aws(

account_struct, "iam-get-credential-report", get_us_east_1(account_struct)

)

@@ -319,8 +331,7 @@ def get_collection_date(account):

raise Exception("File iam-get-credential-report.json does not exist or is not well-formed. Likely cause is you did not run the collect command for this account.")

# GeneratedTime looks like "2019-01-30T15:43:24+00:00"

- # so extract the data part "2019-01-30"

- return json_blob["GeneratedTime"][:10]

+ return json_blob["GeneratedTime"]

def get_access_advisor_active_counts(account, max_age=90):
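`iso_date` drops everything after `+` before parsing. That works for the `+00:00` timestamps AWS returns. A negative offset such as `-05:00` fails, however: `strptime` sees unconverted trailing data and raises `ValueError`.

The sketch below restates the two helpers, with `abs()` applied to the timedelta before reading `.days`. This matters because reading `.days` on a negative delta floors it toward minus infinity, so swapping the arguments could change the result by one day. The sketch also adds an offset-aware variant built on `datetime.fromisoformat` (Python 3.7+). `days_between_aware` is a hypothetical name, not part of `shared/common.py`.

```python
import datetime

TIME_FORMAT = "%Y-%m-%dT%H:%M:%S"


def iso_date(d):
    """Parse e.g. 2018-04-08T23:33:20+00:00 into a naive datetime.

    As in shared/common.py, the text after '+' (the UTC offset) is discarded.
    """
    return datetime.datetime.strptime(d.split("+")[0], TIME_FORMAT)


def days_between(s1, s2):
    """Whole days between two '+00:00' ISO strings, independent of argument order."""
    return abs(iso_date(s1) - iso_date(s2)).days


def days_between_aware(s1, s2):
    """Same, but honors any UTC offset by comparing timezone-aware datetimes."""
    d1 = datetime.datetime.fromisoformat(s1)
    d2 = datetime.datetime.fromisoformat(s2)
    return abs(d1 - d2).days


print(days_between("2018-04-08T23:33:20+00:00", "2019-05-07T15:40:22+00:00"))  # 393
print(days_between_aware("2019-01-01T00:00:00-05:00", "2019-01-02T06:00:00+00:00"))  # 1
```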

diff --git a/shared/find_unused.py b/shared/find_unused.py

new file mode 100644

index 000000000..5e208b389

--- /dev/null

+++ b/shared/find_unused.py

@@ -0,0 +1,119 @@

+import pyjq

+

+from shared.common import query_aws, get_regions

+from shared.nodes import Account, Region

+

+

+def find_unused_security_groups(region):

+ # Get the defined security groups, then find all the Security Groups associated with the

+ # ENIs. Then diff these to find the unused Security Groups.

+ used_sgs = set()

+

+ defined_sgs = query_aws(region.account, "ec2-describe-security-groups", region)

+

+ network_interfaces = query_aws(

+ region.account, "ec2-describe-network-interfaces", region

+ )

+

+ defined_sg_set = {}

+

+ for sg in pyjq.all(".SecurityGroups[]", defined_sgs):

+ defined_sg_set[sg["GroupId"]] = sg

+

+ for used_sg in pyjq.all(

+ ".NetworkInterfaces[].Groups[].GroupId", network_interfaces

+ ):

+ used_sgs.add(used_sg)

+

+ unused_sg_ids = set(defined_sg_set) - used_sgs

+ unused_sgs = []

+ for sg_id in unused_sg_ids:

+ unused_sgs.append(

+ {

+ "id": sg_id,

+ "name": defined_sg_set[sg_id]["GroupName"],

+ "description": defined_sg_set[sg_id].get("Description", ""),

+ }

+ )

+ return unused_sgs

+

+

+def find_unused_volumes(region):

+ unused_volumes = []

+ volumes = query_aws(region.account, "ec2-describe-volumes", region)

+ for volume in pyjq.all('.Volumes[]|select(.State=="available")', volumes):

+ unused_volumes.append({"id": volume["VolumeId"]})

+

+ return unused_volumes

+

+

+def find_unused_elastic_ips(region):

+ unused_ips = []

+ ips = query_aws(region.account, "ec2-describe-addresses", region)

+ for ip in pyjq.all(".Addresses[] | select(.AssociationId == null)", ips):

+ unused_ips.append({"id": ip["AllocationId"], "ip": ip["PublicIp"]})

+

+ return unused_ips

+

+

+def find_unused_network_interfaces(region):

+ unused_network_interfaces = []

+ network_interfaces = query_aws(

+ region.account, "ec2-describe-network-interfaces", region

+ )

+ for network_interface in pyjq.all(

+ '.NetworkInterfaces[]|select(.Status=="available")', network_interfaces

+ ):

+ unused_network_interfaces.append(

+ {"id": network_interface["NetworkInterfaceId"]}

+ )

+

+ return unused_network_interfaces

+

+

+def add_if_exists(dictionary, key, value):

+ if value:

+ dictionary[key] = value

+

+

+def find_unused_resources(accounts):

+ unused_resources = []

+ for account in accounts:

+ unused_resources_for_account = []

+ for region_json in get_regions(Account(None, account)):

+ region = Region(Account(None, account), region_json)

+

+ unused_resources_for_region = {}

+

+ add_if_exists(

+ unused_resources_for_region,

+ "security_groups",

+ find_unused_security_groups(region),

+ )

+ add_if_exists(

+ unused_resources_for_region, "volumes", find_unused_volumes(region)

+ )

+ add_if_exists(

+ unused_resources_for_region,

+ "elastic_ips",

+ find_unused_elastic_ips(region),

+ )

+ add_if_exists(

+ unused_resources_for_region,

+ "network_interfaces",

+ find_unused_network_interfaces(region),

+ )

+

+ unused_resources_for_account.append(

+ {

+ "region": region_json["RegionName"],

+ "unused_resources": unused_resources_for_region,

+ }

+ )

+ unused_resources.append(

+ {

+ "account": {"id": account["id"], "name": account["name"]},

+ "regions": unused_resources_for_account,

+ }

+ )

+ return unused_resources
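`find_unused_security_groups` treats a group as unused when no network interface lists it in `Groups`. The other three functions each filter on a single field.

The same logic is sketched below without `pyjq`, which can make it easier to test in isolation. The function names are hypothetical, and the sketches return bare IDs rather than the diff's dicts where that is simpler. Two details are worth noting:

- A group referenced only from another group's rules (`UserIdGroupPairs`) is still reported, because only ENI attachments are counted.
- The sketch sorts its security-group result. The diff iterates a `set`, which gives no guaranteed order.

```python
# Plain-Python equivalents of the pyjq filters in shared/find_unused.py.
def unused_security_group_ids(security_groups, network_interfaces):
    """IDs of security groups not attached to any ENI, sorted for stable output."""
    defined = {sg["GroupId"] for sg in security_groups.get("SecurityGroups", [])}
    used = {
        group["GroupId"]
        for eni in network_interfaces.get("NetworkInterfaces", [])
        for group in eni.get("Groups", [])
    }
    return sorted(defined - used)


def unused_volume_ids(volumes):
    # Equivalent of '.Volumes[]|select(.State=="available")'
    return [v["VolumeId"] for v in volumes.get("Volumes", [])
            if v.get("State") == "available"]


def unused_elastic_ips(addresses):
    # Equivalent of '.Addresses[] | select(.AssociationId == null)'. jq yields
    # null for a missing key, so unassociated addresses lack AssociationId.
    return [
        {"id": a["AllocationId"], "ip": a["PublicIp"]}
        for a in addresses.get("Addresses", [])
        if a.get("AssociationId") is None
    ]


def unused_network_interface_ids(network_interfaces):
    # Equivalent of '.NetworkInterfaces[]|select(.Status=="available")'
    return [
        eni["NetworkInterfaceId"]
        for eni in network_interfaces.get("NetworkInterfaces", [])
        if eni.get("Status") == "available"
    ]
```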

diff --git a/shared/iam_audit.py b/shared/iam_audit.py

index 99f2cbe84..583365ba4 100644

--- a/shared/iam_audit.py

+++ b/shared/iam_audit.py

@@ -12,6 +12,11 @@

from shared.query import query_aws, get_parameter_file

from shared.nodes import Account, Region

+KNOWN_BAD_POLICIES = {

+ "arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM": "Use AmazonSSMManagedInstanceCore instead and add privs as needed",

+ "arn:aws:iam::aws:policy/service-role/AmazonMachineLearningRoleforRedshiftDataSource": "Use AmazonMachineLearningRoleforRedshiftDataSourceV2 instead"

+

+}

def get_current_policy_doc(policy):

for doc in policy["PolicyVersionList"]:

@@ -44,7 +49,7 @@ def policy_action_count(policy_doc, location):

return actions_count

-def is_admin_policy(policy_doc, location):

+def is_admin_policy(policy_doc, location, findings, region):

# This attempts to identify policies that directly allow admin privs, or indirectly through possible

# privilege escalation (ex. iam:PutRolePolicy to add an admin policy to itself).

# It is a best effort. It will have false negatives, meaning it may not identify an admin policy

@@ -135,14 +140,14 @@ def check_for_bad_policy(findings, region, arn, policy_text):

== "AllowIndividualUserToViewAndManageTheirOwnMFA"

):

if "iam:DeactivateMFADevice" in make_list(statement.get("Action", [])):

- findings.add(Finding(region, "BAD_MFA_POLICY", arn, policy_text))

+ findings.add(Finding(region, "IAM_BAD_MFA_POLICY", arn, policy_text))

return

elif (

statement.get("Sid", "")

== "BlockAnyAccessOtherThanAboveUnlessSignedInWithMFA"

):

if "iam:*" in make_list(statement.get("NotAction", [])):

- findings.add(Finding(region, "BAD_MFA_POLICY", arn, policy_text))

+ findings.add(Finding(region, "IAM_BAD_MFA_POLICY", arn, policy_text))

return

@@ -180,7 +185,7 @@ def find_admins_in_account(region, findings):

policy_action_counts[policy["Arn"]] = policy_action_count(policy_doc, location)

- if is_admin_policy(policy_doc, location):

+ if is_admin_policy(policy_doc, location, findings, region):

admin_policies.append(policy["Arn"])

if (

"arn:aws:iam::aws:policy/AdministratorAccess" in policy["Arn"]

@@ -220,9 +225,22 @@ def find_admins_in_account(region, findings):

reasons.append(

"Attached managed policy: {}".format(policy["PolicyArn"])

)

+ if policy["PolicyArn"] in KNOWN_BAD_POLICIES:

+ findings.add(

+ Finding(

+ region,

+ "IAM_KNOWN_BAD_POLICY",

+ role["Arn"],

+ resource_details={

+ "comment": KNOWN_BAD_POLICIES[policy["PolicyArn"]],

+ "policy": policy["PolicyArn"],

+ },

+ )

+ )

+

for policy in role["RolePolicyList"]:

policy_doc = policy["PolicyDocument"]

- if is_admin_policy(policy_doc, location):

+ if is_admin_policy(policy_doc, location, findings, region):

reasons.append("Custom policy: {}".format(policy["PolicyName"]))

findings.add(

Finding(

@@ -236,23 +254,39 @@ def find_admins_in_account(region, findings):

)

)

- if len(reasons) != 0:

- for stmt in role["AssumeRolePolicyDocument"]["Statement"]:

- if stmt["Effect"] != "Allow":

- findings.add(

- Finding(

- region,

- "IAM_UNEXPECTED_FORMAT",

- role["Arn"],

- resource_details={

- "comment": "Unexpected Effect in AssumeRolePolicyDocument",

- "statement": stmt,

- },

- )

+ # Check if role is accessible from anywhere

+ policy = Policy(role["AssumeRolePolicyDocument"])

+ if policy.is_internet_accessible():

+ findings.add(

+ Finding(

+ region,

+ "IAM_ROLE_ALLOWS_ASSUMPTION_FROM_ANYWHERE",

+ role["Arn"],

+ resource_details={

+ "statement": role["AssumeRolePolicyDocument"],

+ },

+ )

+ )

+

+ # Check if anything looks malformed

+ for stmt in role["AssumeRolePolicyDocument"]["Statement"]:

+ if stmt["Effect"] != "Allow":

+ findings.add(

+ Finding(

+ region,

+ "IAM_UNEXPECTED_FORMAT",

+ role["Arn"],

+ resource_details={

+ "comment": "Unexpected Effect in AssumeRolePolicyDocument",

+ "statement": stmt,

+ },

)

- continue

+ )

+ continue

- if stmt["Action"] == "sts:AssumeRole":

+ if stmt["Action"] == "sts:AssumeRole":

+ if len(reasons) != 0:

+ # Admin assumption should be done by users or roles, not by AWS services

if "AWS" not in stmt["Principal"] or len(stmt["Principal"]) != 1:

findings.add(

Finding(

@@ -260,27 +294,28 @@ def find_admins_in_account(region, findings):

"IAM_UNEXPECTED_FORMAT",

role["Arn"],

resource_details={

- "comment": "Unexpected Principal in AssumeRolePolicyDocument",

+ "comment": "Unexpected Principal in AssumeRolePolicyDocument for an admin",

"Principal": stmt["Principal"],

},

)

)

- elif stmt["Action"] == "sts:AssumeRoleWithSAML":

- continue

- else:

- findings.add(

- Finding(

- region,

- "IAM_UNEXPECTED_FORMAT",

- role["Arn"],

- resource_details={

- "comment": "Unexpected Action in AssumeRolePolicyDocument",

- "statement": [stmt],

- },

- )

+ elif stmt["Action"] == "sts:AssumeRoleWithSAML":

+ continue

+ else:

+ findings.add(

+ Finding(

+ region,

+ "IAM_UNEXPECTED_FORMAT",

+ role["Arn"],

+ resource_details={

+ "comment": "Unexpected Action in AssumeRolePolicyDocument",

+ "statement": [stmt],

+ },

)

+ )

- record_admin(admins, account.name, "role", role["RoleName"])

+ if len(reasons) != 0:

+ record_admin(admins, account.name, "role", role["RoleName"])

# TODO Should check users or other roles allowed to assume this role to show they are admins

location.pop("role", None)

@@ -301,9 +336,21 @@ def find_admins_in_account(region, findings):

None,

)

)

+ if policy["PolicyArn"] in KNOWN_BAD_POLICIES:

+ findings.add(

+ Finding(

+ region,

+ "IAM_KNOWN_BAD_POLICY",

+ group["Arn"],

+ resource_details={

+ "comment": KNOWN_BAD_POLICIES[policy["PolicyArn"]],

+ "policy": policy["PolicyArn"],

+ },

+ )

+ )

for policy in group["GroupPolicyList"]:

policy_doc = policy["PolicyDocument"]

- if is_admin_policy(policy_doc, location):

+ if is_admin_policy(policy_doc, location, findings, region):

is_admin = True

findings.add(

Finding(

@@ -331,9 +378,21 @@ def find_admins_in_account(region, findings):

reasons.append(

"Attached managed policy: {}".format(policy["PolicyArn"])

)

+ if policy["PolicyArn"] in KNOWN_BAD_POLICIES:

+ findings.add(

+ Finding(

+ region,

+ "IAM_KNOWN_BAD_POLICY",

+ user["Arn"],

+ resource_details={

+ "comment": KNOWN_BAD_POLICIES[policy["PolicyArn"]],

+ "policy": policy["PolicyArn"],

+ },

+ )

+ )

for policy in user.get("UserPolicyList", []):

policy_doc = policy["PolicyDocument"]

- if is_admin_policy(policy_doc, location):

+ if is_admin_policy(policy_doc, location, findings, region):

reasons.append("Custom user policy: {}".format(policy["PolicyName"]))

findings.add(

Finding(
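`Policy.is_internet_accessible()` is CloudMapper's own helper and is not shown in this diff. The sketch below is a simplified, standalone illustration of what `IAM_ROLE_ALLOWS_ASSUMPTION_FROM_ANYWHERE` looks for: an `Allow` statement for an `sts:AssumeRole*` action whose principal is `*` or `{"AWS": "*"}`. It rests on several assumptions:

- It treats any `Condition` block as restricting access, so a wildcard trust policy guarded only by a weak condition would be missed.
- It ignores `NotPrincipal` and `NotAction`.
- It matches actions case-insensitively by prefix.

`known_bad_attachments` shows the `KNOWN_BAD_POLICIES` lookup reporting the `Arn` of whichever role, group, or user the policy is attached to. All function names here are hypothetical.

```python
# Simplified sketch, not CloudMapper's Policy class.
KNOWN_BAD_POLICIES = {
    "arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM":
        "Use AmazonSSMManagedInstanceCore instead and add privs as needed",
}


def make_list(value):
    """Wrap a scalar so single values and lists can be handled alike."""
    return value if isinstance(value, list) else [value]


def principal_is_anyone(principal):
    """True if a Principal element admits every AWS principal."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return "*" in make_list(principal.get("AWS", []))
    return False


def is_assume_action(action):
    """Match sts:AssumeRole*, sts:* and * (IAM action names are case-insensitive)."""
    action = action.lower()
    return action in ("*", "sts:*") or action.startswith("sts:assumerole")


def trust_policy_allows_anyone(policy_doc):
    """True if an unconditioned Allow lets any principal assume the role."""
    for stmt in make_list(policy_doc.get("Statement", [])):
        if stmt.get("Effect") != "Allow" or "Condition" in stmt:
            continue  # simplification: any Condition is assumed to restrict access
        if not any(is_assume_action(a) for a in make_list(stmt.get("Action", []))):
            continue
        if principal_is_anyone(stmt.get("Principal")):
            return True
    return False


def known_bad_attachments(entity, known_bad=KNOWN_BAD_POLICIES):
    """Findings for a role, group, or user entry from
    iam-get-account-authorization-details, keyed to that entity's own Arn."""
    return [
        {"resource": entity["Arn"], "policy": p["PolicyArn"],
         "comment": known_bad[p["PolicyArn"]]}
        for p in entity.get("AttachedManagedPolicies", [])
        if p["PolicyArn"] in known_bad
    ]


open_trust = {"Version": "2012-10-17",
              "Statement": [{"Effect": "Allow", "Principal": {"AWS": "*"},
                             "Action": "sts:AssumeRole"}]}
print(trust_policy_allows_anyone(open_trust))  # True
```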

diff --git a/tests/unit/test_audit.py b/tests/unit/test_audit.py

index cecba9e17..6390ee114 100644

--- a/tests/unit/test_audit.py

+++ b/tests/unit/test_audit.py

@@ -39,8 +39,11 @@ def test_audit(self):

"ECR_PUBLIC",

"SQS_PUBLIC",

"SNS_PUBLIC",

- "BAD_MFA_POLICY",

+ "IAM_BAD_MFA_POLICY",

"IAM_CUSTOM_POLICY_ALLOWS_ADMIN",

+ "IAM_KNOWN_BAD_POLICY",

+ "IAM_ROLE_ALLOWS_ASSUMPTION_FROM_ANYWHERE",

+ "EC2_OLD"

]

),

)

diff --git a/tests/unit/test_common.py b/tests/unit/test_common.py

index f4e37cd46..bf44ec733 100644

--- a/tests/unit/test_common.py

+++ b/tests/unit/test_common.py

@@ -39,7 +39,7 @@ def test_get_account_stats(self):

def test_get_collection_date(self):

account = get_account("demo")

- assert_equal("2019-05-07", get_collection_date(account))

+ assert_equal("2019-05-07T15:40:22+00:00", get_collection_date(account))

# def test_get_access_advisor_active_counts(self):

# account = get_account("demo")

diff --git a/tests/unit/test_find_unused.py b/tests/unit/test_find_unused.py

new file mode 100644

index 000000000..77d6e724f

--- /dev/null

+++ b/tests/unit/test_find_unused.py

@@ -0,0 +1,273 @@

+import sys

+import json

+from importlib import reload

+

+from unittest import TestCase, mock

+from unittest.mock import MagicMock

+from nose.tools import assert_equal, assert_true, assert_false

+

+

+class TestFindUnused(TestCase):

+ mock_account = type("account", (object,), {"name": "a"})

+ mock_region = type("region", (object,), {"account": mock_account, "name": "a"})

+

+ def test_find_unused_elastic_ips_empty(self):

+ def mocked_query_side_effect(account, query, region):

+ if query == "ec2-describe-addresses":

+ return {

+ "Addresses": [

+ {

+ "AllocationId": "eipalloc-1",

+ "AssociationId": "eipassoc-1",

+ "Domain": "vpc",

+ "NetworkInterfaceId": "eni-1",

+ "NetworkInterfaceOwnerId": "123456789012",

+ "PrivateIpAddress": "10.0.0.1",

+ "PublicIp": "1.2.3.4",

+ "PublicIpv4Pool": "amazon",

+ }

+ ]

+ }

+

+ # Clear cached module so we can mock stuff

+ if "shared.find_unused" in sys.modules:

+ del sys.modules["shared.find_unused"]

+

+ with mock.patch("shared.common.query_aws") as mock_query:

+ mock_query.side_effect = mocked_query_side_effect

+ from shared.find_unused import find_unused_elastic_ips

+

+ assert_equal(find_unused_elastic_ips(self.mock_region), [])

+

+ def test_find_unused_elastic_ips(self):

+ def mocked_query_side_effect(account, query, region):

+ if query == "ec2-describe-addresses":

+ return {

+ "Addresses": [

+ {

+ "AllocationId": "eipalloc-1",

+ "AssociationId": "eipassoc-1",

+ "Domain": "vpc",

+ "NetworkInterfaceId": "eni-1",

+ "NetworkInterfaceOwnerId": "123456789012",

+ "PrivateIpAddress": "10.0.0.1",

+ "PublicIp": "1.2.3.4",

+ "PublicIpv4Pool": "amazon",

+ },

+ {

+ "PublicIp": "2.3.4.5",

+ "Domain": "vpc",

+ "AllocationId": "eipalloc-2",

+ },

+ ]

+ }

+

+ # Clear cached module so we can mock stuff

+ if "shared.find_unused" in sys.modules:

+ del sys.modules["shared.find_unused"]

+

+ with mock.patch("shared.common.query_aws") as mock_query:

+ mock_query.side_effect = mocked_query_side_effect

+ from shared.find_unused import find_unused_elastic_ips

+

+ assert_equal(

+ find_unused_elastic_ips(self.mock_region),

+ [{"id": "eipalloc-2", "ip": "2.3.4.5"}],

+ )

+

+ def test_find_unused_volumes(self):

+ def mocked_query_side_effect(account, query, region):

+ if query == "ec2-describe-volumes":

+ return {

+ "Volumes": [

+ {

+ "Attachments": [

+ {

+ "AttachTime": "2019-03-21T21:03:04+00:00",

+ "DeleteOnTermination": True,

+ "Device": "/dev/xvda",

+ "InstanceId": "i-1234",

+ "State": "attached",

+ "VolumeId": "vol-1234",

+ }

+ ],

+ "AvailabilityZone": "us-east-1b",

+ "CreateTime": "2019-03-21T21:03:04.345000+00:00",

+ "Encrypted": False,

+ "Iops": 300,

+ "Size": 100,

+ "SnapshotId": "snap-1234",

+ "State": "in-use",

+ "VolumeId": "vol-1234",

+ "VolumeType": "gp2",

+ },

+ {

+ "Attachments": [

+ {

+ "AttachTime": "2019-03-21T21:03:04+00:00",

+ "DeleteOnTermination": True,

+ "Device": "/dev/xvda",

+ "InstanceId": "i-2222",

+ "State": "attached",

+ "VolumeId": "vol-2222",

+ }

+ ],

+ "AvailabilityZone": "us-east-1b",

+ "CreateTime": "2019-03-21T21:03:04.345000+00:00",

+ "Encrypted": False,

+ "Iops": 300,

+ "Size": 100,

+ "SnapshotId": "snap-2222",

+ "State": "available",

+ "VolumeId": "vol-2222",

+ "VolumeType": "gp2",

+ },

+ ]

+ }

+

+ # Clear cached module so we can mock stuff

+ if "shared.find_unused" in sys.modules:

+ del sys.modules["shared.find_unused"]

+

+ with mock.patch("shared.common.query_aws") as mock_query:

+ mock_query.side_effect = mocked_query_side_effect

+ from shared.find_unused import find_unused_volumes

+

+ assert_equal(find_unused_volumes(self.mock_region), [{"id": "vol-2222"}])

+

+ def test_find_unused_security_groups(self):

+ def mocked_query_side_effect(account, query, region):

+ if query == "ec2-describe-security-groups":

+ return {

+ "SecurityGroups": [

+ {

+ "IpPermissionsEgress": [

+ {

+ "IpProtocol": "-1",

+ "PrefixListIds": [],

+ "IpRanges": [{"CidrIp": "0.0.0.0/0"}],

+ "UserIdGroupPairs": [],

+ "Ipv6Ranges": [],

+ }

+ ],

+ "Description": "Public access",

+ "IpPermissions": [

+ {

+ "PrefixListIds": [],

+ "FromPort": 22,

+ "IpRanges": [],

+ "ToPort": 22,

+ "IpProtocol": "tcp",

+ "UserIdGroupPairs": [

+ {

+ "UserId": "123456789012",

+ "GroupId": "sg-00000002",

+ }

+ ],

+ "Ipv6Ranges": [],

+ },

+ {

+ "PrefixListIds": [],

+ "FromPort": 443,

+ "IpRanges": [{"CidrIp": "0.0.0.0/0"}],

+ "ToPort": 443,

+ "IpProtocol": "tcp",

+ "UserIdGroupPairs": [],

+ "Ipv6Ranges": [],

+ },

+ ],

+ "GroupName": "Public",

+ "VpcId": "vpc-12345678",

+ "OwnerId": "123456789012",

+ "GroupId": "sg-00000008",

+ }

+ ]

+ }

+ elif query == "ec2-describe-network-interfaces":

+ return {"NetworkInterfaces": []}

+

+ # Clear cached module so we can mock stuff

+ if "shared.find_unused" in sys.modules:

+ del sys.modules["shared.find_unused"]

+

+ with mock.patch("shared.common.query_aws") as mock_query:

+ mock_query.side_effect = mocked_query_side_effect

+ from shared.find_unused import find_unused_security_groups

+

+ assert_equal(

+ find_unused_security_groups(self.mock_region),

+ [

+ {

+ "description": "Public access",

+ "id": "sg-00000008",

+ "name": "Public",

+ }

+ ],

+ )

+

+ def test_find_unused_security_groups(self):

+ def mocked_query_side_effect(account, query, region):

+ if query == "ec2-describe-network-interfaces":

+ return {

+ "NetworkInterfaces": [

+ {

+ "Association": {

+ "IpOwnerId": "amazon",

+ "PublicDnsName": "ec2-3-80-3-41.compute-1.amazonaws.com",

+ "PublicIp": "3.80.3.41",

+ },

+ "Attachment": {

+ "AttachTime": "2018-11-27T03:36:34+00:00",

+ "AttachmentId": "eni-attach-08ac3da5d33fc7a02",

+ "DeleteOnTermination": False,

+ "DeviceIndex": 1,

+ "InstanceOwnerId": "501673713797",

+ "Status": "attached",

+ },

+ "AvailabilityZone": "us-east-1f",

+ "Description": "arn:aws:ecs:us-east-1:653711331788:attachment/ed8fed01-82d0-4bf6-86cf-fe3115c23ab8",

+ "Groups": [

+ {"GroupId": "sg-00000008", "GroupName": "Public"}

+ ],

+ "InterfaceType": "interface",

+ "Ipv6Addresses": [],

+ "MacAddress": "16:2f:d0:d6:ed:28",

+ "NetworkInterfaceId": "eni-00000001",

+ "OwnerId": "653711331788",

+ "PrivateDnsName": "ip-172-31-48-168.ec2.internal",

+ "PrivateIpAddress": "172.31.48.168",

+ "PrivateIpAddresses": [

+ {

+ "Association": {

+ "IpOwnerId": "amazon",

+ "PublicDnsName": "ec2-3-80-3-41.compute-1.amazonaws.com",

+ "PublicIp": "3.80.3.41",

+ },

+ "Primary": True,

+ "PrivateDnsName": "ip-172-31-48-168.ec2.internal",

+ "PrivateIpAddress": "172.31.48.168",

+ }

+ ],

+ "RequesterId": "578734482556",

+ "RequesterManaged": True,

+ "SourceDestCheck": True,

+ "Status": "available",

+ "SubnetId": "subnet-00000001",

+ "TagSet": [],

+ "VpcId": "vpc-12345678",

+ }

+ ]

+ }

+

+ # Drop the cached module so the import below picks up the patched query_aws

+ if "shared.find_unused" in sys.modules:

+ del sys.modules["shared.find_unused"]

+

+ with mock.patch("shared.common.query_aws") as mock_query:

+ mock_query.side_effect = mocked_query_side_effect

+ from shared.find_unused import find_unused_network_interfaces

+

+ assert_equal(

+ find_unused_network_interfaces(self.mock_region),

+ [{"id": "eni-00000001"}],

+ )
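The two tests above pin down behaviour without showing the functions under test. The following is a hypothetical reading of that behaviour, not the code in `shared/find_unused.py`: it assumes an ENI counts as unused when its top-level `Status` is `"available"`, and that a security group counts as unused when no ENI lists it under `Groups`. The helper names, and passing raw `describe-security-groups` / `describe-network-interfaces` responses in directly instead of calling `query_aws`, are assumptions made to keep the sketch self-contained.

```python
from typing import Any, Dict, List

# Hypothetical sketch; not the implementation in shared/find_unused.py.


def unused_network_interfaces(describe_enis: Dict[str, Any]) -> List[Dict[str, str]]:
    """Return ENIs whose top-level Status is "available" (assumed to mean unused)."""
    return [
        {"id": eni["NetworkInterfaceId"]}
        for eni in describe_enis.get("NetworkInterfaces", [])
        if eni.get("Status") == "available"
    ]


def unused_security_groups(
    describe_sgs: Dict[str, Any],
    describe_enis: Dict[str, Any],
) -> List[Dict[str, str]]:
    """Return security groups that no ENI references (assumed definition of unused)."""
    # Collect every group ID attached to any network interface.
    in_use = {
        group["GroupId"]
        for eni in describe_enis.get("NetworkInterfaces", [])
        for group in eni.get("Groups", [])
    }
    return [
        {
            "description": sg.get("Description", ""),
            "id": sg["GroupId"],
            "name": sg.get("GroupName", ""),
        }
        for sg in describe_sgs.get("SecurityGroups", [])
        if sg["GroupId"] not in in_use
    ]
```

Fed the ENI from the second test, `unused_network_interfaces` returns `[{"id": "eni-00000001"}]`; with an empty `NetworkInterfaces` list, as in the first test's mock, a group `sg-00000008` named `Public` is reported as unused.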

diff --git a/vendor_accounts.yaml b/vendor_accounts.yaml

index 7e25882e6..b311e6f3f 100644

--- a/vendor_accounts.yaml

+++ b/vendor_accounts.yaml

@@ -11,6 +11,8 @@

# Note the `name` is referenced in ./web/style.json which then references an

# image file in ./web/icons/logos/ for these vendors to display in the

# web-of-trust view.

+#

+# Some of these were originally found in https://github.com/dagrz/aws_pwn/blob/master/miscellanea/integrations.txt

- name: Cloudhealth

source: 'https://github.com/mozilla/security/blob/master/operations/cloudformation-templates/cloudhealth_iam_role.json'

@@ -67,8 +69,6 @@

type: 'aws'

source: 'https://docs.aws.amazon.com/awsaccountbilling/latest/aboutv2/billing-getting-started.html#step-2'

accounts: ['386209384616']

-

- # The following are from https://github.com/dagrz/aws_pwn/blob/master/miscellanea/integrations.txt and still need logos

- name: 'dome9'

source: 'http://support.dome9.com/knowledgebase/articles/796419-convert-dome9-aws-account-connection-from-iam-user'

accounts: ['634729597623']

@@ -148,4 +148,10 @@

Source: 'https://onelogin.service-now.com/kb_view_customer.do?sysparm_article=KB0010344'

- name: 'nOps'

accounts: ['202279780353']

- source: 'https://help.nops.io/manual_setup'

\ No newline at end of file

+ source: 'https://help.nops.io/manual_setup'

+- name: 'Fivetran'

+ source: 'https://fivetran.com/docs/logs/cloudwatch/setup-guide'

+ accounts: ['834469178297']

+- name: 'Databricks'

+ source: 'https://docs.databricks.com/administration-guide/account-settings/aws-accounts.html'

+ accounts: ['414351767826']

\ No newline at end of file
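Because the web-of-trust view keys on these entries, a small lint pass could catch malformed ones before they reach `style.json`. This is a hypothetical helper, not part of CloudMapper. It assumes the file has already been parsed into a list of dicts (for example with PyYAML's `yaml.safe_load`) and that every entry is meant to carry lowercase `name`, `source` and `accounts` keys, as the entries in this diff do. It also checks that each account is a 12-digit AWS account ID and that no ID is claimed by two vendors. An entry that only has the capitalized `Source:` key seen in the last hunk's context would be reported as missing `source`.

```python
from typing import Any, Dict, List

# Hypothetical lint helper; the required keys are an assumption based on this file.
REQUIRED_KEYS = ("name", "source", "accounts")


def check_vendor_entries(entries: List[Dict[str, Any]]) -> List[str]:
    """Return human-readable problems found in parsed vendor_accounts.yaml entries."""
    problems: List[str] = []
    owner_of: Dict[str, str] = {}  # account ID -> first vendor name that listed it
    for index, entry in enumerate(entries):
        label = str(entry.get("name", f"entry #{index}"))
        for key in REQUIRED_KEYS:
            if key not in entry:
                problems.append(f"{label}: missing '{key}'")
        for raw in entry.get("accounts", []):
            account = str(raw)  # also check IDs the YAML parser turned into ints
            if len(account) != 12 or not account.isdigit():
                problems.append(f"{label}: account {account!r} is not a 12-digit ID")
            elif owner_of.setdefault(account, label) != label:
                # setdefault returns the earlier owner when the ID was already seen
                problems.append(f"{label}: account {account} also listed under {owner_of[account]}")
    return problems
```

An empty result means every entry passed; each string otherwise names the vendor and the specific problem.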

diff --git a/web/icons/logos/databricks.png b/web/icons/logos/databricks.png

new file mode 100644

index 000000000..5c0c6bc41

Binary files /dev/null and b/web/icons/logos/databricks.png differ

diff --git a/web/icons/logos/fivetran.png b/web/icons/logos/fivetran.png

new file mode 100644

index 000000000..05498df97

Binary files /dev/null and b/web/icons/logos/fivetran.png differ

diff --git a/web/js/cloudmap.js b/web/js/cloudmap.js

index 86dab1a96..a586bc88b 100644

--- a/web/js/cloudmap.js

+++ b/web/js/cloudmap.js

@@ -51,6 +51,11 @@ $(window).on('load', function(){

},

style: stylefile[0]

});

+ })

+ .fail(function(e) {

+ if (e.status == 404) {

+ alert("Failed to fetch data!\nPlease run cloudmapper.py prepare before using webserver");

+ }

});

}); // Page loaded

diff --git a/web/style.json b/web/style.json

index 10c25fc4f..afed45eae 100644

--- a/web/style.json

+++ b/web/style.json

@@ -745,6 +745,32 @@

"height": 100

}

},

+ {

+ "selector": "[type = \"Fivetran\"]",

+

+ "css": {

+ "label": "",

+ "background-opacity": 0,

+ "background-image": "./icons/logos/fivetran.png",

+ "background-fit": "contain",

+ "background-clip": "none",

+ "width": 150,

+ "height": 100

+ }

+ },

+ {

+ "selector": "[type = \"Databricks\"]",

+

+ "css": {

+ "label": "",

+ "background-opacity": 0,

+ "background-image": "./icons/logos/databricks.png",

+ "background-fit": "contain",

+ "background-clip": "none",

+ "width": 150,

+ "height": 100

+ }

+ },

{

"selector": "[type = \"Lacework\"]",

+
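The comment at the top of `vendor_accounts.yaml` says each vendor `name` is matched by a selector in `./web/style.json`, which in turn points at a logo under `./web/icons/logos/`, so adding a vendor touches three files, as this PR does for Fivetran and Databricks. A hypothetical cross-check is sketched below. It is not part of CloudMapper, and it assumes `style.json` parses to a JSON array of `{"selector": ..., "css": {...}}` objects (as the hunk suggests) and that vendor selectors use exactly the `[type = "Name"]` form shown above.

```python
import json
import os
import re
from typing import Any, Dict, Iterable, List, Optional

# Matches selectors written like [type = "Fivetran"], the form used in this diff.
VENDOR_SELECTOR = re.compile(r'^\[type = "(?P<name>[^"]+)"\]$')


def load_style(path: str) -> List[Dict[str, Any]]:
    """Parse style.json; assumed to be a JSON array of selector/css objects."""
    with open(path) as handle:
        return json.load(handle)


def missing_vendor_logos(
    vendor_names: Iterable[str],
    style_entries: List[Dict[str, Any]],
    web_dir: str,
) -> List[str]:
    """Report vendors with no [type = "..."] selector, or whose logo file is missing."""
    images: Dict[str, Optional[str]] = {}
    for entry in style_entries:
        match = VENDOR_SELECTOR.match(entry.get("selector", ""))
        if match:
            images[match.group("name")] = entry.get("css", {}).get("background-image")

    problems: List[str] = []
    for name in vendor_names:
        image = images.get(name)
        if image is None:
            problems.append(f"{name}: no style.json selector with a background-image")
        elif not os.path.isfile(os.path.join(web_dir, image)):
            # Paths like ./icons/logos/fivetran.png are assumed relative to web/.
            problems.append(f"{name}: {image} not found under {web_dir}")
    return problems
```

Run from the repository root, a call such as `missing_vendor_logos([v["name"] for v in vendors], load_style("web/style.json"), "web")`, where `vendors` is the parsed YAML list, would list any vendor still lacking a selector or logo.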
