# Tutorial: Connecting a Locally Deployed LLM

# Install a deployment platform (Ollama/Xinference/GPUStack)

Deploy the platform you want to connect by following its own guide. Note that PandaWiki only supports deployment on Linux.

[Ollama installation guide](https://docs.ollama.com/quickstart)

[Xinference installation guide](https://inference.readthedocs.io/zh-cn/v1.2.0/getting_started/installation.html)

[GPUStack installation guide](https://docs.gpustack.ai/latest/quickstart/)

# Confirm the model platform is installed correctly

## Set the platform's listening IP to 0.0.0.0

### ollama

1. Run `systemctl edit ollama.service` to edit the systemd service file.

2. Under the `[Service]` section, add an `Environment` line:

```ini
[Service]
Environment="OLLAMA_HOST=0.0.0.0:11434"
```

3. Save and exit the editor.

4. Reload systemd and restart Ollama:

```bash
systemctl daemon-reload
systemctl restart ollama
```
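After the restart, one way to confirm that Ollama is no longer bound only to the loopback address is to inspect the listening sockets. The sketch below assumes the `ss` utility (from iproute2) is installed; `listens_on_all` is a hypothetical helper name, and the three wildcard forms it accepts are an assumption about how `ss -ltn` prints such a bind.

```bash
# Hypothetical helper: reads `ss -ltn` output on stdin and succeeds when
# some socket is bound to a wildcard address on the given port.
listens_on_all() {
    port=$1
    awk -v port="$port" '
        # Column 4 of `ss -ltn` is the local address:port pair.
        $4 == ("0.0.0.0:" port) || $4 == ("*:" port) || $4 == ("[::]:" port) { found = 1 }
        END { exit !found }
    '
}
```

A possible invocation is `ss -ltn | listens_on_all 11434 && echo "listening on all interfaces"`.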

### xinference

Add the `-H 0.0.0.0` option when starting Xinference:

```bash
xinference-local -H 0.0.0.0
```

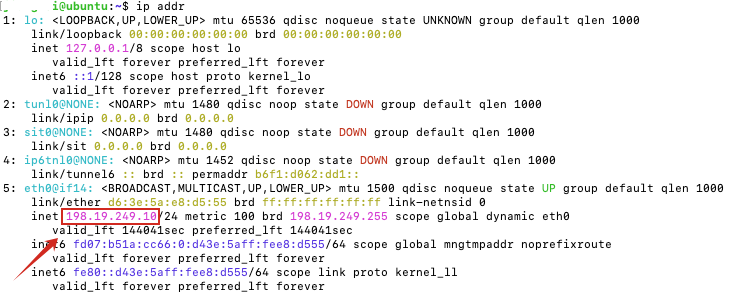

## Get the IP address of the deployment machine

Run `ip addr` on the command line. The address is usually the one that follows `inet` under the `eth0` or `wlan0` interface.
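If the full `ip addr` output is hard to scan, the one-line form `ip -4 -o addr show` can be filtered instead. This is a minimal sketch: `list_ipv4` is a hypothetical helper name, and it assumes the usual column layout of that command (interface name in column 2, `inet` in column 3, address with prefix length in column 4).

```bash
# Hypothetical helper: reads `ip -4 -o addr show` output on stdin and prints
# "<interface> <address>" for every non-loopback IPv4 address.
list_ipv4() {
    awk '$2 != "lo" && $3 == "inet" {
        split($4, parts, "/")   # drop the /prefix-length suffix
        print $2, parts[1]
    }'
}
```

A possible invocation is `ip -4 -o addr show | list_ipv4`.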

## Get the model list

Replace the port in the command with your platform's default port: 11434 for Ollama, 9997 for Xinference, or 80 for GPUStack.

```bash
curl -X GET \
  "http://<deployment-machine-ip>:<port>/v1/models" \
  -H "Content-Type: application/json"
```

Example response:

```json
{
  "object": "list",
  "data": [
    {
      "id": "gemma3:latest",
      "object": "model",
      "created": 1755516177,
      "owned_by": "library"
    }
  ]
}
```
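The port lookup and the model ids in the response can be scripted. The sketch below is only illustrative: `default_port` and `model_ids` are hypothetical helper names, the ports are the defaults listed in this tutorial, and the id extraction is a simple pattern match rather than a real JSON parser.

```bash
# Default ports as listed in this tutorial; adjust if you changed them.
default_port() {
    case $1 in
        ollama)     echo 11434 ;;
        xinference) echo 9997 ;;
        gpustack)   echo 80 ;;
        *)          echo "unknown platform: $1" >&2; return 1 ;;
    esac
}

# Hypothetical helper: prints every "id" value found in a /v1/models
# response read from stdin. Pattern-based, so it is not a real JSON parser.
model_ids() {
    grep -o '"id": *"[^"]*"' | sed 's/^"id": *"\(.*\)"$/\1/'
}
```

A possible invocation, with the IP filled in, is `curl -s "http://<deployment-machine-ip>:$(default_port ollama)/v1/models" | model_ids`.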

## Check that the model works

Chat model:

```bash
curl -X POST \
  "http://<deployment-machine-ip>:<port>/v1/chat/completions" \
  -H "Authorization: Bearer <your API key; remove this line if none is set>" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "<id of the model you want to configure, from the model list>",
    "messages": [{"role": "user", "content": "Hello"}]
  }'
```

Embedding model:

```bash
curl -X POST \
  "http://<deployment-machine-ip>:<port>/v1/embeddings" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer <your API key; remove this line if none is set>" \
  -d '{
    "model": "<id of the model you want to configure, from the model list>",
    "input": "hello, nice to meet you, and you?",
    "encoding_format": "float"
  }'
```

Rerank model:

```bash
curl -X POST \
  "http://<deployment-machine-ip>:<port>/v1/rerank" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer <your API key; remove this line if none is set>" \
  -d '{
    "model": "<id of the model you want to configure, from the model list>",
    "documents": ["hello"],
    "query": "test"
  }'
```
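Because the `Authorization` header has to be dropped when no API key is set, it can help to wrap the chat check in a small function that adds the header conditionally. This is a minimal sketch: `chat_payload` and `check_chat` are hypothetical helper names, and the model id is assumed to contain no characters that need JSON escaping.

```bash
# Hypothetical helper: builds the minimal chat request body for a model id.
chat_payload() {
    printf '{"model": "%s", "messages": [{"role": "user", "content": "Hello"}]}\n' "$1"
}

# Hypothetical helper: POSTs the payload to /v1/chat/completions and adds the
# Authorization header only when a non-empty API key is passed.
check_chat() {
    base_url=$1
    model=$2
    api_key=${3:-}
    set -- -sS -X POST "$base_url/v1/chat/completions" \
        -H "Content-Type: application/json"
    if [ -n "$api_key" ]; then
        set -- "$@" -H "Authorization: Bearer $api_key"
    fi
    curl "$@" -d "$(chat_payload "$model")"
}
```

A possible invocation without a key is `check_chat "http://<deployment-machine-ip>:<port>" "gemma3:latest"`; pass the key as a third argument when one is configured.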

# Configure the model

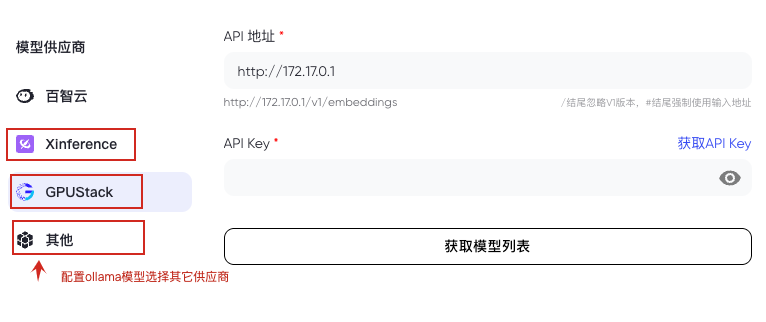

## Select a provider

**Chat model**

**Embedding/rerank model**

Note: Ollama does not support rerank models.

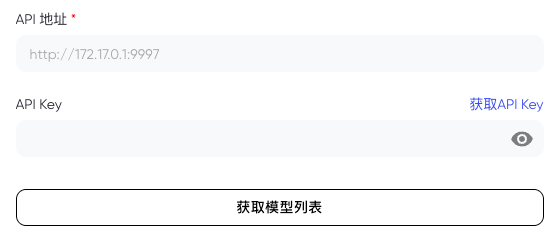

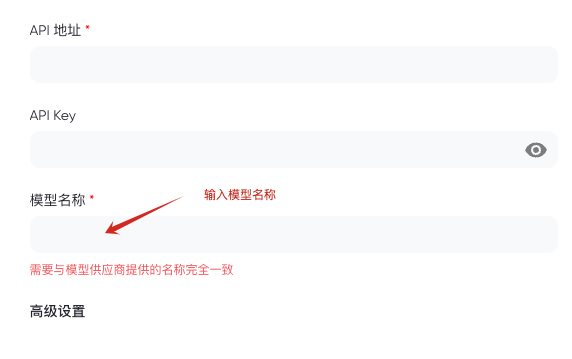

## Enter the API address and API key

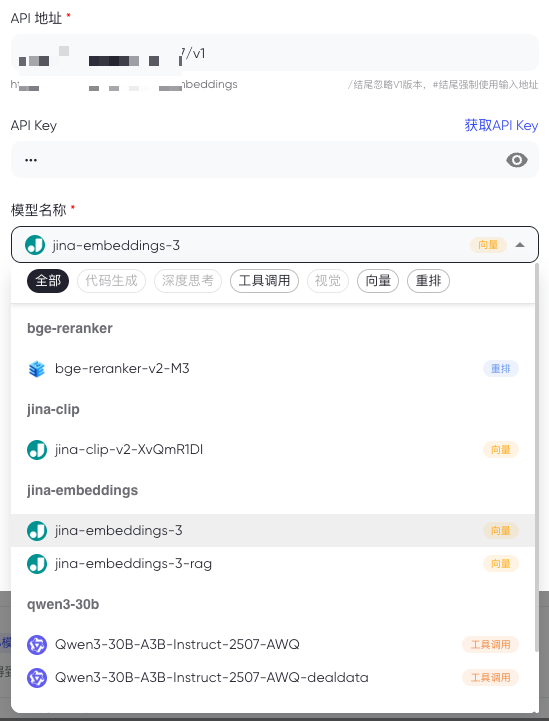

1. The API address is `http://<ip used in curl>:<port used in curl>`.

2. If you select the "Other" provider, you also need to enter the model name you used in the earlier curl commands.
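The API address in step 1 is the URL from the curl commands with the `/v1/...` path removed. As an illustration only, the hypothetical helper `api_base` below reduces a full endpoint URL to that form; it assumes an `http` or `https` URL.

```bash
# Hypothetical helper: reduces a full endpoint URL to scheme://host:port.
api_base() {
    printf '%s\n' "$1" | sed -E 's#^(https?://[^/]+).*#\1#'
}
```

For example, `api_base "http://192.168.1.10:11434/v1/models"` prints `http://192.168.1.10:11434`, where the IP is a made-up example address.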

## Select the model

Note: for embedding and rerank, you can only select models listed under the corresponding label.

## Confirm and save

After a successful configuration, a "修改成功" (modified successfully) message pops up.

# What if configuration still fails after following these steps?

Please open an issue with a screenshot of the error, and the developers will respond promptly.
