
Errors when using Meshroom to make photogrammetry model of small (1 cm long) objects #2591

Comments

No, I do not think I can get any closer. I was already getting fairly close to the minimum possible focal distance and when I tried to get closer the camera would fail to take the picture.

When I bring the one with the decent point cloud up it says the result mesh has only 99 triangles. By contrast, the one with the distorted model produces a mesh of about 326k triangles.

This is the one that failed to work. This is the revised version of the first specimen, which produced a near-empty model. I tried opening the other project that produced an empty model, but somehow it got overwritten and is now blank.

Here are some cropped-in views of the subject at full resolution. These are the same images I showed in the views with the three-dots icon.

Yes. How can I best send them to you?

Would focus stacking be an option?

Could you put them on Google Drive (or Dropbox) and share the folder?

I am not sure. The specimen is in focus with the camera with the current set of images. I have the depth of field turned up to maximum for each photo.

Okay, I have a folder together. Where do I need to share it?

Maybe you could paint your object to avoid flare.

I'm not allowed to. Boss doesn't want to for fear it will damage the specimen.


And what about structured lighting?

Just paste the link here.

Here is the link to the folder. I am unsure what you mean by structured lighting. Do you mean a 3D surface scanner? I tried experimenting with one but didn't get very good results; the type of scanner I had available didn't seem able to scan such a small specimen.

For the first dataset Meshroom produced a model for me. I only did the SfM step for the second dataset, but it looked fine. Can you check the log in the Meshing node? Are there any warnings in it? What I would try is reconstructing something else using the exact same settings you used for the specimens. It doesn't have to be very detailed (30 photos will do). If that doesn't work, try again with the default settings. If it works with default settings, you could then change settings one at a time until it no longer works.
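The "change settings one at a time" procedure suggested above can be sketched as a small loop. This is only an illustration: `works` is a hypothetical callback that would run a reconstruction with the given settings and report whether a usable mesh came out. It is not a Meshroom API, and the setting names in the demo are placeholders.

```python
from typing import Any, Callable, Dict, Optional


def first_breaking_change(
    defaults: Dict[str, Any],
    custom: Dict[str, Any],
    works: Callable[[Dict[str, Any]], bool],
) -> Optional[str]:
    """Apply custom settings one at a time on top of the defaults.

    Returns the name of the first setting after which `works` reports
    failure, or None if every custom setting can be applied safely.
    """
    current = dict(defaults)
    if not works(current):
        # If the defaults already fail, the custom settings are not the cause.
        raise ValueError("reconstruction fails even with default settings")
    for name, value in custom.items():
        current[name] = value
        if not works(current):
            return name
    return None


if __name__ == "__main__":
    # Placeholder settings and a fake `works` that fails when "b" is 9.
    defaults = {"a": 1, "b": 2, "c": 3}
    custom = {"a": 5, "b": 9, "c": 7}
    print(first_breaking_change(defaults, custom, lambda s: s["b"] != 9))
```

Because the changes accumulate, this finds the first setting that breaks the run in combination with the earlier ones. Resetting `current` to the defaults before each change would test every setting in isolation instead.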


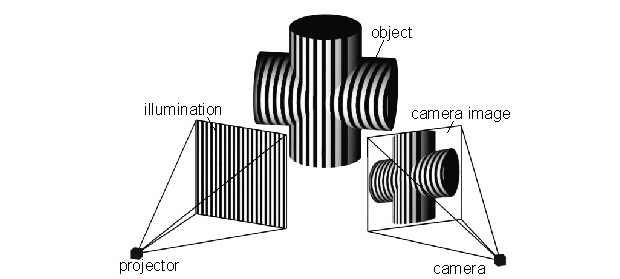

@megaraptor1 I am thinking of a light pattern projected onto the model to increase the feature count. The light has to be fixed relative to the model, so it would have to be mounted on the turntable.

If that is the case I will have to try it again and see if it works. I don't think it will necessarily produce different results, but if you got something, maybe it was just random error. Did you use any alternative settings for Meshroom I need to be aware of, or did you just use the default settings?

I have tried doing this with photographs of some other, larger objects and those generally have worked. I can try again with something small if this next attempt at meshing doesn't go well. I'm wondering if it has something to do with how close the object is to the camera. The window showing what Meshroom had detected seems to show the tooth in yellows and reds, even though it was close enough for the autofocus to properly focus on it.

I am still a little confused as to what you mean. Would that be something like using a light diffuser in order to prevent there from being any spots of over-illumination or flare? What kind of device would produce this structured lighting? Some of my colleagues suggested that maybe I should use small dabs of colored Play-Doh or print out a version of the photogrammetry guide with unique, colored marks on the paper in order to increase the number of unique features to link images up. Is that like what you're suggesting? I've also been wondering if printing out the photogrammetry backboard at a high resolution so the lines are much sharper might help, though I am much less confident in this.

Okay, so I tried it again and I got the same result. I didn't change anything in the ImageMatching node, just to keep things consistent. What I did was take the first 70 or so photos from the Specimen 2 folder in Dropbox and try to make a mesh out of them. The StructureFromMotion output looked relatively decent, though it had that issue where the image is mirrored on both sides. That is almost certainly a result of not using the entire image set; no matter, a great image is not needed here, just a replicable one. This is what I get for display features. I also checked the pipeline and there were no errors anywhere that caused a loss of information or a premature truncation of the entire process. However, once again I got a non-functional model. I end up only getting this little scrap of 151 triangles. No clue why this is turning out this way. It cannot be the photos, as Meshroom produced a model on your end. It also cannot be due to the computer going to sleep in the middle of the meshing process and disrupting Meshroom; I was on the computer the entire time and it never went into sleep mode. Could it possibly be saving the mesh somewhere else?

I managed to replicate your issue (success!). When I first tried your dataset I had the 'Downscale' value in the DepthMap node set to 4 because my video card couldn't handle the default value of 2. Now that I have a better video card I was able to run with the default downscale, and the result was a mesh with only a handful of triangles. I also tried meshing the second dataset: it failed to produce a mesh when the downscale value was 2 (a few triangles) or 4 (failed completely), but it did work with a downscale value of 8. I guess I should have tried that out earlier instead of assuming it would just work. I have no idea why a lower downscale value causes the meshing step to fail in this way. Luckily, using a downscale of 4 or 8 seems to produce acceptable results (although that is for you to decide).
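For a sense of scale, here is a rough sketch of the resolution the depth maps would be computed at for each downscale value. It rests on two assumptions that are not confirmed in this thread: that the Downscale parameter divides each image dimension by the factor, and that the photos are 6960 x 4640 pixels (the full-resolution output of a Canon EOS 90D). It says nothing about why the lower values fail.

```python
def downscaled_size(width: int, height: int, factor: int) -> tuple:
    """Image size after dividing both dimensions by the downscale factor."""
    return width // factor, height // factor


# Assumed full-resolution photo size; replace with the real image dimensions.
FULL_WIDTH, FULL_HEIGHT = 6960, 4640

for factor in (2, 4, 8):
    w, h = downscaled_size(FULL_WIDTH, FULL_HEIGHT, factor)
    print(f"downscale {factor}: {w} x {h} = {w * h / 1e6:.2f} MP")
```

Each doubling of the factor cuts the pixel count to a quarter, so downscale 8 works on one sixteenth of the pixels that downscale 2 does.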


I'll have to try running it again and see if it works.

Okay, so I tried it again. All settings were left at their defaults except that the downscale factor was set to 8 for the DepthMap node. The data used was the Specimen1 dataset in Dropbox that @msanta used to create their mesh. The process still did not work. The StructureFromMotion map looked okay (but see below), but the final texturing map was basically flat and the model looked like a splattered tomato. I tried setting the downscale factor to 8 for the Texturing node, in case that is where the problem was, but it did not change the resulting model. Again, no other nodes were altered; this is straight out-of-the-box settings aside from the downscale factor. Looking at the StructureFromMotion model, what's strange is that the model looked inverted from what the specimen should look like, although it looks like a few images may have been reconstructed the right way up (the brown points on the side facing the camera). It seems like this may be related to what the problem is.

No idea why the point cloud is inverted. Has it always been inverted? There is an issue about a mesh getting inverted (#1112) but there was no solution to it. I tried the 2nd image set in COLMAP and it also produced an inverted point cloud. Very strange.

I don't think so. It's been right side up in quite a few when looking at the StructureFromMotion node in the past. I've seen a few where it doesn't appear to recognize that all of the photos are of the same object and makes two images mirrored across the flat backdrop. What's weird is that the models are often not consistent when I try to replicate things. Sometimes the same set of photos will produce two slightly different models, or one will hit an error and stop, but if I tell it to start again it compiles without issue.

So I tried running a mesh of a larger specimen, a roughly 10 cm long jaw, using a small stack of pictures taken from a phone camera in my version of Meshroom. It produced a decent model, about as much as can be expected given the quality of the pictures. This seems to suggest the problem is related to either the camera type or possibly the size of the object being photographed. However, selecting the three dots still showed the larger specimen in red.

Okay, so I tried some more experimentation with the specimens. Specifically I have tried a couple of new things…

It still is not giving me good results. I tried with an initial sample set of 72 images, but this still resulted in an inverted mirror image in the StructureFromMotion model, and when I went to see what the resulting model looked like I got a model of 6 triangles. However, all 72 cameras were reconstructed, with none left unidentified in the model (though given that an inverted image was produced, I doubt how accurate they all are). In fact, I suspect quite a few are wrong: looking at the cameras, there are a significant number of doubled cameras when they should be largely non-overlapping and arranged in an arc. I did a TextureDownscale of 4 and it still produced a model with a triangle count of 6. I also tried adjusting the VocTree:Max Descriptors and VocTree:Nb Matches settings in the ImageMatching node, just to see if that did anything, but I couldn't get them to open up or work. Clicking on them did not give me an option to change anything.

What I wonder is whether the reason Meshroom isn't producing a coherent image is related to the inverted image problem. I've seen this photogrammetry guide suggest that using two dissimilar bases will result in the program editing out the bases and keeping only shared features. What if the reason it is only producing a few triangles is that the program is removing everything that is not shared, and because those inverted images are being produced the specimen is not consistent between all photos?

What's also very strange is that when I reconstruct the image using just a single rotation, it reconstructs the cameras in a weird parabola shape. I know for a fact the camera was not in these positions: I had the specimen on a turntable and took pictures of it with the camera in a fixed position. Even more interestingly, even reconstructing the specimen with these 35 images in a single rotation creates a significant ghost image where there should be none. Compiling this image, I get weird results. Meshroom does an okay job at reconstructing the photogrammetry turntable (but the letters and numbers don't come out clean), but the actual specimen is a complete mess. Checking Display Features, a lot of the image is in red. But again, the image itself is in focus... I am using all default settings for this model.

@msanta, did you have any other setting set differently from the installation defaults when you ran the program and produced that image you showed previously? I am unable to replicate your results and I am still not sure what is going wrong. Given that @msanta was able to produce a decent model using the images taken previously, it almost seems like something must be set wrong on my end. However, I am unsure what that would be, as I am using all program defaults straight out of the box.

@msanta, something else I am wondering is whether you were able to produce your image with the camera intrinsics added. I noticed that even when using that small dataset with a single rotation, many of the points under Display Features were red or yellow. I am wondering if the program is using inbuilt focal length data or something and that is why it is failing to recognize features, though I would assume the camera metadata is still on the photos I had forwarded. These are the exact camera intrinsics I was using, imported directly as metadata from the images.

Update: I tried compiling the photos with the options "Cross Matching", "Feature Matching" and "Match from Known Camera Poses" turned on in the FeatureMatching node. The model did slightly better (there was no parabolic reconstruction of cameras), but some of the cameras were still incorrectly rotated and "doubled" and some of the points were still inverted. Additionally, no usable model was produced: I ended up getting a model of only 20 triangles.

Update 2: I wondered if a lack of disk space on my computer could be causing model compilation to fail. I cleaned out a lot of my hard drive and tried again. However, the model still failed to compile correctly. I noticed the specimen was being reconstructed incorrectly in the StructureFromMotion node, and the resulting model was incorrect. Strangely, the turntable was reconstructed relatively well. I tried running the same images in a trial version of 3D Zephyr and the model was compiled just fine.

I didn't change any settings from the defaults. Here are the camera intrinsics: The only differences between your setup and mine would be the OS (Windows vs Linux) and different hardware. I suppose I could also try this on Windows to confirm if that makes a difference. For what it is worth, I have also tried these images in RealityCapture and got a good result.

@msanta Okay, thank you for letting me know. I assume it must be something about the difference between Linux and Windows. Not sure what else could be causing such a difference with the default settings.

I have been having trouble getting Meshroom to properly compile images into a 3D model using photogrammetry. I have been taking pictures of several specimens using a Canon EOS 90D with a macro lens (so focal length and lens information are available for all photos). These specimens are fairly small; the largest is about 1.2 cm in diameter. There are about 150 pictures taken in several rings all around the specimen at different orientation angles (see below for reconstructed cameras). The specimen stays in the same position on the turntable in every image and is merely rotated by about 8-9 degrees between images. The specimen is also on a background with unique, non-repeating symbols or imagery to make image matching easier. The pictures are all very crisp and it is possible to make out details on them very easily, so in theory it should be relatively straightforward for Meshroom to match the images.
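As a quick sanity check on the capture plan described above, the arithmetic can be written out. This is a minimal sketch using only the figures quoted in this post (a step of 8-9 degrees and roughly 150 photos); the function names are made up for illustration.

```python
import math


def shots_per_ring(step_deg: float) -> int:
    """Photos needed to cover one full turntable rotation at a given step."""
    return math.ceil(360 / step_deg)


def rings_covered(total_photos: int, step_deg: float) -> float:
    """How many full rings a photo count corresponds to at that step."""
    return total_photos / shots_per_ring(step_deg)


for step in (8, 9):
    print(
        f"{step} deg step: {shots_per_ring(step)} photos per ring, "
        f"{rings_covered(150, step):.2f} rings from 150 photos"
    )
```

At these step sizes, 150 photos correspond to between three and four full rings, which is consistent with "several rings".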

Despite this, Meshroom has consistently been unable to produce models of these specimens. I have tried taking photos of these specimens on two different occasions, and have tried to create models for multiple specimens, but without success.

On the first attempt, despite the specimen being in focus and sharply defined in each photo, Meshroom simply failed to reconstruct cameras for about half of the total images. This led to a lot of gaps in the model and a really distorted final product.

I tried taking pictures of the same specimen again from a different orientation and I did get all of the cameras (see picture below).

In practice, the StructureFromMotion model for this attempt looked better, but when I opened the resulting mesh (which I got out of the Texturing subfolder of the MeshroomCache folder) in MeshLab, it was almost completely empty and only had a few triangles. Additionally, I get an error saying "the following textures have not been loaded: texture_1001.exr", which suggests a texture file was not output from Meshroom.

I tried this again with a second specimen and got similar results. Again, in this case all 164 cameras were accurately reconstructed (see image) and the StructureFromMotion model would suggest the model would turn out relatively okay.

But once the process was finished and I opened the resulting model in MeshLab, there was nothing there but a few triangles.
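A near-empty mesh can also be confirmed without opening MeshLab by counting the face records in the exported OBJ file. The following is a minimal sketch that assumes the mesh is a plain-text Wavefront OBJ; the file path in the usage note is a placeholder, not a path taken from this project.

```python
from typing import Iterable, Tuple


def count_obj_elements(lines: Iterable[str]) -> Tuple[int, int, int]:
    """Count vertices, faces and triangles in Wavefront OBJ text.

    A face with n vertices is counted as n - 2 triangles (fan triangulation).
    """
    vertices = faces = triangles = 0
    for line in lines:
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices += 1
        elif parts[0] == "f":
            faces += 1
            triangles += max(len(parts) - 3, 0)
    return vertices, faces, triangles


if __name__ == "__main__":
    # Tiny in-memory example: one triangle and one quad.
    sample = [
        "# sample mesh",
        "v 0 0 0",
        "v 1 0 0",
        "v 1 1 0",
        "v 0 1 0",
        "f 1 2 3",
        "f 1/1 2/2 3/3 4/4",
    ]
    print(count_obj_elements(sample))
```

To check a real file, pass an open file handle, for example `with open("path/to/mesh.obj") as f: print(count_obj_elements(f))`. A count in the tens or low hundreds of triangles matches the failed runs described in this thread.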

I am unsure as to what is going wrong. I have been fairly diligent about doing things to improve mesh correlation and creation, but it doesn't seem to work. Notably, I've been able to get Meshroom to work with photographs of large objects from a distance and screenshots of a 3D model, but I haven't been able to get it to work with photos of these smaller specimens.

Desktop (please complete the following and other pertinent information):
