
Custom object detection fails even if using a training image as input #194


I have the same problem; maybe we can discuss the following points.


Hello @sejmoonwei, sorry for the late response.


Well, do you get correct results on your training images, or does detection fail only in the real scene? I recommend not using ROS yet; a standalone inference script will simplify this. I can share one if you need it.


No, not even on training images. I would be grateful if you could provide me with such a script, as it would allow me to iterate faster in the training/testing process.


This is the one I currently use for inference. It takes a single image as input and shows the belief maps and the cuboid of the object. Please resize your image to 640x480 before passing it in, or it may raise a runtime error.
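A minimal sketch of the resize step mentioned above, assuming Pillow is installed. The helper name `prepare_for_inference` is hypothetical and not part of the shared script. Note that a plain resize does not preserve the aspect ratio, so a non-4:3 image will be stretched.

```python
from PIL import Image

# Input size the inference script reportedly expects, as (width, height).
TARGET_SIZE = (640, 480)


def prepare_for_inference(img: Image.Image) -> Image.Image:
    """Convert to 3-channel RGB and resize to 640x480 (aspect ratio not preserved)."""
    return img.convert("RGB").resize(TARGET_SIZE)
```

Usage would be along the lines of `prepare_for_inference(Image.open("frame.png")).save("frame_640x480.png")`, where both file names are placeholders.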


Thank you for the link! I will try it sometime during the winter holidays, as for now I am stuck with bureaucratic work. The camera SDK is capable of estimating the 3D position of the detected object, and there is a Python API available for further processing of real-time data. Of course, DOPE is the better alternative as it does not depend on RGB-D images, but the ZED camera is also an option.


I'll read them, thanks. Let's keep in touch on this (custom object detection, DOPE).

Hello,

First of all, congratulations on your hard work on DOPE and NDDS!

I used NDDS to generate a dataset of 20K images by following the instructions from the wiki.

The dataset looks good even when loaded using

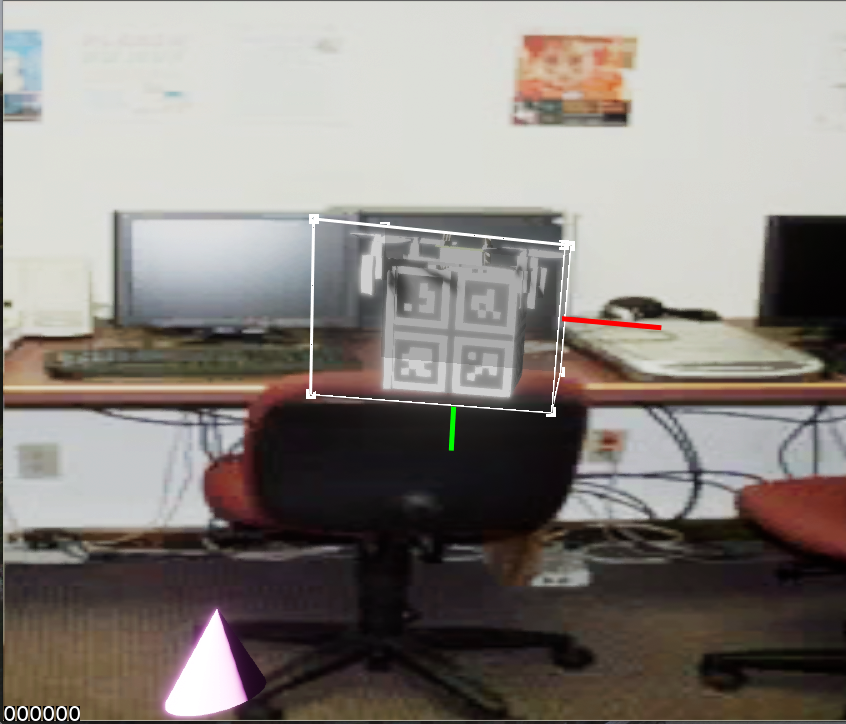

nvdu_vizSince I don't yet have the real object assembled (a Crazyflie drone with a QR code beneath), I tried to point the webcam towads my monitor where one of the training images was displayed. Nothing happend unfortunately.

Afterwards, I tried using the ROS `image_publisher` node to stream a single image as a webcam feed, thus avoiding the occasional noise caused by the monitor refresh rate. I also published the `camera_info` topic along with `image_raw`. However, even when provided with a clear static image, the model does not detect the custom object.
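One way to tell whether the network produces any response at all is to look for peaks in its belief maps for a training image. The sketch below is a simplified local-maximum test (each pixel above a threshold is compared with its 4 neighbours) on a belief map given as a nested list; it is not DOPE's detector code, and the function name `find_peaks` and the 0.1 threshold are hypothetical choices for illustration.

```python
from typing import List, Tuple

# Hypothetical threshold; tune it for your own belief maps.
THRESHOLD = 0.1


def find_peaks(belief: List[List[float]], threshold: float = THRESHOLD) -> List[Tuple[int, int]]:
    """Return (row, col) of pixels above `threshold` that are >= all 4 neighbours."""
    if not belief or not belief[0]:
        return []
    rows, cols = len(belief), len(belief[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            v = belief[r][c]
            if v <= threshold:
                continue  # too weak to count as a keypoint response
            # Collect the up/down/left/right neighbours that lie inside the map.
            neighbours = [
                belief[rr][cc]
                for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= rr < rows and 0 <= cc < cols
            ]
            if all(v >= n for n in neighbours):
                peaks.append((r, c))
    return peaks
```

If the maps for a training image yield no peaks at all under a reasonable threshold, the problem more likely lies in training than in the ROS image pipeline.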

At this point, I don't know the cause of my problems, so any expert advice on this matter is highly appreciated :)

My possible theories are that:

- `image_publisher` …

If anyone wants to try out the dataset, I can provide it.

Since this work is part of a research project, I intend to write a white paper on the exact training steps and the hiccups along the way, and to publish it on GitHub along with all of the required training data.
