Trainer with ddp accelerator creates too many GPU processes #4828

Replies: 14 comments

-


Hi! Thanks for your contribution! Great first issue!

-

Reduce the number of workers in the dataloader (num_workers).

-

I have set it to 0, but that doesn't help.

-

Are you experiencing any performance degradation? I'm not familiar with gpustat. Can you look at the process tree in htop?

-

-

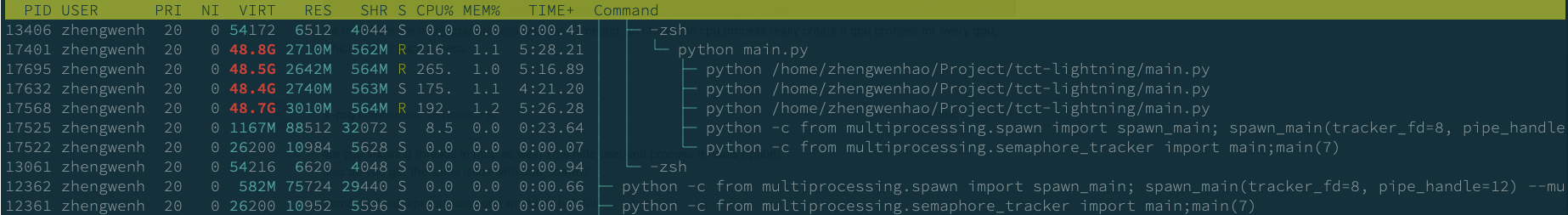

Are you showing threads in htop as well (see the "Hide userland process threads" option)? Some minimal reproducible code would be helpful!

-

@shenmishajing I think num_workers no longer controls the exact number of threads created per GPU in PyTorch. One post that highlights this: https://discuss.pytorch.org/t/total-number-of-processes-and-threads-created-using-nn-distributed-parallel/71043 But let me confirm whether this is a PyTorch and ddp backend behavior rather than a Lightning issue.

-

-

Yeah, so the many processes you were seeing before are actually threads, and like @ananyahjha93 said, I'm pretty sure that is PyTorch behavior. The other problem is an issue with TorchGraph and not Lightning: it has to do with the way certain functions are implemented, which makes them un-picklable. If you're using TorchGraph, I would stick with ddp for now and perhaps post an issue there.
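To illustrate the thread-versus-process distinction mentioned above, here is a minimal sketch that uses only the Python standard library (no PyTorch involved; the helper names are made up for illustration). Threads started inside a process all report the same PID, while a real child process reports a different one, which is why a tool that lists threads can appear to show many "processes".

```python
import os
import subprocess
import sys
import threading


def pids_seen_by_threads(n_threads=4):
    """Start n_threads threads and collect the PID each one reports."""
    pids = []
    lock = threading.Lock()

    def record_pid():
        with lock:
            pids.append(os.getpid())

    threads = [threading.Thread(target=record_pid) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return pids


def pid_of_child_process():
    """Run a separate Python interpreter and return the PID it reports."""
    out = subprocess.run(
        [sys.executable, "-c", "import os; print(os.getpid())"],
        capture_output=True, text=True, check=True,
    )
    return int(out.stdout.strip())


thread_pids = pids_seen_by_threads()
child_pid = pid_of_child_process()
print("parent PID:", os.getpid())
print("PIDs reported by threads:", sorted(set(thread_pids)))
print("PID of child process:", child_pid)
```

All threads share the parent's PID, so toggling the thread display in htop is a quick way to check whether the extra entries are threads or genuinely separate processes.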

-

When I use the Trainer with the ddp accelerator, I get output like this:

It looks like every process can see all the GPUs. Would it fix this issue if we set the CUDA_VISIBLE_DEVICES variable in every process before running the training code?
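A hedged sketch of the idea in the question above: give each worker process its own CUDA_VISIBLE_DEVICES containing a single GPU before that process starts. CUDA reads this variable when it initializes, so it has to be set before the worker touches the GPU. The helper name `per_rank_env` and the GPU IDs are illustrative assumptions; this is not how Lightning's ddp backend launches its workers, and the child process here only echoes its environment rather than training anything.

```python
import os
import subprocess
import sys


def per_rank_env(gpu_ids, base_env=None):
    """Return one environment dict per worker, each exposing a single GPU.

    Hypothetical helper for illustration; not part of PyTorch or Lightning.
    """
    base_env = dict(os.environ if base_env is None else base_env)
    envs = []
    for local_rank, gpu_id in enumerate(gpu_ids):
        env = dict(base_env)
        env["CUDA_VISIBLE_DEVICES"] = str(gpu_id)  # this worker sees one GPU only
        env["LOCAL_RANK"] = str(local_rank)
        envs.append(env)
    return envs


# Stand-in for a training script: it just reports what it can see.
CHILD_CODE = (
    "import os; "
    "print(os.environ['LOCAL_RANK'], os.environ['CUDA_VISIBLE_DEVICES'])"
)

results = []
for env in per_rank_env([3, 4]):
    out = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        env=env, capture_output=True, text=True, check=True,
    )
    results.append(out.stdout.strip())

print(results)
```

Inside each worker the single visible GPU is renumbered as device 0, so training code launched this way would address `cuda:0` regardless of the physical GPU index.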

-

Same problem. Besides, if we set, say, gpus=[3, 4], the first two GPUs are also used, although they do no actual computation (GPU-Util 0%). I got similar output:

GPU available: True, used: True

TPU available: False, using: 0 TPU cores

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0,1,2,3,4,5,6,7]

LOCAL_RANK: 1 - CUDA_VISIBLE_DEVICES: [0,1,2,3,4,5,6,7]

initializing ddp: GLOBAL_RANK: 1, MEMBER: 2/2

initializing ddp: GLOBAL_RANK: 0, MEMBER: 1/2

Is this an issue with PyTorch or PL?

-

A solution for this.

But, if we use cmd


-

Was this bug in the ddp backend solved?

-

Evidently, it is not fixed yet. An alternative solution is to rewrite the ddp backend so that it follows the logic of the launch script in PyTorch.
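As a rough sketch of what "following the logic of the launch script" could mean: the `torch.distributed.launch` utility starts one subprocess per local rank and passes rank information through environment variables such as MASTER_ADDR, MASTER_PORT, WORLD_SIZE, RANK, and LOCAL_RANK. The function below only builds that per-process plan using the standard library; `build_launch_plan` and `train.py` are hypothetical names, and this is a simplification under those assumptions, not the actual PyTorch or Lightning code.

```python
import sys


def build_launch_plan(script, nproc_per_node, nnodes=1, node_rank=0,
                      master_addr="127.0.0.1", master_port=29500):
    """Build (command, env_overrides) pairs, one per local rank.

    Hypothetical helper mirroring the general shape of a per-rank launcher.
    """
    world_size = nproc_per_node * nnodes
    plan = []
    for local_rank in range(nproc_per_node):
        env_overrides = {
            "MASTER_ADDR": master_addr,
            "MASTER_PORT": str(master_port),
            "WORLD_SIZE": str(world_size),
            # Global rank = ranks on earlier nodes + position on this node.
            "RANK": str(nproc_per_node * node_rank + local_rank),
            "LOCAL_RANK": str(local_rank),
        }
        command = [sys.executable, "-u", script]
        plan.append((command, env_overrides))
    return plan


plan = build_launch_plan("train.py", nproc_per_node=2)
for command, env_overrides in plan:
    print(command[1:], env_overrides["RANK"], env_overrides["LOCAL_RANK"])
```

Each worker started this way would then typically pick its device from LOCAL_RANK (for example with `torch.cuda.set_device`) before doing any CUDA work, which should keep it from creating a context on the other GPUs.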

-

❓ Questions and Help

What is your question?

When I use the ddp accelerator with multiple GPUs on a single machine, I get this from the gpustat command:

Here xx is my user name and aaa is another user. I use only the first 6 GPUs and leave the last GPU for the other user. But my code creates too many GPU processes on the first 6 GPUs, and even creates the same number of processes on the last GPU.

My code looks like:

It looks like every CPU process creates a GPU process on every visible GPU, although each one uses only a single GPU. How can I force each of them to create only one GPU process, on the right GPU? I want the GPU processes to look like:

What's your environment?
