diff --git a/README.md b/README.md

index 311d22c..2536c93 100644

--- a/README.md

+++ b/README.md

@@ -11,34 +11,66 @@

[](https://gitter.im/DeepLabCut/community?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

[](https://twitter.com/DeepLabCut)

-This package contains a [DeepLabCut](http://www.mousemotorlab.org/deeplabcut) inference pipeline for real-time applications that has minimal (software) dependencies. Thus, it is as easy to install as possible (in particular, on atypical systems like [NVIDIA Jetson boards](https://developer.nvidia.com/buy-jetson)).

-

-**Performance:** If you would like to see estimates on how your model should perform given different video sizes, neural network type, and hardware, please see: https://deeplabcut.github.io/DLC-inferencespeed-benchmark/

-

-If you have different hardware, please consider submitting your results too! https://github.com/DeepLabCut/DLC-inferencespeed-benchmark

-

-**What this SDK provides:** This package provides a `DLCLive` class which enables pose estimation online to provide feedback. This object loads and prepares a DeepLabCut network for inference, and will return the predicted pose for single images.

-

-To perform processing on poses (such as predicting the future pose of an animal given it's current pose, or to trigger external hardware like send TTL pulses to a laser for optogenetic stimulation), this object takes in a `Processor` object. Processor objects must contain two methods: process and save.

-

-- The `process` method takes in a pose, performs some processing, and returns processed pose.

+This package contains a [DeepLabCut](http://www.mousemotorlab.org/deeplabcut) inference

+pipeline for real-time applications that has minimal (software) dependencies. Thus, it

+is as easy to install as possible (in particular, on atypical systems like [

+NVIDIA Jetson boards](https://developer.nvidia.com/buy-jetson)).

+

+If you've used DeepLabCut-Live with TensorFlow models and want to try the PyTorch

+version, take a look at [_Switching from TensorFlow to PyTorch_](

+#Switching-from-TensorFlow-to-PyTorch).

+

+**Performance of TensorFlow models:** If you would like to see estimates on how your

+model should perform given different video sizes, neural network type, and hardware,

+please see: [deeplabcut.github.io/DLC-inferencespeed-benchmark/

+](https://deeplabcut.github.io/DLC-inferencespeed-benchmark/). **We're working on

+getting these benchmarks for PyTorch architectures as well.**

+

+If you have different hardware, please consider [submitting your results too](

+https://github.com/DeepLabCut/DLC-inferencespeed-benchmark)!

+

+**What this SDK provides:** This package provides a `DLCLive` class which enables pose

+estimation online to provide feedback. This object loads and prepares a DeepLabCut

+network for inference, and will return the predicted pose for single images.

+

+To perform processing on poses (such as predicting the future pose of an animal given

+its current pose, or triggering external hardware, e.g. sending TTL pulses to a laser for

+optogenetic stimulation), this object takes in a `Processor` object. Processor objects

+must contain two methods: `process` and `save`.

+

+- The `process` method takes in a pose, performs some processing, and returns the processed

+pose.

- The `save` method saves any valuable data created by or used by the processor

For more details and examples, see documentation [here](dlclive/processor/README.md).

-###### 🔥🔥🔥🔥🔥 Note :: alone, this object does not record video or capture images from a camera. This must be done separately, i.e. see our [DeepLabCut-live GUI](https://github.com/gkane26/DeepLabCut-live-GUI).🔥🔥🔥

-

-### News!

-- March 2022: DeepLabCut-Live! 1.0.2 supports poetry installation `poetry install deeplabcut-live`, thanks to PR #60.

-- March 2021: DeepLabCut-Live! [**version 1.0** is released](https://pypi.org/project/deeplabcut-live/), with support for tensorflow 1 and tensorflow 2!

-- Feb 2021: DeepLabCut-Live! was featured in **Nature Methods**: ["Real-time behavioral analysis"](https://www.nature.com/articles/s41592-021-01072-z)

-- Jan 2021: full **eLife** paper is published: ["Real-time, low-latency closed-loop feedback using markerless posture tracking"](https://elifesciences.org/articles/61909)

-- Dec 2020: we talked to **RTS Suisse Radio** about DLC-Live!: ["Capture animal movements in real time"](https://www.rts.ch/play/radio/cqfd/audio/capturer-les-mouvements-des-animaux-en-temps-reel?id=11782529)

-

-

-### Installation:

-

-Please see our instruction manual to install on a [Windows or Linux machine](docs/install_desktop.md) or on a [NVIDIA Jetson Development Board](docs/install_jetson.md). Note, this code works with tensorflow (TF) 1 or TF 2 models, but TF requires that whatever version you exported your model with, you must import with the same version (i.e., export with TF1.13, then use TF1.13 with DlC-Live; export with TF2.3, then use TF2.3 with DLC-live).

+**🔥🔥🔥🔥🔥 Note :: alone, this object does not record video or capture images from a

+camera. This must be done separately, e.g. see our [DeepLabCut-live GUI](

+https://github.com/DeepLabCut/DeepLabCut-live-GUI).🔥🔥🔥🔥🔥**

+

+### News!

+

+- **WIP 2025**: DeepLabCut-Live is implemented for models trained with the PyTorch engine!

+- March 2022: DeepLabCut-Live! 1.0.2 supports poetry installation `poetry install

+deeplabcut-live`, thanks to PR #60.

+- March 2021: DeepLabCut-Live! [**version 1.0** is released](https://pypi.org/project/deeplabcut-live/), with support for

+tensorflow 1 and tensorflow 2!

+- Feb 2021: DeepLabCut-Live! was featured in **Nature Methods**: [

+"Real-time behavioral analysis"](https://www.nature.com/articles/s41592-021-01072-z)

+- Jan 2021: full **eLife** paper is published: ["Real-time, low-latency closed-loop

+feedback using markerless posture tracking"](https://elifesciences.org/articles/61909)

+- Dec 2020: we talked to **RTS Suisse Radio** about DLC-Live!: ["Capture animal

+movements in real time"](

+https://www.rts.ch/play/radio/cqfd/audio/capturer-les-mouvements-des-animaux-en-temps-reel?id=11782529)

+

+### Installation

+

+Please see our instruction manual to install on a [Windows or Linux machine](

+docs/install_desktop.md) or on a [NVIDIA Jetson Development Board](

+docs/install_jetson.md). Note, this code works with PyTorch, TensorFlow 1 or TensorFlow

+2 models, but whatever engine you exported your model with, you must import with the

+same version (i.e., export a PyTorch model, then install PyTorch; export with TF1.13,

+then use TF1.13 with DLC-Live; export with TF2.3, then use TF2.3 with DLC-Live).

- available on pypi as: `pip install deeplabcut-live`

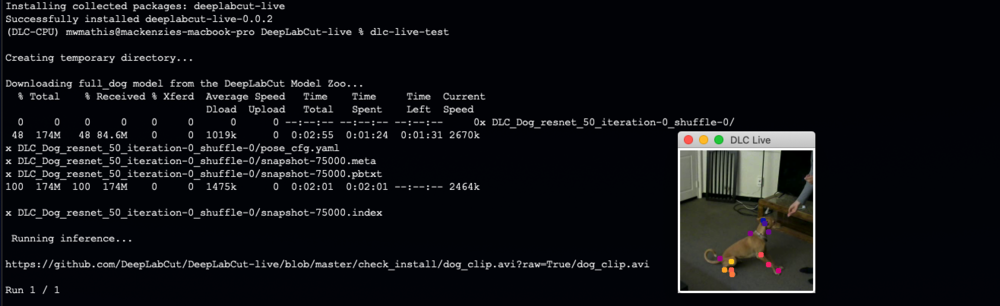

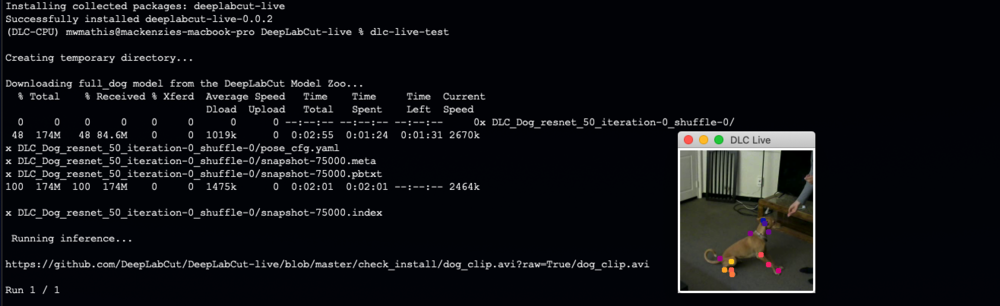

@@ -46,11 +78,25 @@ Note, you can then test your installation by running:

`dlc-live-test`

-If installed properly, this script will i) create a temporary folder ii) download the full_dog model from the [DeepLabCut Model Zoo](http://www.mousemotorlab.org/dlc-modelzoo), iii) download a short video clip of a dog, and iv) run inference while displaying keypoints. v) remove the temporary folder.

+If installed properly, this script will i) create a temporary folder ii) download the

+full_dog model from the [DeepLabCut Model Zoo](

+http://www.mousemotorlab.org/dlc-modelzoo), iii) download a short video clip of

+a dog, and iv) run inference while displaying keypoints. v) remove the temporary folder.

-### Quick Start: instructions for use:

+PyTorch and TensorFlow can be installed as extras with `deeplabcut-live` - though be

+careful with the versions you install!

+

+```bash

+# Install deeplabcut-live and PyTorch

+pip install "deeplabcut-live[pytorch]"

+

+# Install deeplabcut-live and TensorFlow

+pip install "deeplabcut-live[tf]"

+```

+

+### Quick Start: instructions for use

1. Initialize `Processor` (if desired)

2. Initialize the `DLCLive` object

@@ -66,81 +112,161 @@ dlc_live.get_pose()

`DLCLive` **parameters:**

- - `path` = string; full path to the exported DLC model directory

- - `model_type` = string; the type of model to use for inference. Types include:

- - `base` = the base DeepLabCut model

- - `tensorrt` = apply [tensor-rt](https://developer.nvidia.com/tensorrt) optimizations to model

- - `tflite` = use [tensorflow lite](https://www.tensorflow.org/lite) inference (in progress...)

- - `cropping` = list of int, optional; cropping parameters in pixel number: [x1, x2, y1, y2]

- - `dynamic` = tuple, optional; defines parameters for dynamic cropping of images

- - `index 0` = use dynamic cropping, bool

- - `index 1` = detection threshold, float

- - `index 2` = margin (in pixels) around identified points, int

- - `resize` = float, optional; factor by which to resize image (resize=0.5 downsizes both width and height of image by half). Can be used to downsize large images for faster inference

- - `processor` = dlc pose processor object, optional

- - `display` = bool, optional; display processed image with DeepLabCut points? Can be used to troubleshoot cropping and resizing parameters, but is very slow

+- `path` = string; full path to the exported DLC model directory

+- `model_type` = string; the type of model to use for inference. Types include:

+ - `pytorch` = the base PyTorch DeepLabCut model

+ - `base` = the base TensorFlow DeepLabCut model

+ - `tensorrt` = apply [tensor-rt](https://developer.nvidia.com/tensorrt) optimizations to model

+ - `tflite` = use [tensorflow lite](https://www.tensorflow.org/lite) inference (in progress...)

+- `cropping` = list of int, optional; cropping parameters in pixel number: [x1, x2, y1, y2]

+- `dynamic` = tuple, optional; defines parameters for dynamic cropping of images

+ - `index 0` = use dynamic cropping, bool

+ - `index 1` = detection threshold, float

+ - `index 2` = margin (in pixels) around identified points, int

+- `resize` = float, optional; factor by which to resize image (resize=0.5 downsizes

+ both width and height of image by half). Can be used to downsize large images for

+ faster inference

+- `processor` = dlc pose processor object, optional

+- `display` = bool, optional; display processed image with DeepLabCut points? Can be

+ used to troubleshoot cropping and resizing parameters, but is very slow

`DLCLive` **inputs:**

- - `` = path to the folder that has the `.pb` files that you acquire after running `deeplabcut.export_model`

- - `` = is a numpy array of each frame

+- `` =

+ - For TensorFlow models: path to the folder that has the `.pb` files that you

+ acquire after running `deeplabcut.export_model`

+ - For PyTorch models: path to the `.pt` file that is generated after running

+ `deeplabcut.export_model`

+- `` = is a numpy array of each frame

+

+#### DLCLive - PyTorch Specific Guide

+

+This guide is for users who trained a model with the PyTorch engine with

+`DeepLabCut 3.0`.

+Once you've trained your model in [DeepLabCut](https://github.com/DeepLabCut/DeepLabCut)

+and you are happy with its performance, you can export the model to be used for live

+inference with DLCLive!

+

+### Switching from TensorFlow to PyTorch

+

+This section is for users who **have already used DeepLabCut-Live** with

+TensorFlow models (through DeepLabCut 1.X or 2.X) and want to switch to using the

+PyTorch Engine. Some quick notes:

+

+- You may need to adapt your code slightly when creating the DLCLive instance.

+- Processors that were created for TensorFlow models will function the same way with

+PyTorch models. As multi-animal models can be used with PyTorch, the shape of the `pose`

+array given to the processor may be `(num_individuals, num_keypoints, 3)`. Just call

+`DLCLive(..., single_animal=True)` and it will work.

### Benchmarking/Analyzing your exported DeepLabCut models

-DeepLabCut-live offers some analysis tools that allow users to peform the following operations on videos, from python or from the command line:

+DeepLabCut-live offers some analysis tools that allow users to perform the following

+operations on videos, from python or from the command line:

+

+#### Test inference speed across a range of image sizes

+

+Downsizing images can be done by specifying the `resize` or `pixels` parameter. Using

+the `pixels` parameter will resize images to the desired number of `pixels`, without

+changing the aspect ratio. Results will be saved (along with system info) to a pickle

+file if you specify an output directory.

+

+Inside a **python** shell or script, you can run:

-1. Test inference speed across a range of image sizes, downsizing images by specifying the `resize` or `pixels` parameter. Using the `pixels` parameter will resize images to the desired number of `pixels`, without changing the aspect ratio. Results will be saved (along with system info) to a pickle file if you specify an output directory.

-##### python

```python

-dlclive.benchmark_videos('/path/to/exported/model', ['/path/to/video1', '/path/to/video2'], output='/path/to/output', resize=[1.0, 0.75, '0.5'])

-```

-##### command line

+dlclive.benchmark_videos(

+ "/path/to/exported/model",

+ ["/path/to/video1", "/path/to/video2"],

+ output="/path/to/output",

+ resize=[1.0, 0.75, 0.5],

+)

```

+

+From the **command line**, you can run:

+

+```bash

dlc-live-benchmark /path/to/exported/model /path/to/video1 /path/to/video2 -o /path/to/output -r 1.0 0.75 0.5

```

-2. Display keypoints to visually inspect the accuracy of exported models on different image sizes (note, this is slow and only for testing purposes):

+#### Display keypoints to visually inspect the accuracy of exported models on different image sizes (note, this is slow and only for testing purposes):

+

+Inside a **python** shell or script, you can run:

-##### python

```python

-dlclive.benchmark_videos('/path/to/exported/model', '/path/to/video', resize=0.5, display=True, pcutoff=0.5, display_radius=4, cmap='bmy')

-```

-##### command line

+dlclive.benchmark_videos(

+ "/path/to/exported/model",

+ "/path/to/video",

+ resize=0.5,

+ display=True,

+ pcutoff=0.5,

+ display_radius=4,

+ cmap='bmy'

+)

```

+

+From the **command line**, you can run:

+

+```bash

dlc-live-benchmark /path/to/exported/model /path/to/video -r 0.5 --display --pcutoff 0.5 --display-radius 4 --cmap bmy

```

-3. Analyze and create a labeled video using the exported model and desired resize parameters. This option functions similar to `deeplabcut.benchmark_videos` and `deeplabcut.create_labeled_video` (note, this is slow and only for testing purposes).

+#### Analyze and create a labeled video using the exported model and desired resize parameters.

+

+This option functions similar to `deeplabcut.benchmark_videos` and

+`deeplabcut.create_labeled_video` (note, this is slow and only for testing purposes).

+

+Inside a **python** shell or script, you can run:

-##### python

```python

-dlclive.benchmark_videos('/path/to/exported/model', '/path/to/video', resize=[1.0, 0.75, 0.5], pcutoff=0.5, display_radius=4, cmap='bmy', save_poses=True, save_video=True)

+dlclive.benchmark_videos(

+ "/path/to/exported/model",

+ "/path/to/video",

+ resize=[1.0, 0.75, 0.5],

+ pcutoff=0.5,

+ display_radius=4,

+ cmap='bmy',

+ save_poses=True,

+ save_video=True,

+)

```

-##### command line

+

+From the **command line**, you can run:

+

```

dlc-live-benchmark /path/to/exported/model /path/to/video -r 0.5 --pcutoff 0.5 --display-radius 4 --cmap bmy --save-poses --save-video

```

## License:

-This project is licensed under the GNU AGPLv3. Note that the software is provided "as is", without warranty of any kind, express or implied. If you use the code or data, we ask that you please cite us! This software is available for licensing via the EPFL Technology Transfer Office (https://tto.epfl.ch/, info.tto@epfl.ch).

+This project is licensed under the GNU AGPLv3. Note that the software is provided "as

+is", without warranty of any kind, express or implied. If you use the code or data, we

+ask that you please cite us! This software is available for licensing via the EPFL

+Technology Transfer Office (https://tto.epfl.ch/, info.tto@epfl.ch).

## Community Support, Developers, & Help:

-This is an actively developed package and we welcome community development and involvement.

-

-- If you want to contribute to the code, please read our guide [here](https://github.com/DeepLabCut/DeepLabCut/blob/master/CONTRIBUTING.md), which is provided at the main repository of DeepLabCut.

-

-- We are a community partner on the [](https://forum.image.sc/tags/deeplabcut). Please post help and support questions on the forum with the tag DeepLabCut. Check out their mission statement [Scientific Community Image Forum: A discussion forum for scientific image software](https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.3000340).

-

-- If you encounter a previously unreported bug/code issue, please post here (we encourage you to search issues first): https://github.com/DeepLabCut/DeepLabCut-live/issues

-

-- For quick discussions here: [](https://gitter.im/DeepLabCut/community?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

+This is an actively developed package, and we welcome community development and

+involvement.

+

+- If you want to contribute to the code, please read our guide [here](

+https://github.com/DeepLabCut/DeepLabCut/blob/master/CONTRIBUTING.md), which is provided

+at the main repository of DeepLabCut.

+- We are a community partner on the [](https://forum.image.sc/tags/deeplabcut). Please post help and

+support questions on the forum with the tag DeepLabCut. Check out their mission

+statement [Scientific Community Image Forum: A discussion forum for scientific image

+software](https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.3000340).

+- If you encounter a previously unreported bug/code issue, please post here (we

+encourage you to search issues first): [github.com/DeepLabCut/DeepLabCut-live/issues](

+https://github.com/DeepLabCut/DeepLabCut-live/issues)

+- For quick discussions here: [](

+https://gitter.im/DeepLabCut/community?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

### Reference:

-If you utilize our tool, please [cite Kane et al, eLife 2020](https://elifesciences.org/articles/61909). The preprint is available here: https://www.biorxiv.org/content/10.1101/2020.08.04.236422v2

+If you utilize our tool, please [cite Kane et al, eLife 2020](https://elifesciences.org/articles/61909). The preprint is

+available here: https://www.biorxiv.org/content/10.1101/2020.08.04.236422v2

```

@Article{Kane2020dlclive,

@@ -150,4 +276,3 @@ If you utilize our tool, please [cite Kane et al, eLife 2020](https://elifescien

year = {2020},

}

```

-
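The README text above says a `Processor` must provide two methods, `process` and `save`. The sketch below illustrates that contract under stated assumptions and is not the library's own implementation. The class name `ThresholdLogger`, its constructor arguments, the `frame_time` keyword, and the JSON output format are all hypothetical, and the pose is assumed to be a sequence of `(x, y, likelihood)` rows, one per keypoint.

```python
import json
import time


class ThresholdLogger:
    """Hypothetical processor: records frames where one keypoint is confidently detected."""

    def __init__(self, keypoint_index=0, threshold=0.5):
        self.keypoint_index = keypoint_index
        self.threshold = threshold
        self.events = []  # one entry per frame where the keypoint passed the threshold

    def process(self, pose, **kwargs):
        # Assumption: pose[i] is (x, y, likelihood) for keypoint i.
        x, y, likelihood = pose[self.keypoint_index]
        if likelihood >= self.threshold:
            # This is the point where external hardware could be triggered.
            frame_time = kwargs.get("frame_time", time.time())
            self.events.append({"time": frame_time, "x": float(x), "y": float(y)})
        # Return the pose (unchanged here) so the caller still receives it.
        return pose

    def save(self, filename):
        # Persist whatever the processor collected; returns the number of events written.
        with open(filename, "w") as f:
            json.dump(self.events, f)
        return len(self.events)
```

In real use such an object would presumably subclass the package's `Processor` and be passed through the `processor` parameter of `DLCLive`. With multi-animal PyTorch models the pose may carry an extra leading individual dimension, so the indexing above would need adapting.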

diff --git a/dlclive/__init__.py b/dlclive/__init__.py

index 2eff208..71a89d9 100644

--- a/dlclive/__init__.py

+++ b/dlclive/__init__.py

@@ -5,7 +5,7 @@

Licensed under GNU Lesser General Public License v3.0

"""

-from dlclive.version import __version__, VERSION

+from dlclive.display import Display

from dlclive.dlclive import DLCLive

-from dlclive.processor import Processor

-from dlclive.benchmark import benchmark, benchmark_videos, download_benchmarking_data

+from dlclive.processor.processor import Processor

+from dlclive.version import VERSION, __version__
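The README describes a `pixels` option that resizes images to a target pixel count without changing the aspect ratio. A minimal sketch of the arithmetic behind such an option follows. The helper names `resize_factor_for_pixels` and `resized_shape` are hypothetical and used only for illustration.

```python
import math


def resize_factor_for_pixels(width, height, pixels):
    """Return the scale factor that brings a width x height frame to about `pixels` pixels."""
    # Scaling both sides by s multiplies the pixel count by s**2,
    # so s = sqrt(target_pixels / current_pixels) preserves the aspect ratio.
    return math.sqrt(pixels / (width * height))


def resized_shape(width, height, factor):
    """Apply the factor to both dimensions, truncating to whole pixels."""
    return int(width * factor), int(height * factor)


factor = resize_factor_for_pixels(640, 480, 76_800)
print(factor, resized_shape(640, 480, factor))
```

Because both dimensions are truncated to integers, the resulting pixel count can fall slightly below the requested target for sizes that do not divide evenly.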

diff --git a/dlclive/benchmark.py b/dlclive/benchmark.py

index 4cb4fb1..2f0f2af 100644

--- a/dlclive/benchmark.py

+++ b/dlclive/benchmark.py

@@ -5,133 +5,94 @@

Licensed under GNU Lesser General Public License v3.0

"""

-

+import csv

import platform

import os

-import time

+import subprocess

import sys

+import time

import warnings

-import subprocess

-import typing

-import pickle

+from pathlib import Path

+

import colorcet as cc

+import cv2

+import numpy as np

+import torch

from PIL import ImageColor

-import ruamel

+from pip._internal.operations import freeze

try:

- from pip._internal.operations import freeze

-except ImportError:

- from pip.operations import freeze

-

-from tqdm import tqdm

-import numpy as np

-import tensorflow as tf

-import cv2

+ import pandas as pd

-from dlclive import DLCLive

-from dlclive import VERSION

-from dlclive import __file__ as dlcfile

+ has_pandas = True

+except ModuleNotFoundError as err:

+ has_pandas = False

-from dlclive.utils import decode_fourcc

+try:

+ from tqdm import tqdm

+ has_tqdm = True

+except ModuleNotFoundError as err:

+ has_tqdm = False

-def download_benchmarking_data(

- target_dir=".",

- url="http://deeplabcut.rowland.harvard.edu/datasets/dlclivebenchmark.tar.gz",

-):

- """

- Downloads a DeepLabCut-Live benchmarking Data (videos & DLC models).

- """

- import urllib.request

- import tarfile

- from tqdm import tqdm

- def show_progress(count, block_size, total_size):

- pbar.update(block_size)

-

- def tarfilenamecutting(tarf):

- """' auxfun to extract folder path

- ie. /xyz-trainsetxyshufflez/

- """

- for memberid, member in enumerate(tarf.getmembers()):

- if memberid == 0:

- parent = str(member.path)

- l = len(parent) + 1

- if member.path.startswith(parent):

- member.path = member.path[l:]

- yield member

-

- response = urllib.request.urlopen(url)

- print(

- "Downloading the benchmarking data from the DeepLabCut server @Harvard -> Go Crimson!!! {}....".format(

- url

- )

- )

- total_size = int(response.getheader("Content-Length"))

- pbar = tqdm(unit="B", total=total_size, position=0)

- filename, _ = urllib.request.urlretrieve(url, reporthook=show_progress)

- with tarfile.open(filename, mode="r:gz") as tar:

- tar.extractall(target_dir, members=tarfilenamecutting(tar))

+from dlclive import DLCLive

+from dlclive.utils import decode_fourcc

+from dlclive.version import VERSION

def get_system_info() -> dict:

- """ Return summary info for system running benchmark

+ """

+ Returns a summary of system information relevant to running benchmarking.

+

Returns

-------

dict

- Dictionary containing the following system information:

- * ``host_name`` (str): name of machine

- * ``op_sys`` (str): operating system

- * ``python`` (str): path to python (which conda/virtual environment)

- * ``device`` (tuple): (device type (``'GPU'`` or ``'CPU'```), device information)

- * ``freeze`` (list): list of installed packages and versions

- * ``python_version`` (str): python version

- * ``git_hash`` (str, None): If installed from git repository, hash of HEAD commit

- * ``dlclive_version`` (str): dlclive version from :data:`dlclive.VERSION`

+ A dictionary containing the following system information:

+ - host_name (str): Name of the machine.

+ - op_sys (str): Operating system.

+ - python (str): Path to the Python executable, indicating the conda/virtual

+ environment in use.

+ - device_type (str): Type of device used ('GPU' or 'CPU').

+ - device (list): List containing the name of the GPU or CPU brand.

+ - freeze (list): List of installed Python packages with their versions.

+ - python_version (str): Version of Python in use.

+ - git_hash (str or None): If installed from git repository, hash of HEAD commit.

+ - dlclive_version (str): Version of the DLCLive package.

"""

- # get os

-

+ # Get OS and host name

op_sys = platform.platform()

host_name = platform.node().replace(" ", "")

- # A string giving the absolute path of the executable binary for the Python interpreter, on systems where this makes sense.

+ # Get Python executable path

if platform.system() == "Windows":

host_python = sys.executable.split(os.path.sep)[-2]

else:

host_python = sys.executable.split(os.path.sep)[-3]

- # try to get git hash if possible

- dlc_basedir = os.path.dirname(os.path.dirname(dlcfile))

+ # Try to get git hash if possible

git_hash = None

+ dlc_basedir = os.path.dirname(os.path.dirname(__file__))

try:

- git_hash = subprocess.check_output(

- ["git", "rev-parse", "HEAD"], cwd=dlc_basedir

+ git_hash = (

+ subprocess.check_output(["git", "rev-parse", "HEAD"], cwd=dlc_basedir)

+ .decode("utf-8")

+ .strip()

)

- git_hash = git_hash.decode("utf-8").rstrip("\n")

except subprocess.CalledProcessError:

- # not installed from git repo, eg. pypi

- # fine, pass quietly

+ # Not installed from git repo, e.g., pypi

pass

- # get device info (GPU or CPU)

- dev = None

- if tf.test.is_gpu_available():

- gpu_name = tf.test.gpu_device_name()

- from tensorflow.python.client import device_lib

-

- dev_desc = [

- d.physical_device_desc

- for d in device_lib.list_local_devices()

- if d.name == gpu_name

- ]

- dev = [d.split(",")[1].split(":")[1].strip() for d in dev_desc]

+ # Get device info (GPU or CPU)

+ if torch.cuda.is_available():

dev_type = "GPU"

+ dev = [torch.cuda.get_device_name(torch.cuda.current_device())]

else:

from cpuinfo import get_cpu_info

- dev = [get_cpu_info()["brand"]]

dev_type = "CPU"

+ dev = [get_cpu_info()["brand_raw"]]

return {

"host_name": host_name,

@@ -139,7 +99,6 @@ def get_system_info() -> dict:

"python": host_python,

"device_type": dev_type,

"device": dev,

- # pip freeze to get versions of all packages

"freeze": list(freeze.freeze()),

"python_version": sys.version,

"git_hash": git_hash,

@@ -148,66 +107,78 @@ def get_system_info() -> dict:

def benchmark(

- model_path,

- video_path,

- tf_config=None,

- resize=None,

- pixels=None,

- cropping=None,

- dynamic=(False, 0.5, 10),

- n_frames=1000,

- print_rate=False,

- display=False,

- pcutoff=0.0,

- display_radius=3,

- cmap="bmy",

- save_poses=False,

- save_video=False,

- output=None,

-) -> typing.Tuple[np.ndarray, tuple, bool, dict]:

- """ Analyze DeepLabCut-live exported model on a video:

- Calculate inference time,

- display keypoints, or

- get poses/create a labeled video

+ path: str | Path,

+ video_path: str | Path,

+ single_animal: bool = True,

+ resize: float | None = None,

+ pixels: int | None = None,

+ cropping: list[int] | None = None,

+ dynamic: tuple[bool, float, int] = (False, 0.5, 10),

+ n_frames: int = 1000,

+ print_rate: bool = False,

+ display: bool = False,

+ pcutoff: float = 0.0,

+ max_detections: int = 10,

+ display_radius: int = 3,

+ cmap: str = "bmy",

+ save_poses: bool = False,

+ save_video: bool = False,

+ output: str | Path | None = None,

+) -> tuple[np.ndarray, tuple, dict]:

+ """Analyze DeepLabCut-live exported model on a video:

+

+ Calculate inference time, display keypoints, or get poses/create a labeled video.

Parameters

----------

- model_path : str

+ path : str

path to exported DeepLabCut model

video_path : str

path to video file

- tf_config : :class:`tensorflow.ConfigProto`

- tensorflow session configuration

+ single_animal: bool

+ if True, poses have no individual dimension, matching DLCLive for TensorFlow models

resize : int, optional

- resize factor. Can only use one of resize or pixels. If both are provided, will use pixels. by default None

+ Resize factor. Can only use one of resize or pixels. If both are provided, will

+ use pixels. by default None

pixels : int, optional

- downsize image to this number of pixels, maintaining aspect ratio. Can only use one of resize or pixels. If both are provided, will use pixels. by default None

+ Downsize image to this number of pixels, maintaining aspect ratio. Can only use

+ one of resize or pixels. If both are provided, will use pixels. by default None

cropping : list of int

cropping parameters in pixel number: [x1, x2, y1, y2]

dynamic: triple containing (state, detectiontreshold, margin)

- If the state is true, then dynamic cropping will be performed. That means that if an object is detected (i.e. any body part > detectiontreshold),

- then object boundaries are computed according to the smallest/largest x position and smallest/largest y position of all body parts. This window is

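- expanded by the margin and from then on only the posture within this crop is analyzed (until the object is lost, i.e. <detectiontreshold). The current position is utilized for

- updating the crop window for the next frame (this is why the margin is important and should be set large enough given the movement of the animal)

+ If the state is true, then dynamic cropping will be performed. That means that if an

+ object is detected (i.e. any body part > detectiontreshold), then object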

+ boundaries are computed according to the smallest/largest x position and

+ smallest/largest y position of all body parts. This window is expanded by the

+ margin and from then on only the posture within this crop is analyzed (until the

+ object is lost, i.e. < detectiontreshold). The current position is utilized for

+ updating the crop window for the next frame (this is why the margin is important

+ and should be set large enough given the movement of the animal)

n_frames : int, optional

number of frames to run inference on, by default 1000

print_rate : bool, optional

- flat to print inference rate frame by frame, by default False

+ flag to print inference rate frame by frame, by default False

display : bool, optional

- flag to display keypoints on images. Useful for checking the accuracy of exported models.

+ flag to display keypoints on images. Useful for checking the accuracy of

+ exported models.

pcutoff : float, optional

likelihood threshold to display keypoints

+ max_detections: int

+ for top-down models, the maximum number of individuals to detect in a frame

display_radius : int, optional

size (radius in pixels) of keypoint to display

cmap : str, optional

- a string indicating the :package:`colorcet` colormap, `options here `, by default "bmy"

+ a string indicating the :package:`colorcet` colormap, `options here

+ `, by default "bmy"

save_poses : bool, optional

- flag to save poses to an hdf5 file. If True, operates similar to :function:`DeepLabCut.benchmark_videos`, by default False

+ flag to save poses to an hdf5 file. If True, operates similar to

+ :function:`DeepLabCut.benchmark_videos`, by default False

save_video : bool, optional

- flag to save a labeled video. If True, operates similar to :function:`DeepLabCut.create_labeled_video`, by default False

+ flag to save a labeled video. If True, operates similar to

+ :function:`DeepLabCut.create_labeled_video`, by default False

output : str, optional

- path to directory to save pose and/or video file. If not specified, will use the directory of video_path, by default None

+ path to directory to save pose and/or video file. If not specified, will use

+ the directory of video_path, by default None

Returns

-------

@@ -215,8 +186,6 @@ def benchmark(

vector of inference times

tuple

(image width, image height)

- bool

- tensorflow inference flag

dict

metadata for video

@@ -234,10 +203,19 @@ def benchmark(

Analyze a video (save poses to hdf5) and create a labeled video, similar to :function:`DeepLabCut.benchmark_videos` and :function:`create_labeled_video`

dlclive.benchmark('/my/exported/model', 'my_video.avi', save_poses=True, save_video=True)

"""

+ path = Path(path)

+ video_path = Path(video_path)

+ if not video_path.exists():

+ raise ValueError(f"Could not find video: {video_path}: check that it exists!")

- ### load video

+ if output is None:

+ output = video_path.parent

+ else:

+ output = Path(output)

+ output.mkdir(exist_ok=True, parents=True)

- cap = cv2.VideoCapture(video_path)

+ # load video

+ cap = cv2.VideoCapture(str(video_path))

ret, frame = cap.read()

n_frames = (

n_frames

@@ -245,112 +223,107 @@ def benchmark(

else (cap.get(cv2.CAP_PROP_FRAME_COUNT) - 1)

)

n_frames = int(n_frames)

- im_size = (cap.get(cv2.CAP_PROP_FRAME_WIDTH), cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

-

- ### get resize factor

+ im_size = (

+ int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)),

+ int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)),

+ )

+ # get resize factor

if pixels is not None:

resize = np.sqrt(pixels / (im_size[0] * im_size[1]))

+

if resize is not None:

im_size = (int(im_size[0] * resize), int(im_size[1] * resize))

- ### create video writer

-

+ # create video writer

if save_video:

colors = None

- out_dir = (

- output

- if output is not None

- else os.path.dirname(os.path.realpath(video_path))

- )

- out_vid_base = os.path.basename(video_path)

- out_vid_file = os.path.normpath(

- f"{out_dir}/{os.path.splitext(out_vid_base)[0]}_DLCLIVE_LABELED.avi"

- )

+ out_vid_file = output / f"{video_path.stem}_DLCLIVE_LABELED.avi"

fourcc = cv2.VideoWriter_fourcc(*"DIVX")

fps = cap.get(cv2.CAP_PROP_FPS)

- vwriter = cv2.VideoWriter(out_vid_file, fourcc, fps, im_size)

-

- ### check for pandas installation if using save_poses flag

-

- if save_poses:

- try:

- import pandas as pd

-

- use_pandas = True

- except:

- use_pandas = False

- warnings.warn(

- "Could not find installation of pandas; saving poses as a numpy array with the dimensions (n_frames, n_keypoints, [x, y, likelihood])."

- )

-

- ### initialize DLCLive and perform inference

+ print(out_vid_file)

+ print(fourcc)

+ print(fps)

+ print(im_size)

+ vid_writer = cv2.VideoWriter(str(out_vid_file), fourcc, fps, im_size)

+ # initialize DLCLive and perform inference

inf_times = np.zeros(n_frames)

poses = []

live = DLCLive(

- model_path,

- tf_config=tf_config,

+ model_path=path,

+ single_animal=single_animal,

resize=resize,

cropping=cropping,

dynamic=dynamic,

display=display,

+ max_detections=max_detections,

pcutoff=pcutoff,

display_radius=display_radius,

display_cmap=cmap,

)

poses.append(live.init_inference(frame))

- TFGPUinference = True if len(live.outputs) == 1 else False

- iterator = range(n_frames) if (print_rate) or (display) else tqdm(range(n_frames))

- for i in iterator:

+ iterator = range(n_frames)

+ if not (print_rate or display):

+ iterator = tqdm(iterator)

+ for i in iterator:

ret, frame = cap.read()

-

if not ret:

warnings.warn(

- "Did not complete {:d} frames. There probably were not enough frames in the video {}.".format(

- n_frames, video_path

- )

+ f"Did not complete {n_frames:d} frames. There probably were not enough "

+ f"frames in the video {video_path}."

)

break

start_pose = time.time()

poses.append(live.get_pose(frame))

inf_times[i] = time.time() - start_pose

-

if save_video:

+ this_pose = poses[-1]

+

+ if single_animal:

+ # expand individual dimension

+ this_pose = this_pose[None]

+

+ num_idv, num_bpt = this_pose.shape[:2]

+ num_colors = num_bpt

if colors is None:

all_colors = getattr(cc, cmap)

colors = [

ImageColor.getcolor(c, "RGB")[::-1]

- for c in all_colors[:: int(len(all_colors) / poses[-1].shape[0])]

+ for c in all_colors[:: int(len(all_colors) / num_colors)]

]

- this_pose = poses[-1]

- for j in range(this_pose.shape[0]):

- if this_pose[j, 2] > pcutoff:

- x = int(this_pose[j, 0])

- y = int(this_pose[j, 1])

- frame = cv2.circle(

- frame, (x, y), display_radius, colors[j], thickness=-1

- )

+ for j in range(num_idv):

+ for k in range(num_bpt):

+ color_idx = k

+ if this_pose[j, k, 2] > pcutoff:

+ x = int(this_pose[j, k, 0])

+ y = int(this_pose[j, k, 1])

+ frame = cv2.circle(

+ frame,

+ (x, y),

+ display_radius,

+ colors[color_idx],

+ thickness=-1,

+ )

if resize is not None:

frame = cv2.resize(frame, im_size)

- vwriter.write(frame)

+ vid_writer.write(frame)

if print_rate:

- print("pose rate = {:d}".format(int(1 / inf_times[i])))

+ print(f"pose rate = {int(1 / inf_times[i]):d}")

if print_rate:

- print("mean pose rate = {:d}".format(int(np.mean(1 / inf_times))))

-

- ### gather video and test parameterization

+ print(f"mean pose rate = {int(np.mean(1 / inf_times)):d}")

+ # gather video and test parameterization

# dont want to fail here so gracefully failing on exception --

# eg. some packages of cv2 don't have CAP_PROP_CODEC_PIXEL_FORMAT

try:

@@ -360,17 +333,17 @@ def benchmark(

try:

fps = round(cap.get(cv2.CAP_PROP_FPS))

- except:

+ except Exception:

fps = None

try:

pix_fmt = decode_fourcc(cap.get(cv2.CAP_PROP_CODEC_PIXEL_FORMAT))

- except:

+ except Exception:

pix_fmt = ""

try:

frame_count = round(cap.get(cv2.CAP_PROP_FRAME_COUNT))

- except:

+ except Exception:

frame_count = None

try:

@@ -378,7 +351,7 @@ def benchmark(

round(cap.get(cv2.CAP_PROP_FRAME_WIDTH)),

round(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)),

)

- except:

+ except Exception:

orig_im_size = None

meta = {

@@ -391,333 +364,408 @@ def benchmark(

"dlclive_params": live.parameterization,

}

- ### close video and tensorflow session

-

+ # close video

cap.release()

- live.close()

-

if save_video:

- vwriter.release()

+ vid_writer.release()

if save_poses:

-

- cfg_path = os.path.normpath(f"{model_path}/pose_cfg.yaml")

- ruamel_file = ruamel.yaml.YAML()

- dlc_cfg = ruamel_file.load(open(cfg_path, "r"))

- bodyparts = dlc_cfg["all_joints_names"]

- poses = np.array(poses)

-

- if use_pandas:

-

- poses = poses.reshape((poses.shape[0], poses.shape[1] * poses.shape[2]))

- pdindex = pd.MultiIndex.from_product(

- [bodyparts, ["x", "y", "likelihood"]], names=["bodyparts", "coords"]

- )

- pose_df = pd.DataFrame(poses, columns=pdindex)

-

- out_dir = (

- output

- if output is not None

- else os.path.dirname(os.path.realpath(video_path))

- )

- out_vid_base = os.path.basename(video_path)

- out_dlc_file = os.path.normpath(

- f"{out_dir}/{os.path.splitext(out_vid_base)[0]}_DLCLIVE_POSES.h5"

+ bodyparts = live.cfg["metadata"]["bodyparts"]

+ max_idv = np.max([p.shape[0] for p in poses])

+

+ poses_array = -np.ones((len(poses), max_idv, len(bodyparts), 3))

+ for i, p in enumerate(poses):

+ num_det = len(p)

+ poses_array[i, :num_det] = p

+ poses = poses_array

+

+ num_frames, num_idv, num_bpts = poses.shape[:3]

+ individuals = [f"individual-{i}" for i in range(num_idv)]

+

+ if has_pandas:

+ poses = poses.reshape((num_frames, num_idv * num_bpts * 3))

+ col_index = pd.MultiIndex.from_product(

+ [individuals, bodyparts, ["x", "y", "likelihood"]],

+ names=["individual", "bodyparts", "coords"],

)

- pose_df.to_hdf(out_dlc_file, key="df_with_missing", mode="w")

+ pose_df = pd.DataFrame(poses, columns=col_index)

+

+ out_dlc_file = output / (video_path.stem + "_DLCLIVE_POSES.h5")

+ try:

+ pose_df.to_hdf(out_dlc_file, key="df_with_missing", mode="w")

+ except ImportError as err:

+ print(

+ "Cannot export predictions to H5 file. Install ``pytables`` extra "

+ f"to export to HDF: {err}"

+ )

+ out_csv = Path(out_dlc_file).with_suffix(".csv")

+ pose_df.to_csv(out_csv)

else:

-

- out_vid_base = os.path.basename(video_path)

- out_dlc_file = os.path.normpath(

- f"{out_dir}/{os.path.splitext(out_vid_base)[0]}_DLCLIVE_POSES.npy"

+ warnings.warn(

+ "Could not find installation of pandas; saving poses as a numpy array "

+ "with the dimensions (n_frames, n_individuals, n_keypoints, [x, y, likelihood])."

)

- np.save(out_dlc_file, poses)

+ np.save(str(output / (video_path.stem + "_DLCLIVE_POSES.npy")), poses)

- return inf_times, im_size, TFGPUinference, meta

+ return inf_times, im_size, meta

-def save_inf_times(

- sys_info, inf_times, im_size, TFGPUinference, model=None, meta=None, output=None

+def benchmark_videos(

+ video_path: str,

+ model_path: str,

+ model_type: str,

+ device: str,

+ precision: str = "FP32",

+ display=True,

+ pcutoff=0.5,

+ display_radius=5,

+ resize=None,

+ cropping=None, # Adding cropping to the function parameters

+ dynamic=(False, 0.5, 10),

+ save_poses=False,

+ save_dir="model_predictions",

+ draw_keypoint_names=False,

+ cmap="bmy",

+ get_sys_info=True,

+ save_video=False,

):

- """ Save inference time data collected using :function:`benchmark` with system information to a pickle file.

- This is primarily used through :function:`benchmark_videos`

-

+ """

+ Analyzes a video to track keypoints using a DeepLabCut model, and optionally saves

+ the keypoint data and the labeled video.

Parameters

----------

- sys_info : tuple

- system information generated by :func:`get_system_info`

- inf_times : :class:`numpy.ndarray`

- array of inference times generated by :func:`benchmark`

- im_size : tuple or :class:`numpy.ndarray`

- image size (width, height) for each benchmark run. If an array, each row corresponds to a row in inf_times

- TFGPUinference: bool

- flag if using tensorflow inference or numpy inference DLC model

- model: str, optional

- name of model

- meta : dict, optional

- metadata returned by :func:`benchmark`

- output : str, optional

- path to directory to save data. If None, uses pwd, by default None

+ video_path : str

+ Path to the video file to be analyzed.

+ model_path : str

+ Path to the DeepLabCut model.

+ model_type : str

+ Type of the model (e.g., 'onnx').

+ device : str

+ Device to run the model on ('cpu' or 'cuda').

+ precision : str, optional, default='FP32'

+ Precision type for the model ('FP32' or 'FP16').

+ display : bool, optional, default=True

+ Whether to display each frame with labelled keypoints.

+ pcutoff : float, optional, default=0.5

+ Probability cutoff below which keypoints are not visualized.

+ display_radius : int, optional, default=5

+ Radius of circles drawn for keypoints on video frames.

+ resize : tuple of int (width, height) or None, optional

+ Resize dimensions for video frames. e.g. if resize = 0.5, the video will be

+ processed in half the original size. If None, no resizing is applied.

+ cropping : list of int or None, optional

+ Cropping parameters [x1, x2, y1, y2] in pixels. If None, no cropping is applied.

+ dynamic : tuple (state, p cutoff, margin), optional, default=(False, 0.5, 10)

+ Parameters for dynamic cropping. If the state is true, then dynamic cropping

+ will be performed. That means that if an object is detected (i.e. any body part

+ > detection threshold), then object boundaries are computed according to the

+ smallest/largest x position and smallest/largest y position of all body parts.

+ This window is expanded by the margin and from then on only the posture within

+ this crop is analyzed (until the object is lost, i.e. detectiontreshold),

- then object boundaries are computed according to the smallest/largest x position and smallest/largest y position of all body parts. This window is

- expanded by the margin and from then on only the posture within this crop is analyzed (until the object is lost, i.e. `, by default "bmy"

- save_poses : bool, optional

- flag to save poses to an hdf5 file. If True, operates similar to :function:`DeepLabCut.benchmark_videos`, by default False

- save_video : bool, optional

- flag to save a labeled video. If True, operates similar to :function:`DeepLabCut.create_labeled_video`, by default False

+ # Get video writer setup

+ fourcc = cv2.VideoWriter_fourcc(*"mp4v")

+ fps = cap.get(cv2.CAP_PROP_FPS)

+ frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

+ frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

+

+ vwriter = cv2.VideoWriter(

+ filename=output_video_path,

+ fourcc=fourcc,

+ fps=fps,

+ frameSize=(frame_width, frame_height),

+ )

- Example

- -------

- Return a vector of inference times for 10000 frames on one video or two videos:

- dlclive.benchmark_videos('/my/exported/model', 'my_video.avi', n_frames=10000)

- dlclive.benchmark_videos('/my/exported/model', ['my_video1.avi', 'my_video2.avi'], n_frames=10000)

+ while True:

+ ret, frame = cap.read()

+ if not ret:

+ break

+ # if frame_index == 0:

+ # pose = dlc_live.init_inference(frame) # load DLC model

+ try:

+ # pose = dlc_live.get_pose(frame)

+ if frame_index == 0:

+ # TODO trying to fix issues with dynamic cropping jumping back and forth

+ # between dynamic cropped and original image

+ # dlc_live.dynamic = (False, dynamic[1], dynamic[2])

+ pose, inf_time = dlc_live.init_inference(frame) # load DLC model

+ else:

+ # dlc_live.dynamic = dynamic

+ pose, inf_time = dlc_live.get_pose(frame)

+ except Exception as e:

+ print(f"Error analyzing frame {frame_index}: {e}")

+ continue

+

+ poses.append({"frame": frame_index, "pose": pose})

+ times.append(inf_time)

+

+ if save_video:

+ # Visualize keypoints

+ this_pose = pose["poses"][0][0]

+ for j in range(this_pose.shape[0]):

+ if this_pose[j, 2] > pcutoff:

+ x, y = map(int, this_pose[j, :2])

+ cv2.circle(

+ frame,

+ center=(x, y),

+ radius=display_radius,

+ color=colors[j],

+ thickness=-1,

+ )

- Return a vector of inference times, testing full size and resizing images to half the width and height for inference, for two videos

- dlclive.benchmark_videos('/my/exported/model', ['my_video1.avi', 'my_video2.avi'], n_frames=10000, resize=[1.0, 0.5])

+ if draw_keypoint_names:

+ cv2.putText(

+ frame,

+ text=bodyparts[j],

+ org=(x + 10, y),

+ fontFace=cv2.FONT_HERSHEY_SIMPLEX,

+ fontScale=0.5,

+ color=colors[j],

+ thickness=1,

+ lineType=cv2.LINE_AA,

+ )

+

+ vwriter.write(image=frame)

+ frame_index += 1

- Display keypoints to check the accuracy of an exported model

- dlclive.benchmark_videos('/my/exported/model', 'my_video.avi', display=True)

+ cap.release()

+ if save_video:

+ vwriter.release()

- Analyze a video (save poses to hdf5) and create a labeled video, similar to :function:`DeepLabCut.benchmark_videos` and :function:`create_labeled_video`

- dlclive.benchmark_videos('/my/exported/model', 'my_video.avi', save_poses=True, save_video=True)

- """

+ if get_sys_info:

+ print(get_system_info())

- # convert video_paths to list

+ if save_poses:

+ save_poses_to_files(video_path, save_dir, bodyparts, poses, timestamp=timestamp)

- video_path = video_path if type(video_path) is list else [video_path]

+ return poses, times

- # fix resize

- if pixels:

- pixels = pixels if type(pixels) is list else [pixels]

- resize = [None for p in pixels]

- elif resize:

- resize = resize if type(resize) is list else [resize]

- pixels = [None for r in resize]

- else:

- resize = [None]

- pixels = [None]

-

- # loop over videos

-

- for v in video_path:

-

- # initialize full inference times

-

- inf_times = []

- im_size_out = []

-

- for i in range(len(resize)):

-

- print(f"\nRun {i+1} / {len(resize)}\n")

-

- this_inf_times, this_im_size, TFGPUinference, meta = benchmark(

- model_path,

- v,

- tf_config=tf_config,

- resize=resize[i],

- pixels=pixels[i],

- cropping=cropping,

- dynamic=dynamic,

- n_frames=n_frames,

- print_rate=print_rate,

- display=display,

- pcutoff=pcutoff,

- display_radius=display_radius,

- cmap=cmap,

- save_poses=save_poses,

- save_video=save_video,

- output=output,

- )

+def save_poses_to_files(video_path, save_dir, bodyparts, poses, timestamp):

+ """

+ Saves the detected keypoint poses from the video to CSV and HDF5 files.

- inf_times.append(this_inf_times)

- im_size_out.append(this_im_size)

+ Parameters

+ ----------

+ video_path : str

+ Path to the analyzed video file.

+ save_dir : str

+ Directory where the pose data files will be saved.

+ bodyparts : list of str

+ List of body part names corresponding to the keypoints.

+ poses : list of dict

+ List of dictionaries containing frame numbers and corresponding pose data.

+ timestamp : str

+ Timestamp embedded in the output file names.

- inf_times = np.array(inf_times)

- im_size_out = np.array(im_size_out)

+ Returns

+ -------

+ None

+ """

- # save results

+ base_filename = os.path.splitext(os.path.basename(video_path))[0]

+ csv_save_path = os.path.join(save_dir, f"{base_filename}_poses_{timestamp}.csv")

+ h5_save_path = os.path.join(save_dir, f"{base_filename}_poses_{timestamp}.h5")

- if output is not None:

- sys_info = get_system_info()

- save_inf_times(

- sys_info,

- inf_times,

- im_size_out,

- TFGPUinference,

- model=os.path.basename(model_path),

- meta=meta,

- output=output,

- )

+ # Save to CSV

+ with open(csv_save_path, mode="w", newline="") as file:

+ writer = csv.writer(file)

+ header = ["frame"] + [

+ f"{bp}_{axis}" for bp in bodyparts for axis in ["x", "y", "confidence"]

+ ]

+ writer.writerow(header)

+ for entry in poses:

+ frame_num = entry["frame"]

+ pose = entry["pose"]["poses"][0][0]

+ row = [frame_num] + [

+ item.item() if isinstance(item, torch.Tensor) else item

+ for kp in pose

+ for item in kp

+ ]

+ writer.writerow(row)

+

+

+import argparse

+import os

def main():

- """Provides a command line interface :function:`benchmark_videos`

- """

+ """Provides a command line interface to benchmark_videos function."""

+ parser = argparse.ArgumentParser(

+ description="Analyze a video using a DeepLabCut model and visualize keypoints."

+ )

+ parser.add_argument("model_path", type=str, help="Path to the model.")

+ parser.add_argument("video_path", type=str, help="Path to the video file.")

+ parser.add_argument("model_type", type=str, help="Type of the model (e.g., 'DLC').")

+ parser.add_argument(

+ "device", type=str, help="Device to run the model on (e.g., 'cuda' or 'cpu')."

+ )

+ parser.add_argument(

+ "-p",

+ "--precision",

+ type=str,

+ default="FP32",

+ help="Model precision (e.g., 'FP32', 'FP16').",

+ )

+ parser.add_argument(

+ "-d", "--display", action="store_true", help="Display keypoints on the video."

+ )

+ parser.add_argument(

+ "-c",

+ "--pcutoff",

+ type=float,

+ default=0.5,

+ help="Probability cutoff for keypoints visualization.",

+ )

+ parser.add_argument(

+ "-dr",

+ "--display-radius",

+ type=int,

+ default=5,

+ help="Radius of keypoint circles in the display.",

+ )

+ parser.add_argument(

+ "-r",

+ "--resize",

+ type=int,

+ nargs=2,

+ default=None,

+ help="Resize video frames to [width, height].",

+ )

+ parser.add_argument(

+ "-x",

+ "--cropping",

+ type=int,

+ nargs=4,

+ default=None,

+ help="Cropping parameters [x1, x2, y1, y2].",

+ )

+ parser.add_argument(

+ "-y",

+ "--dynamic",

+ type=float,

+ nargs=3,

+ default=[False, 0.5, 10],

+ help="Dynamic cropping [flag, pcutoff, margin].",

+ )

+ parser.add_argument(

+ "--save-poses", action="store_true", help="Save the keypoint poses to files."

+ )

+ parser.add_argument(

+ "--save-video",

+ action="store_true",

+ help="Save the output video with keypoints.",

+ )

+ parser.add_argument(

+ "--save-dir",

+ type=str,

+ default="model_predictions",

+ help="Directory to save output files.",

+ )

+ parser.add_argument(

+ "--draw-keypoint-names",

+ action="store_true",

+ help="Draw keypoint names on the video.",

+ )

+ parser.add_argument(

+ "--cmap", type=str, default="bmy", help="Colormap for keypoints visualization."

+ )

+ parser.add_argument(

+ "--no-sys-info",

+ action="store_false",

+ help="Do not print system info.",

+ dest="get_sys_info",

+ )

- import argparse

-

- parser = argparse.ArgumentParser()

- parser.add_argument("model_path", type=str)

- parser.add_argument("video_path", type=str, nargs="+")

- parser.add_argument("-o", "--output", type=str, default=None)

- parser.add_argument("-n", "--n-frames", type=int, default=1000)

- parser.add_argument("-r", "--resize", type=float, nargs="+")

- parser.add_argument("-p", "--pixels", type=float, nargs="+")

- parser.add_argument("-v", "--print-rate", default=False, action="store_true")

- parser.add_argument("-d", "--display", default=False, action="store_true")

- parser.add_argument("-l", "--pcutoff", default=0.5, type=float)

- parser.add_argument("-s", "--display-radius", default=3, type=int)

- parser.add_argument("-c", "--cmap", type=str, default="bmy")

- parser.add_argument("--cropping", nargs="+", type=int, default=None)

- parser.add_argument("--dynamic", nargs="+", type=float, default=[])

- parser.add_argument("--save-poses", action="store_true")

- parser.add_argument("--save-video", action="store_true")

args = parser.parse_args()

- if (args.cropping) and (len(args.cropping) < 4):

- raise Exception(

- "Cropping not properly specified. Must provide 4 values: x1, x2, y1, y2"

- )

-

- if not args.dynamic:

- args.dynamic = (False, 0.5, 10)

- elif len(args.dynamic) < 3:

- raise Exception(

- "Dynamic cropping not properly specified. Must provide three values: 0 or 1 as boolean flag, pcutoff, and margin"

- )

- else:

- args.dynamic = (bool(args.dynamic[0]), args.dynamic[1], args.dynamic[2])

-

+ # Call the benchmark_videos function with the parsed arguments

benchmark_videos(

- args.model_path,

- args.video_path,

- output=args.output,

- resize=args.resize,

- pixels=args.pixels,

- cropping=args.cropping,

- dynamic=args.dynamic,

- n_frames=args.n_frames,

- print_rate=args.print_rate,

+ video_path=args.video_path,

+ model_path=args.model_path,

+ model_type=args.model_type,

+ device=args.device,

+ precision=args.precision,

display=args.display,

pcutoff=args.pcutoff,

display_radius=args.display_radius,

- cmap=args.cmap,

+ resize=tuple(args.resize) if args.resize else None,

+ cropping=args.cropping,

+ dynamic=tuple(args.dynamic),

save_poses=args.save_poses,

+ save_dir=args.save_dir,

+ draw_keypoint_names=args.draw_keypoint_names,

+ cmap=args.cmap,

+ get_sys_info=args.get_sys_info,

save_video=args.save_video,

)
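
Reviewer note: the pose-saving branch of `benchmark` pads each frame's detections to a common number of individuals, then flattens the result into columns labelled by individual, body part and coordinate. The following is a minimal sketch of that idea, not the code in the patch. It assumes every frame yields an array of shape `(num_detections, num_bodyparts, 3)`. The helper names `pad_poses` and `column_labels` are hypothetical and not part of dlclive, and plain tuples stand in for the `pd.MultiIndex` so the sketch needs only NumPy.

```python
import itertools

import numpy as np


def pad_poses(poses, num_bodyparts, fill_value=-1.0):
    """Stack per-frame pose arrays into one (frames, individuals, bodyparts, 3) array.

    Frames with fewer detections than the maximum are padded with fill_value.
    """
    max_idv = max(p.shape[0] for p in poses)
    padded = np.full((len(poses), max_idv, num_bodyparts, 3), fill_value)
    for i, p in enumerate(poses):
        # Copy the detections that exist; the remaining rows keep fill_value.
        padded[i, : p.shape[0]] = p
    return padded


def column_labels(num_individuals, bodyparts):
    """Return one (individual, bodypart, coord) label per flattened column."""
    individuals = [f"individual-{i}" for i in range(num_individuals)]
    return list(itertools.product(individuals, bodyparts, ["x", "y", "likelihood"]))


# Hypothetical data: frame 0 has one detection, frame 1 has two.
bodyparts = ["nose", "tail"]
poses = [np.zeros((1, 2, 3)), np.ones((2, 2, 3))]

padded = pad_poses(poses, len(bodyparts))
flat = padded.reshape(len(poses), -1)  # one row per frame
labels = column_labels(padded.shape[1], bodyparts)
```

The order of `labels` matches the row-major order of `reshape`, so `flat[:, k]` holds the values described by `labels[k]`. This is the same ordering `pd.MultiIndex.from_product` produces for the three lists.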

diff --git a/dlclive/benchmark_pytorch.py b/dlclive/benchmark_pytorch.py

new file mode 100644

index 0000000..bd5826f

--- /dev/null

+++ b/dlclive/benchmark_pytorch.py

@@ -0,0 +1,484 @@

+import csv

+import os

+import platform

+import subprocess

+import sys

+import time

+

+import colorcet as cc

+import cv2

+import h5py

+import numpy as np

+import torch

+from PIL import ImageColor

+from pip._internal.operations import freeze

+

+from dlclive import DLCLive

+from dlclive.version import VERSION

+

+

+def get_system_info() -> dict:

+ """

+ Returns a summary of system information relevant to running benchmarking.

+

+ Returns

+ -------

+ dict

+ A dictionary containing the following system information:

+ - host_name (str): Name of the machine.

+ - op_sys (str): Operating system.

+ - python (str): Path to the Python executable, indicating the conda/virtual environment in use.

+ - device_type (str): Type of device used ('GPU' or 'CPU').

+ - device (list): List containing the name of the GPU or CPU brand.

+ - freeze (list): List of installed Python packages with their versions.

+ - python_version (str): Version of Python in use.

+ - git_hash (str or None): If installed from git repository, hash of HEAD commit.

+ - dlclive_version (str): Version of the DLCLive package.

+ """

+

+ # Get OS and host name

+ op_sys = platform.platform()

+ host_name = platform.node().replace(" ", "")

+

+ # Get Python executable path

+ if platform.system() == "Windows":

+ host_python = sys.executable.split(os.path.sep)[-2]

+ else:

+ host_python = sys.executable.split(os.path.sep)[-3]

+

+ # Try to get git hash if possible

+ git_hash = None

+ dlc_basedir = os.path.dirname(os.path.dirname(__file__))

+ try:

+ git_hash = (

+ subprocess.check_output(["git", "rev-parse", "HEAD"], cwd=dlc_basedir)

+ .decode("utf-8")

+ .strip()

+ )

+ except subprocess.CalledProcessError:

+ # Not installed from git repo, e.g., pypi

+ pass

+

+ # Get device info (GPU or CPU)

+ if torch.cuda.is_available():

+ dev_type = "GPU"

+ dev = [torch.cuda.get_device_name(torch.cuda.current_device())]

+ else:

+ from cpuinfo import get_cpu_info

+

+ dev_type = "CPU"

+ dev = [get_cpu_info()["brand_raw"]]

+

+ return {

+ "host_name": host_name,

+ "op_sys": op_sys,

+ "python": host_python,

+ "device_type": dev_type,

+ "device": dev,

+ "freeze": list(freeze.freeze()),

+ "python_version": sys.version,

+ "git_hash": git_hash,

+ "dlclive_version": VERSION,

+ }

+

+

+def analyze_video(

+ video_path: str,

+ model_path: str,

+ model_type: str,

+ device: str,

+ precision: str = "FP32",

+ snapshot: str = None,

+ display=True,

+ pcutoff=0.5,

+ display_radius=5,

+ resize=None,

+ cropping=None, # Adding cropping to the function parameters

+ dynamic=(False, 0.5, 10),

+ save_poses=False,

+ save_dir="model_predictions",

+ draw_keypoint_names=False,

+ cmap="bmy",

+ get_sys_info=True,

+ save_video=False,

+):

+ """

+ Analyzes a video to track keypoints using a DeepLabCut model, and optionally saves the keypoint data and the labeled video.

+

+ Parameters

+ ----------

+ video_path : str

+ Path to the video file to be analyzed.

+ model_path : str

+ Path to the DeepLabCut model.

+ model_type : str

+ Type of the model (e.g., 'onnx').

+ device : str

+ Device to run the model on ('cpu' or 'cuda').

+ precision : str, optional, default='FP32'

+ Precision type for the model ('FP32' or 'FP16').

+ snapshot : str, optional

+ Snapshot to use for the model, if using pytorch as model type.

+ display : bool, optional, default=True

+ Whether to display each frame with labelled keypoints.

+ pcutoff : float, optional, default=0.5

+ Probability cutoff below which keypoints are not visualized.

+ display_radius : int, optional, default=5

+ Radius of circles drawn for keypoints on video frames.

+ resize : tuple of int (width, height) or None, optional

+ Resize dimensions for video frames. e.g. if resize = 0.5, the video will be processed in half the original size. If None, no resizing is applied.

+ cropping : list of int or None, optional

+ Cropping parameters [x1, x2, y1, y2] in pixels. If None, no cropping is applied.

+ dynamic : tuple (state, p cutoff, margin), optional, default=(False, 0.5, 10)

+ Parameters for dynamic cropping. If the state is true, then dynamic cropping will be performed. That means that if an object is detected (i.e. any body part > detection threshold), then object boundaries are computed according to the smallest/largest x position and smallest/largest y position of all body parts. This window is expanded by the margin and from then on only the posture within this crop is analyzed (until the object is lost, i.e. pcutoff:

+ x, y = map(int, this_pose[j, :2])

+ cv2.circle(

+ frame,

+ center=(x, y),

+ radius=display_radius,

+ color=colors[j],

+ thickness=-1,

+ )

+

+ if draw_keypoint_names:

+ cv2.putText(

+ frame,

+ text=bodyparts[j],

+ org=(x + 10, y),

+ fontFace=cv2.FONT_HERSHEY_SIMPLEX,

+ fontScale=0.5,

+ color=colors[j],

+ thickness=1,

+ lineType=cv2.LINE_AA,

+ )

+

+ vwriter.write(image=frame)

+ frame_index += 1

+

+ cap.release()

+ if save_video:

+ vwriter.release()

+

+ if get_sys_info:

+ print(get_system_info())

+

+ if save_poses:

+ save_poses_to_files(video_path, save_dir, bodyparts, poses, timestamp=timestamp)

+

+ return poses, times

+

+

+def save_poses_to_files(video_path, save_dir, bodyparts, poses, timestamp):

+ """

+ Saves the detected keypoint poses from the video to CSV and HDF5 files.

+

+ Parameters

+ ----------

+ video_path : str

+ Path to the analyzed video file.

+ save_dir : str

+ Directory where the pose data files will be saved.

+ bodyparts : list of str

+ List of body part names corresponding to the keypoints.

+ poses : list of dict

+ List of dictionaries containing frame numbers and corresponding pose data.

+ timestamp : str

+ Timestamp embedded in the output file names.

+

+ Returns

+ -------

+ None

+ """

+

+ base_filename = os.path.splitext(os.path.basename(video_path))[0]

+ csv_save_path = os.path.join(save_dir, f"{base_filename}_poses_{timestamp}.csv")

+ h5_save_path = os.path.join(save_dir, f"{base_filename}_poses_{timestamp}.h5")

+

+ # Save to CSV

+ with open(csv_save_path, mode="w", newline="") as file:

+ writer = csv.writer(file)

+ header = ["frame"] + [

+ f"{bp}_{axis}" for bp in bodyparts for axis in ["x", "y", "confidence"]

+ ]

+ writer.writerow(header)

+ for entry in poses:

+ frame_num = entry["frame"]

+ pose = entry["pose"]["poses"][0][0]

+ row = [frame_num] + [

+ item.item() if isinstance(item, torch.Tensor) else item

+ for kp in pose

+ for item in kp

+ ]

+ writer.writerow(row)

+

+ # Save to HDF5

+ with h5py.File(h5_save_path, "w") as hf:

+ hf.create_dataset(name="frames", data=[entry["frame"] for entry in poses])

+ for i, bp in enumerate(bodyparts):

+ hf.create_dataset(

+ name=f"{bp}_x",

+ data=[

+ (

+ entry["pose"]["poses"][0][0][i, 0].item()

+ if isinstance(entry["pose"]["poses"][0][0][i, 0], torch.Tensor)

+ else entry["pose"]["poses"][0][0][i, 0]

+ )

+ for entry in poses

+ ],

+ )

+ hf.create_dataset(

+ name=f"{bp}_y",

+ data=[

+ (

+ entry["pose"]["poses"][0][0][i, 1].item()

+ if isinstance(entry["pose"]["poses"][0][0][i, 1], torch.Tensor)

+ else entry["pose"]["poses"][0][0][i, 1]

+ )

+ for entry in poses

+ ],

+ )

+ hf.create_dataset(

+ name=f"{bp}_confidence",

+ data=[

+ (

+ entry["pose"]["poses"][0][0][i, 2].item()

+ if isinstance(entry["pose"]["poses"][0][0][i, 2], torch.Tensor)

+ else entry["pose"]["poses"][0][0][i, 2]

+ )

+ for entry in poses

+ ],

+ )

+

+

+import argparse

+import os

+

+

+def main():

+ """Provides a command line interface to analyze_video function."""

+

+ parser = argparse.ArgumentParser(

+ description="Analyze a video using a DeepLabCut model and visualize keypoints."

+ )

+ parser.add_argument("model_path", type=str, help="Path to the model.")

+ parser.add_argument("video_path", type=str, help="Path to the video file.")

+ parser.add_argument("model_type", type=str, help="Type of the model (e.g., 'DLC').")

+ parser.add_argument(

+ "device", type=str, help="Device to run the model on (e.g., 'cuda' or 'cpu')."

+ )

+ parser.add_argument(

+ "-p",

+ "--precision",

+ type=str,

+ default="FP32",

+ help="Model precision (e.g., 'FP32', 'FP16').",

+ )

+ parser.add_argument(

+ "-s",

+ "--snapshot",

+ type=str,

+ default=None,

+ help="Path to a specific model snapshot.",

+ )

+ parser.add_argument(

+ "-d", "--display", action="store_true", help="Display keypoints on the video."

+ )

+ parser.add_argument(

+ "-c",

+ "--pcutoff",

+ type=float,

+ default=0.5,

+ help="Probability cutoff for keypoints visualization.",

+ )

+ parser.add_argument(

+ "-dr",

+ "--display-radius",

+ type=int,

+ default=5,

+ help="Radius of keypoint circles in the display.",

+ )

+ parser.add_argument(

+ "-r",

+ "--resize",

+ type=int,

+ nargs=2,

+ default=None,

+ help="Resize video frames to [width, height].",

+ )

+ parser.add_argument(

+ "-x",

+ "--cropping",

+ type=int,

+ nargs=4,

+ default=None,

+ help="Cropping parameters [x1, x2, y1, y2].",

+ )

+ parser.add_argument(

+ "-y",

+ "--dynamic",

+ type=float,

+ nargs=3,

+ default=[False, 0.5, 10],

+ help="Dynamic cropping [flag, pcutoff, margin].",

+ )

+ parser.add_argument(

+ "--save-poses", action="store_true", help="Save the keypoint poses to files."

+ )

+ parser.add_argument(

+ "--save-video",

+ action="store_true",

+ help="Save the output video with keypoints.",

+ )

+ parser.add_argument(

+ "--save-dir",

+ type=str,

+ default="model_predictions",

+ help="Directory to save output files.",

+ )

+ parser.add_argument(

+ "--draw-keypoint-names",

+ action="store_true",

+ help="Draw keypoint names on the video.",

+ )

+ parser.add_argument(

+ "--cmap", type=str, default="bmy", help="Colormap for keypoints visualization."

+ )

+ parser.add_argument(

+ "--no-sys-info",

+ action="store_false",

+ help="Do not print system info.",

+ dest="get_sys_info",

+ )

+

+ args = parser.parse_args()

+

+ # Call the analyze_video function with the parsed arguments

+ analyze_video(

+ video_path=args.video_path,

+ model_path=args.model_path,

+ model_type=args.model_type,

+ device=args.device,

+ precision=args.precision,

+ snapshot=args.snapshot,

+ display=args.display,

+ pcutoff=args.pcutoff,

+ display_radius=args.display_radius,

+ resize=tuple(args.resize) if args.resize else None,

+ cropping=args.cropping,

+ dynamic=tuple(args.dynamic),

+ save_poses=args.save_poses,

+ save_dir=args.save_dir,

+ draw_keypoint_names=args.draw_keypoint_names,

+ cmap=args.cmap,

+ get_sys_info=args.get_sys_info,

+ save_video=args.save_video,

+ )

+

+

+if __name__ == "__main__":

+ main()
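
Reviewer note: the CLI passes `--resize` and `--dynamic` through `tuple(...)` before calling `analyze_video`. The following is a minimal sketch of one way to parse a two-value resize and a three-value dynamic-cropping option and normalize them. The function names `build_parser` and `normalize` are hypothetical. The boolean conversion of the first `--dynamic` value mirrors the `bool(args.dynamic[0])` handling in the removed `benchmark.py` CLI, and treating `--resize` as exactly two integers is an assumption based on its help text.

```python
import argparse


def build_parser():
    """Build a parser containing only the two options discussed here."""
    parser = argparse.ArgumentParser(description="Sketch of resize/dynamic parsing.")
    parser.add_argument(
        "-r", "--resize", type=int, nargs=2, default=None,
        metavar=("WIDTH", "HEIGHT"), help="Resize video frames to [width, height].",
    )
    parser.add_argument(
        "-y", "--dynamic", type=float, nargs=3, default=[0.0, 0.5, 10.0],
        metavar=("FLAG", "PCUTOFF", "MARGIN"),
        help="Dynamic cropping [flag (0 or 1), pcutoff, margin].",
    )
    return parser


def normalize(args):
    """Convert parsed lists into the tuples expected by the analysis function."""
    resize = tuple(args.resize) if args.resize else None
    flag, pcutoff, margin = args.dynamic
    # argparse yields floats for all three values; the flag must become a bool.
    dynamic = (bool(flag), pcutoff, margin)
    return resize, dynamic


parser = build_parser()
resize, dynamic = normalize(parser.parse_args(["-r", "640", "480", "-y", "1", "0.6", "20"]))
default_resize, default_dynamic = normalize(parser.parse_args([]))
```

With `nargs=2`, argparse rejects a single-value `--resize` at parse time, so the later `tuple(...)` call never receives a bare integer.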

diff --git a/dlclive/benchmark_tf.py b/dlclive/benchmark_tf.py

new file mode 100644

index 0000000..d955496

--- /dev/null

+++ b/dlclive/benchmark_tf.py

@@ -0,0 +1,717 @@

+"""

+DeepLabCut Toolbox (deeplabcut.org)

+© A. & M. Mathis Labs

+

+Licensed under GNU Lesser General Public License v3.0

+"""

+

+import os

+import pickle

+import platform

+import subprocess

+import sys

+import time

+import typing

+import warnings

+

+import colorcet as cc

+import ruamel

+from PIL import ImageColor

+

+try:

+ from pip._internal.operations import freeze

+except ImportError:

+ from pip.operations import freeze

+

+import cv2

+import numpy as np

+import tensorflow as tf

+from dlclive import VERSION, DLCLive

+from dlclive import __file__ as dlcfile

+from dlclive.utils import decode_fourcc

+from tqdm import tqdm

+

+

+def download_benchmarking_data(

+ target_dir=".",

+ url="http://deeplabcut.rowland.harvard.edu/datasets/dlclivebenchmark.tar.gz",

+):

+ """

+ Downloads the DeepLabCut-Live benchmarking data (videos & DLC models).

+ """

+ import tarfile

+ import urllib.request

+

+ from tqdm import tqdm

+

+ def show_progress(count, block_size, total_size):

+ pbar.update(block_size)

+

+ def tarfilenamecutting(tarf):

+ """Auxiliary function that strips the top-level folder from each member path,

+ i.e. /xyz-trainsetxyshufflez/

+ """

+ for memberid, member in enumerate(tarf.getmembers()):

+ if memberid == 0:

+ parent = str(member.path)

+ l = len(parent) + 1

+ if member.path.startswith(parent):

+ member.path = member.path[l:]

+ yield member

+

+ response = urllib.request.urlopen(url)

+ print(

+ "Downloading the benchmarking data from the DeepLabCut server @Harvard -> Go Crimson!!! {}....".format(

+ url

+ )

+ )

+ total_size = int(response.getheader("Content-Length"))

+ pbar = tqdm(unit="B", total=total_size, position=0)

+ filename, _ = urllib.request.urlretrieve(url, reporthook=show_progress)

+ with tarfile.open(filename, mode="r:gz") as tar:

+ tar.extractall(target_dir, members=tarfilenamecutting(tar))

+

+

+def get_system_info() -> dict:

+ """Return summary info for system running benchmark

+ Returns

+ -------

+ dict

+ Dictionary containing the following system information:

+ * ``host_name`` (str): name of machine

+ * ``op_sys`` (str): operating system

+ * ``python`` (str): path to python (which conda/virtual environment)

+ * ``device_type`` (str): device type (``'GPU'`` or ``'CPU'``)

+ * ``device`` (list): device information

+ * ``freeze`` (list): list of installed packages and versions

+ * ``python_version`` (str): python version

+ * ``git_hash`` (str, None): If installed from git repository, hash of HEAD commit

+ * ``dlclive_version`` (str): dlclive version from :data:`dlclive.VERSION`

+ """

+

+ # get os

+

+ op_sys = platform.platform()

+ host_name = platform.node().replace(" ", "")

+

+ # Name of the conda/virtual environment, taken from the path of the Python interpreter (sys.executable).

+ if platform.system() == "Windows":

+ host_python = sys.executable.split(os.path.sep)[-2]

+ else:

+ host_python = sys.executable.split(os.path.sep)[-3]

+

+ # try to get git hash if possible

+ dlc_basedir = os.path.dirname(os.path.dirname(dlcfile))

+ git_hash = None

+ try:

+ git_hash = subprocess.check_output(

+ ["git", "rev-parse", "HEAD"], cwd=dlc_basedir

+ )

+ git_hash = git_hash.decode("utf-8").rstrip("\n")

+ except (subprocess.CalledProcessError, FileNotFoundError):

+ # not installed from git repo (e.g. pypi), or git is not available

+ # fine, pass quietly

+ pass

+

+ # get device info (GPU or CPU)

+ dev = None

+ if tf.test.is_gpu_available():

+ gpu_name = tf.test.gpu_device_name()

+ from tensorflow.python.client import device_lib

+

+ dev_desc = [

+ d.physical_device_desc

+ for d in device_lib.list_local_devices()

+ if d.name == gpu_name

+ ]

+ dev = [d.split(",")[1].split(":")[1].strip() for d in dev_desc]

+ dev_type = "GPU"

+ else:

+ from cpuinfo import get_cpu_info

+

+ dev = [get_cpu_info()["brand"]]

+ dev_type = "CPU"

+

+ return {

+ "host_name": host_name,

+ "op_sys": op_sys,

+ "python": host_python,

+ "device_type": dev_type,

+ "device": dev,

+ # pip freeze to get versions of all packages

+ "freeze": list(freeze.freeze()),

+ "python_version": sys.version,

+ "git_hash": git_hash,

+ "dlclive_version": VERSION,

+ }

+

+

+def benchmark(

+ model_path,

+ video_path,

+ tf_config=None,

+ resize=None,

+ pixels=None,

+ cropping=None,

+ dynamic=(False, 0.5, 10),

+ n_frames=1000,

+ print_rate=False,

+ display=False,

+ pcutoff=0.0,

+ display_radius=3,

+ cmap="bmy",

+ save_poses=False,

+ save_video=False,

+ output=None,

+) -> typing.Tuple[np.ndarray, tuple, bool, dict]:

+ """Analyze DeepLabCut-live exported model on a video:

+ Calculate inference time,

+ display keypoints, or

+ get poses/create a labeled video

+

+ Parameters

+ ----------

+ model_path : str

+ path to exported DeepLabCut model

+ video_path : str

+ path to video file

+ tf_config : :class:`tensorflow.ConfigProto`

+ tensorflow session configuration

+ resize : int, optional

+ resize factor. Can only use one of resize or pixels. If both are provided, pixels is used, by default None

+ pixels : int, optional

+ downsize image to this number of pixels, maintaining aspect ratio. Can only use one of resize or pixels. If both are provided, pixels is used, by default None

+ cropping : list of int

+ cropping parameters in pixel number: [x1, x2, y1, y2]

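+ dynamic: triple containing (state, detectionthreshold, margin)

+ If the state is true, then dynamic cropping will be performed. That means that if an object is detected (i.e. any body part > detectionthreshold),

+ then object boundaries are computed according to the smallest/largest x position and smallest/largest y position of all body parts. This window is

+ expanded by the margin and from then on only the posture within this crop is analyzed (until the object is lost, i.e. < detectionthreshold).

+ n_frames : int, optional

+ number of frames to run inference on, by default 1000

+ print_rate : bool, optional

+ flag to print the inference rate, by default False

+ display : bool, optional

+ flag to display keypoints on the video frames, by default False

+ pcutoff : float, optional

+ likelihood threshold for displaying keypoints, by default 0.0

+ display_radius : int, optional

+ radius (in pixels) of displayed keypoints, by default 3

+ cmap : str, optional

+ name of the colorcet colormap used for keypoints, by default "bmy"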

+ save_poses : bool, optional

+ flag to save poses to an hdf5 file. If True, operates similarly to :func:`DeepLabCut.benchmark_videos`, by default False

+ save_video : bool, optional

+ flag to save a labeled video. If True, operates similarly to :func:`DeepLabCut.create_labeled_video`, by default False

+ output : str, optional

+ path to directory to save pose and/or video file. If not specified, will use the directory of video_path, by default None

+

+ Returns

+ -------